Automatic differentiation tools such as PyTorch's autograd can compute the gradient penalty in Wasserstein GANs with gradient penalty (WGAN-GP). They do this by differentiating the discriminator's (critic's) output with respect to its input.
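For reference, here is the critic loss proposed in the WGAN-GP paper (Gulrajani et al., 2017). Here $x$ is a real sample, $\tilde{x}$ a generated sample, and $\hat{x}$ a random interpolate between them. Only the last term requires differentiating $D$ with respect to its input:

$$
L_D = \mathbb{E}_{\tilde{x}\sim\mathbb{P}_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x\sim\mathbb{P}_r}\big[D(x)\big] + \lambda\,\mathbb{E}_{\hat{x}\sim\mathbb{P}_{\hat{x}}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1-\epsilon)\tilde{x},\quad \epsilon \sim U[0,1]
$$

The paper uses $\lambda = 10$.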

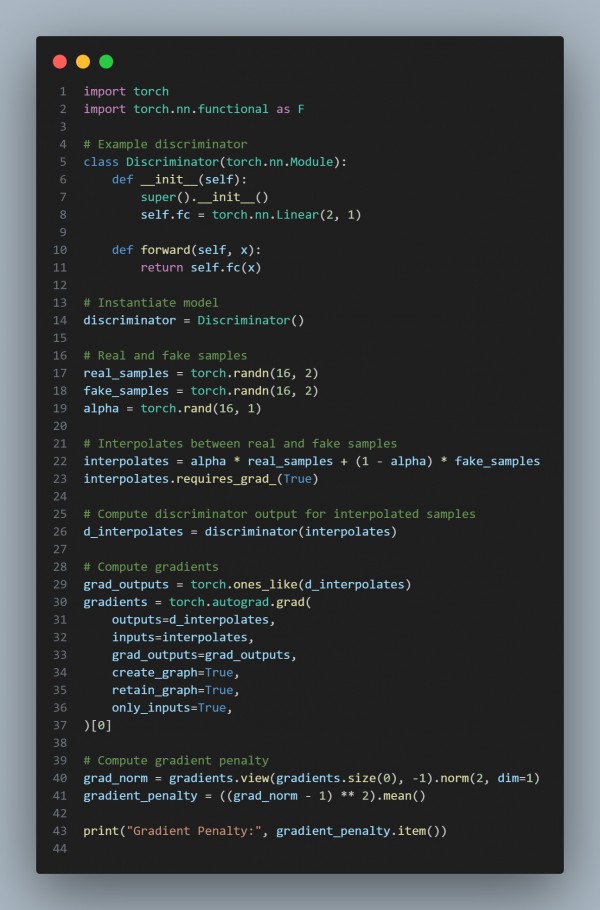

Here is the code snippet you can refer to:

The code above performs the following steps:

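- Interpolation: Mix real and fake samples using a random weight ε in [0, 1] drawn per sample. This produces points lying between the real and generated distributions.

- torch.autograd.grad: Compute the gradients of the discriminator's output with respect to these interpolates. The call needs `create_graph=True` so that the penalty itself can be backpropagated into the discriminator's parameters.

- Gradient Penalty: Penalize deviations of each sample's gradient L2 norm from 1, scaled by a coefficient λ.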
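The three steps can be sketched in PyTorch as follows. This is a minimal illustration and not the code from the screenshot. The function name `gradient_penalty`, the `lambda_gp=10.0` default (the value used in the WGAN-GP paper), and the assumption that the critic returns one score per sample are all illustrative choices.

```python
import torch
import torch.nn as nn


def gradient_penalty(critic: nn.Module, real: torch.Tensor, fake: torch.Tensor,
                     lambda_gp: float = 10.0) -> torch.Tensor:
    """Hypothetical helper: lambda_gp * mean((||grad_x D(x_hat)||_2 - 1)^2)."""
    batch_size = real.size(0)

    # 1. Interpolation: one epsilon ~ U[0, 1] per sample, shaped to broadcast
    #    over the remaining dimensions (e.g. channels, height, width).
    eps_shape = (batch_size,) + (1,) * (real.dim() - 1)
    eps = torch.rand(eps_shape, device=real.device, dtype=real.dtype)
    interpolates = eps * real.detach() + (1 - eps) * fake.detach()
    interpolates.requires_grad_(True)  # we need d(score)/d(input)

    scores = critic(interpolates)

    # 2. torch.autograd.grad: gradient of the scores w.r.t. the interpolates.
    #    create_graph=True keeps this gradient differentiable, so calling
    #    backward() on the penalty updates the critic's parameters.
    grads = torch.autograd.grad(
        outputs=scores,
        inputs=interpolates,
        grad_outputs=torch.ones_like(scores),
        create_graph=True,
    )[0]

    # 3. Gradient penalty: per-sample L2 norm, penalised for deviating from 1.
    grad_norm = grads.reshape(batch_size, -1).norm(2, dim=1)
    return lambda_gp * ((grad_norm - 1) ** 2).mean()
```

In a critic update, the penalty is simply added to the Wasserstein loss, for example `loss_d = critic(fake).mean() - critic(real).mean() + gradient_penalty(critic, real, fake)`. Because the norm is taken per sample, batch normalization in the critic would couple samples within a batch. The WGAN-GP paper recommends layer normalization, or no normalization, in the critic instead.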

By following these steps, you can use automatic differentiation to compute the gradient penalty in Wasserstein Generative Adversarial Networks (WGAN-GP).
