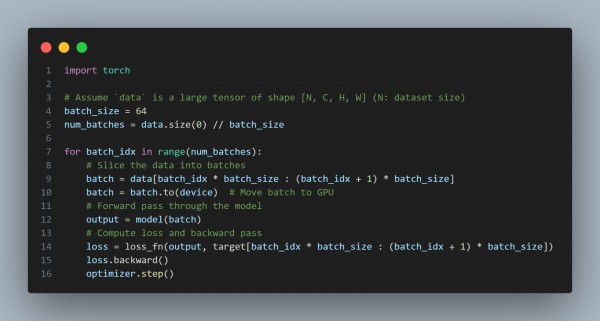

You can use tensor slicing to load and process a large dataset in small, memory-efficient batches instead of all at once. Because only the current slice has to be held in memory, the memory overhead stays low, which can speed up training on datasets that would otherwise not fit in RAM.
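As a minimal sketch of the idea, the snippet below slices a memory-mapped NumPy array batch by batch. NumPy, the file name `dataset.npy`, the helper `iter_slices`, and the sizes are all assumptions chosen for illustration, not part of the original answer. The same `data[start:end]` slicing syntax also works on PyTorch and TensorFlow tensors.

```python
import os
import tempfile

import numpy as np


def iter_slices(data, batch_size):
    """Yield consecutive slices of `data` along the first axis (hypothetical helper)."""
    for start in range(0, len(data), batch_size):
        # Basic slicing returns a view, so nothing is copied at this point.
        yield data[start:start + batch_size]


# Hypothetical stand-in dataset: 1,000 samples with 16 features each.
# It is tiny so the example runs quickly; a real dataset would already be on disk.
num_samples, num_features, batch_size = 1000, 16, 128
path = os.path.join(tempfile.mkdtemp(), "dataset.npy")
np.save(path, np.random.rand(num_samples, num_features).astype(np.float32))

# mmap_mode="r" maps the file instead of reading all of it into RAM.
dataset = np.load(path, mmap_mode="r")

batch_shapes = []
for batch in iter_slices(dataset, batch_size):
    # Copy only the current slice into memory.
    batch_in_memory = np.array(batch)
    batch_shapes.append(batch_in_memory.shape)
    # A training step on `batch_in_memory` would go here.

print(len(batch_shapes), batch_shapes[0], batch_shapes[-1])
```

The last batch is smaller than the rest whenever the dataset size is not a multiple of `batch_size`, so a training loop should either accept a short final batch or drop it.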

Slicing the data this way offers the following benefits:

- Efficiency: Avoids loading the entire dataset into memory.

- Scalability: Handles larger datasets by working on slices.

- Flexibility: Enables dynamic memory usage for large-scale generative AI models.

Hence, you can use tensor slicing to keep memory usage low and speed up training on large datasets for generative AI.
