You can preprocess large datasets for generative AI tasks using Dask, which enables parallel and distributed data processing. Dask's DataFrame and Bag APIs handle large-scale data efficiently by splitting it into partitions and processing those partitions in parallel across multiple cores or machines.
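For unstructured text, the Bag API is often the more natural fit. The following is a minimal sketch, assuming an in-memory list of strings as a stand-in for real files (in practice you would typically start from `db.read_text`). The helpers `clean_text`, `tokenize`, and `preprocess_corpus` are hypothetical names, and the whitespace tokenizer is only a placeholder for a real tokenizer.

```python
import re

import dask.bag as db

# Hypothetical sample corpus. With real files you would typically start from
# db.read_text("data/*.txt"), which yields one line of text per element.
RAW_TEXTS = [
    "  Hello, WORLD!  ",
    "Dask splits work into Partitions.",
    "",
    "Generative models need CLEAN text...",
]


def clean_text(text: str) -> str:
    """Lowercase, replace non-alphanumerics with spaces, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> list:
    """Naive whitespace tokenizer; a placeholder for a real (e.g. subword) tokenizer."""
    return text.split()


def preprocess_corpus(texts, npartitions=2, scheduler="threads"):
    """Clean, filter, and tokenize texts partition by partition."""
    bag = db.from_sequence(texts, npartitions=npartitions)
    pipeline = (
        bag.map(clean_text)              # clean every record
        .filter(lambda t: len(t) > 0)    # drop records that are empty after cleaning
        .map(tokenize)                   # split each record into tokens
    )
    # Nothing has run yet; compute() executes the task graph over all partitions.
    return pipeline.compute(scheduler=scheduler)


if __name__ == "__main__":
    for tokens in preprocess_corpus(RAW_TEXTS):
        print(tokens)
```

The `threads` scheduler keeps the example simple and portable. For CPU-bound, pure-Python cleaning, the `processes` scheduler or a `dask.distributed` cluster usually scales better because threads share the GIL.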

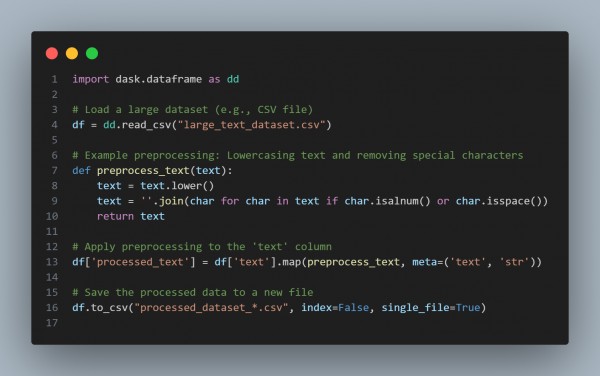

Here is the code snippet which you can refer to:

In the above code, we are using the following:

- Dask DataFrame: works like pandas but processes data in partitions (chunks), so it can handle datasets larger than memory.
- Preprocessing Function: defines custom preprocessing steps (e.g., text cleaning, tokenization, or other transformations).
- Parallel Execution: operations such as `map` or `map_partitions` are applied to each partition in parallel across the dataset.
- Output: the preprocessed data is saved back to disk in a scalable manner.

Hence, this approach is an efficient way to prepare datasets for tasks such as training generative models.
