You can improve a transformer's generative outputs by adding a recurrent layer, such as an LSTM or GRU, that models sequential dependencies token by token.

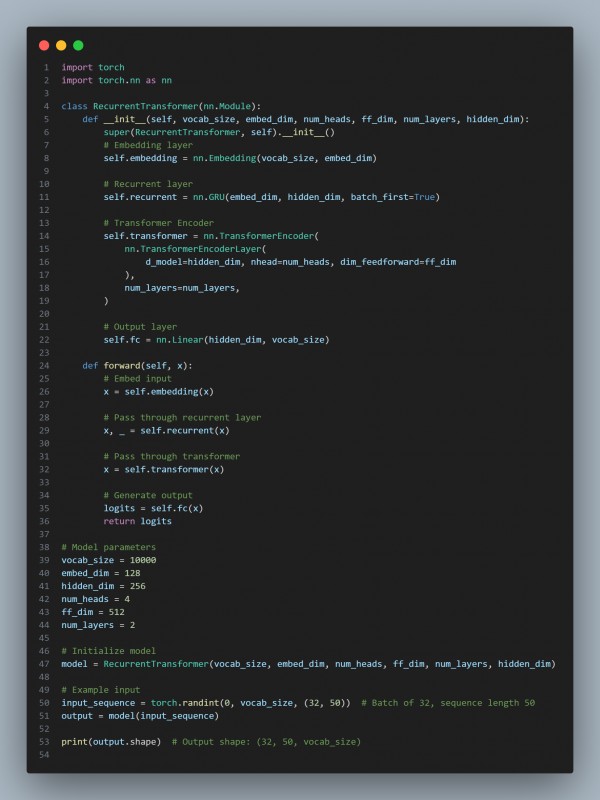

Here is the code snippet you can refer to:
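A minimal PyTorch sketch of this kind of hybrid, assuming a GRU placed in front of a standard `nn.TransformerEncoder`. The class name `RecurrentTransformer`, the hyperparameters, and the causal mask are illustrative assumptions, not details taken from the original snippet:

```python
import torch
import torch.nn as nn


class RecurrentTransformer(nn.Module):
    """Hypothetical hybrid: embedding -> GRU -> transformer encoder -> logits."""

    def __init__(self, vocab_size=100, d_model=64, n_heads=4, n_layers=2):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        # Recurrent layer: reads tokens in order, so its outputs carry
        # sequential context before self-attention is applied.
        self.gru = nn.GRU(d_model, d_model, batch_first=True)
        # Transformer encoder: self-attention over the GRU outputs.
        layer = nn.TransformerEncoderLayer(
            d_model=d_model,
            nhead=n_heads,
            dim_feedforward=4 * d_model,
            batch_first=True,
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers=n_layers)
        # Projects each position to next-token logits.
        self.out = nn.Linear(d_model, vocab_size)

    def forward(self, tokens):
        x = self.embed(tokens)   # (batch, seq, d_model)
        x, _ = self.gru(x)       # (batch, seq, d_model)
        seq_len = tokens.size(1)
        # Assumed causal mask (True = blocked) so that each position only
        # attends to earlier positions, as autoregressive generation needs.
        mask = torch.triu(
            torch.ones(seq_len, seq_len, dtype=torch.bool, device=tokens.device),
            diagonal=1,
        )
        x = self.encoder(x, mask=mask)
        return self.out(x)       # (batch, seq, vocab_size)


model = RecurrentTransformer()
tokens = torch.randint(0, 100, (2, 10))  # dummy batch: 2 sequences of 10 tokens
logits = model(tokens)
print(logits.shape)
```

Swapping `nn.GRU` for `nn.LSTM` works the same way here, since both return the per-step outputs as the first element of their result.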

In the above code, we are using the following:

- Recurrent Layer: Captures sequential context before feeding into the transformer for better dependency modeling.

- Transformer Encoder: Enhances understanding of global contexts using self-attention.

- Hybrid Approach: Combines benefits of recurrence (local dependencies) and transformers (global context) for improved generative outputs.

Hence, placing a recurrent layer ahead of the transformer encoder is one way to improve generative outputs.
