To debias a language model while minimizing the impact on its performance, common techniques include the following:

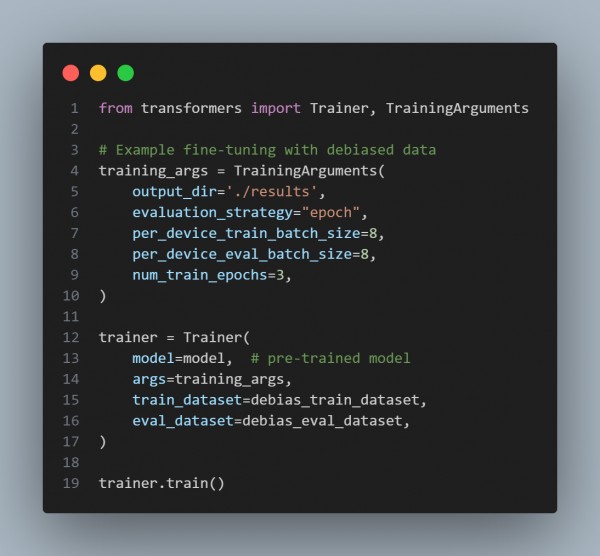

- Bias Mitigation via Fine-Tuning: Fine-tune the model on a debiased dataset, or use adversarial training to reduce bias.


- Counterfactual Data Augmentation: Augment the training data with counterfactual examples (e.g., copies of sentences with gendered terms swapped) so the model sees both variants with the same label.

- Bias Detection and Mitigation Layers: Add components that flag and adjust biased predictions post hoc, after the model has produced them.

- Regularization: Add a bias penalty to the training objective, for example adversarial regularization, to reduce bias during training.
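Counterfactual data augmentation can be sketched in plain Python. This is a minimal sketch, assuming English text and a small illustrative swap list; `GENDER_PAIRS`, `counterfactual`, and `augment` are hypothetical names, not a library API. Each training example is kept, and a gender-swapped copy with the same label is added whenever the text contains a listed word:

```python
import re

# Illustrative swap list (an assumption, not a standard resource).
# Ambiguous words such as "her" (-> "him" or "his") are deliberately left out.
GENDER_PAIRS = [
    ("he", "she"),
    ("man", "woman"),
    ("men", "women"),
    ("boy", "girl"),
    ("father", "mother"),
    ("son", "daughter"),
    ("king", "queen"),
]

# Bidirectional lookup: "he" -> "she" and "she" -> "he".
SWAP = {}
for a, b in GENDER_PAIRS:
    SWAP[a] = b
    SWAP[b] = a

# Match whole alphabetic words so "manager" is not treated as "man".
_WORD = re.compile(r"[A-Za-z]+")


def _swap_word(match):
    word = match.group(0)
    replacement = SWAP.get(word.lower())
    if replacement is None:
        return word
    # Preserve simple initial capitalization ("He" -> "She").
    return replacement.capitalize() if word[0].isupper() else replacement


def counterfactual(text):
    """Return a copy of `text` with every listed gendered word swapped."""
    return _WORD.sub(_swap_word, text)


def augment(dataset):
    """Yield each (text, label) pair, plus its counterfactual with the same label.

    Examples containing no listed words are not duplicated.
    """
    for text, label in dataset:
        yield text, label
        swapped = counterfactual(text)
        if swapped != text:
            yield swapped, label


data = [("He is a doctor.", 1), ("The weather is nice.", 0)]
print(list(augment(data)))
# [('He is a doctor.', 1), ('She is a doctor.', 1), ('The weather is nice.', 0)]
```

Real word lists are much larger and need to handle names, plurals, and ambiguous forms like "her", which this sketch simply skips.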
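One simple form of the post-hoc "flag and adjust" idea is a counterfactual consistency check: score an input and its gender-swapped version, flag the prediction if the two scores differ by more than a tolerance, and return their average instead. This is a sketch under stated assumptions, not a specific published layer; `CounterfactualConsistencyLayer`, `score_fn`, `tolerance`, the tiny swap table, and `toy_score` are all hypothetical. In practice `score_fn` would wrap your model's probability output.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical, minimal swap table for illustration only.
SWAP = {"he": "she", "she": "he", "man": "woman", "woman": "man"}
_WORD = re.compile(r"[A-Za-z]+")


def counterfactual(text: str) -> str:
    """Swap listed gendered words, keeping simple initial capitalization."""
    def swap(m):
        w = m.group(0)
        r = SWAP.get(w.lower())
        if r is None:
            return w
        return r.capitalize() if w[0].isupper() else r
    return _WORD.sub(swap, text)


@dataclass
class DebiasedPrediction:
    score: float   # adjusted score returned to the caller
    flagged: bool  # True if the counterfactual gap exceeded the tolerance
    gap: float     # |score(text) - score(counterfactual(text))|


class CounterfactualConsistencyLayer:
    """Post-hoc wrapper that flags and smooths predictions which change
    when gendered terms are swapped. `score_fn` maps text -> probability."""

    def __init__(self, score_fn: Callable[[str], float], tolerance: float = 0.1):
        self.score_fn = score_fn
        self.tolerance = tolerance

    def __call__(self, text: str) -> DebiasedPrediction:
        original = self.score_fn(text)
        swapped_text = counterfactual(text)
        if swapped_text == text:
            # No gendered words to swap: pass the score through unchanged.
            return DebiasedPrediction(original, False, 0.0)
        swapped = self.score_fn(swapped_text)
        gap = abs(original - swapped)
        if gap > self.tolerance:
            # Adjust: give both variants the same (averaged) score.
            return DebiasedPrediction((original + swapped) / 2, True, gap)
        return DebiasedPrediction(original, False, gap)


def toy_score(text: str) -> float:
    # Deliberately biased toy scorer, for demonstration only.
    words = {w.lower() for w in _WORD.findall(text)}
    return 0.75 if "he" in words else 0.25


layer = CounterfactualConsistencyLayer(toy_score, tolerance=0.1)
print(layer("He is an engineer."))
# DebiasedPrediction(score=0.5, flagged=True, gap=0.5)
```

Averaging is only one possible adjustment; flagged inputs could instead be logged for review or routed to a fallback, depending on the application.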
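Adversarial regularization is commonly written as a min-max objective. In the formulation below (the notation is an assumption, not taken from this answer), $\theta$ are the model parameters, $\phi$ are the parameters of an adversary that tries to predict a protected attribute $z$ (e.g., gender) from the model's internal representation, $\mathcal{L}_{\text{adv}}$ is the adversary's loss, and $\lambda \ge 0$ trades off task performance against bias removal:

```latex
\min_{\theta} \, \max_{\phi} \;\; \mathcal{L}_{\text{task}}(\theta) \;-\; \lambda \, \mathcal{L}_{\text{adv}}(\theta, \phi)
```

Maximizing $-\lambda\,\mathcal{L}_{\text{adv}}$ over $\phi$ means the adversary minimizes its own loss, while the model is rewarded for making $\mathcal{L}_{\text{adv}}$ large, i.e., for representations from which $z$ is hard to recover. This is often implemented with a gradient reversal layer; a larger $\lambda$ typically removes more attribute information at a greater risk to task accuracy.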

Taken together, these methods aim to reduce biased outputs while preserving as much model accuracy as possible.
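To check whether a debiasing method actually preserves accuracy, it helps to measure both accuracy and a bias metric before and after applying it. The sketch below uses the demographic parity gap (the largest difference in positive-prediction rates between groups) on hypothetical toy predictions; the metric choice, function names, and data are assumptions for illustration, and other fairness metrics (e.g., equalized odds) may suit a given task better.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_gap(y_pred: Sequence[int], groups: Sequence[str]) -> float:
    """Largest difference in positive-prediction rate across groups (0 = parity)."""
    rates = {}
    for g in set(groups):
        preds = [p for p, gg in zip(y_pred, groups) if gg == g]
        rates[g] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy labels and predictions for two groups "a" and "b".
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
before = [1, 1, 1, 0, 0, 0, 1, 0]  # hypothetical predictions before debiasing
after = [1, 0, 1, 1, 1, 0, 1, 1]   # hypothetical predictions after debiasing

print(accuracy(y_true, before), demographic_parity_gap(before, groups))  # 0.75 0.5
print(accuracy(y_true, after), demographic_parity_gap(after, groups))    # 0.75 0.0
```

A gap of 0 means every group receives positive predictions at the same rate; comparing both numbers side by side makes any accuracy cost of debiasing explicit.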
