Self-supervised learning (SSL) improves the efficiency of generative AI in unsupervised domains by deriving its training signal from large amounts of unlabeled data, so models learn meaningful representations without manual annotation.

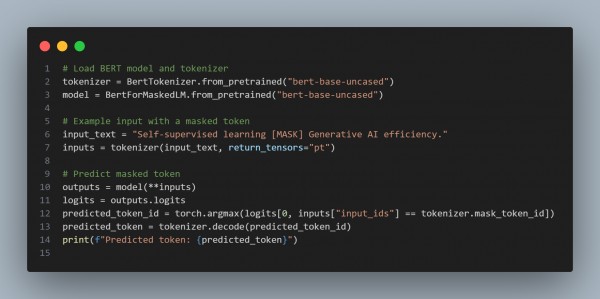

SSL helps in the following ways:

- Label-Free Training: Leverages unlabeled data with proxy tasks (e.g., predicting masked inputs).

- Rich Representations: Learns high-quality features that improve downstream generative tasks.

- Data Efficiency: Maximizes the use of available data, especially in domains with limited supervision.
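To make the "predicting masked inputs" proxy task concrete, here is a minimal sketch of how a training pair can be built from raw, unlabeled text. The function name `make_masked_example`, the `[MASK]` token, and the masking probability are assumptions chosen for illustration and are not taken from any particular library.

```python
import random

MASK = "[MASK]"  # hypothetical mask token, chosen for this sketch


def make_masked_example(tokens, mask_prob=0.15, rng=None):
    """Turn an unlabeled token sequence into an (input, targets) pair.

    `targets` maps each masked position to the original token, so the
    supervision signal comes from the data itself, not from annotators.
    """
    rng = rng or random.Random()
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok
        else:
            masked.append(tok)
    if not targets and tokens:
        # Guarantee at least one prediction target per example.
        i = rng.randrange(len(tokens))
        targets[i] = tokens[i]
        masked[i] = MASK
    return masked, targets


sentence = "self supervised learning needs no manual labels".split()
masked, targets = make_masked_example(sentence, mask_prob=0.3, rng=random.Random(0))
print(masked)   # model input with some tokens hidden
print(targets)  # positions and tokens the model must recover
```

A model trained to fill in `targets` from `masked` never sees a human-written label, which is what makes the approach label-free.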

The key points to note are:

- Proxy Tasks: Tasks like masking or contrastive learning enable SSL to extract meaningful patterns.

- Unsupervised Domains: SSL can pre-train models effectively on unlabeled data, improving efficiency in unsupervised contexts.

- Generalization: Rich representations learned through SSL enhance performance on various generative tasks.
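The contrastive proxy task mentioned above can be sketched with an InfoNCE-style loss, written here in plain Python for readability. This is an illustrative assumption about how such a loss might look, not a definitive implementation: the names `cosine` and `info_nce`, the toy 2-D embeddings, and the temperature value are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss over a batch.

    anchors[i] should be most similar to positives[i]; every other
    positives[j] in the batch serves as a negative for anchor i.
    """
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        top = max(logits)  # subtract the max for numerical stability
        log_sum = top + math.log(sum(math.exp(x - top) for x in logits))
        losses.append(log_sum - logits[i])  # cross-entropy with target i
    return sum(losses) / len(losses)


# Toy embeddings of two augmented "views" of the same three samples.
view_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
view_b = [[0.9, 0.1], [0.1, 0.9], [-0.9, -0.1]]

aligned = info_nce(view_a, view_b)
shuffled = info_nce(view_a, view_b[1:] + view_b[:1])  # pairs deliberately mismatched
print(aligned, shuffled)
```

The loss is low when each sample's two views sit close together and high when the pairs are mismatched, so minimizing it pushes the encoder toward representations that capture what the views share.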

Hence, by employing SSL, Generative AI systems become more data-efficient and better suited for unsupervised domains, enhancing their overall capability.
