You can speed up inference for generative tasks with Hugging Face Accelerate by combining mixed precision, model parallelism, and deliberate device placement. The key steps are:

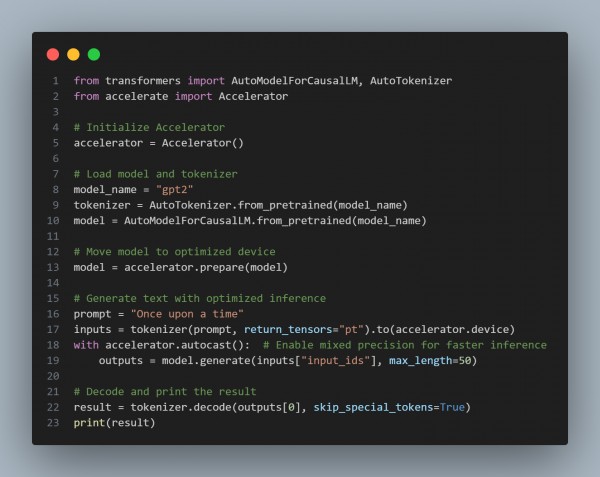

Here is the code for the above steps:

The code relies on the following key points:

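- accelerator.prepare: Moves the model to the device Accelerate selects and wraps it for the current launch configuration (single device or distributed). It does not by itself split one model across several devices.

- accelerator.autocast: A context manager that runs the enclosed computation in the mixed-precision mode configured on the Accelerator, which is typically faster on hardware with native half-precision support.

- Hardware portability: the same script runs on CPU, GPU, or TPU through Hugging Face Accelerate without device-specific code.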
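For the model-parallel case, where a model is too large for one device, a common route is the `device_map="auto"` option of `from_pretrained`, which uses Accelerate to place layers across the available devices. The sketch below is one possible way to do this; the checkpoint `gpt2` and the helpers `pick_dtype` and `load_sharded` are hypothetical names chosen for illustration.

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer


def pick_dtype() -> torch.dtype:
    """Use half precision only when a CUDA device is available."""
    return torch.float16 if torch.cuda.is_available() else torch.float32


def load_sharded(model_name: str = "gpt2"):
    """Load a causal LM with its layers placed across the available devices."""
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        device_map="auto",        # requires the accelerate package
        torch_dtype=pick_dtype(),
    )
    model.eval()
    return tokenizer, model


def generate_sharded(prompt: str, max_new_tokens: int = 40) -> str:
    tokenizer, model = load_sharded()
    # Inputs go to the device that holds the first layers of the model.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            pad_token_id=tokenizer.eos_token_id,
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A model loaded this way is already placed on its devices, so it should not also be passed through `accelerator.prepare`.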

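Hence, this setup can noticeably improve inference speed on hardware with native half-precision support while keeping the script portable across devices. The actual gain depends on the model and the hardware.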
