You can adapt Hugging Face's T5 model for abstractive summarization by fine-tuning it with summarization-specific data or directly using it for inference with appropriate prompts.

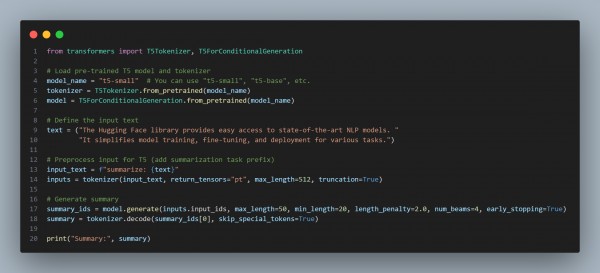

Here is the code snippet you can refer to:

In the above code, we are using the following:

- Task Prefix: Prepend the prefix "summarize: " to the input text so that T5 knows which task to perform.

- Preprocessing: Tokenize the input text and truncate it to the model's maximum input length.

- Inference: Call the generate() method with beam search or another decoding strategy to produce the summary.
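The three steps above can be sketched roughly as follows. This is a minimal illustration rather than the exact code from the original snippet: the helper names `build_input` and `summarize`, the `t5-small` checkpoint, the 512-token input limit, and the beam-search settings are all assumptions chosen for the example, and it expects the `transformers` library with a PyTorch backend to be installed.

```python
TASK_PREFIX = "summarize: "


def build_input(text: str) -> str:
    """Collapse runs of whitespace and prepend the T5 summarization prefix."""
    return TASK_PREFIX + " ".join(text.split())


def summarize(
    text: str,
    model_name: str = "t5-small",   # assumed checkpoint; larger ones also work
    max_input_tokens: int = 512,    # assumed input limit for truncation
    max_summary_tokens: int = 60,   # assumed cap on summary length
) -> str:
    """Summarize `text` with a pretrained T5 checkpoint (no fine-tuning)."""
    # Imported here so build_input() stays usable without transformers installed.
    from transformers import AutoTokenizer, T5ForConditionalGeneration

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = T5ForConditionalGeneration.from_pretrained(model_name)

    # Preprocessing: tokenize the prefixed text and truncate it if too long.
    inputs = tokenizer(
        build_input(text),
        return_tensors="pt",
        max_length=max_input_tokens,
        truncation=True,
    )

    # Inference: beam search with 4 beams; other decoding strategies
    # (for example sampling) can be selected through generate() arguments.
    summary_ids = model.generate(
        inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        num_beams=4,
        max_length=max_summary_tokens,
        early_stopping=True,
    )

    # Convert the generated token ids back into plain text.
    return tokenizer.decode(summary_ids[0], skip_special_tokens=True)
```

Calling `summarize(article_text)` with any long string returns the generated summary as a string. The first call downloads the checkpoint, so it needs network access.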

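Hence, this approach uses a pretrained T5 checkpoint for abstractive summarization as is, without additional fine-tuning. If the summaries are not good enough for your domain, fine-tune the model on summarization-specific data.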
