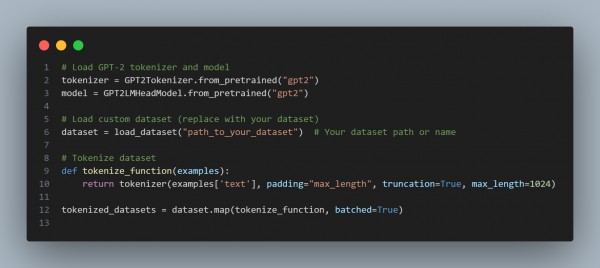

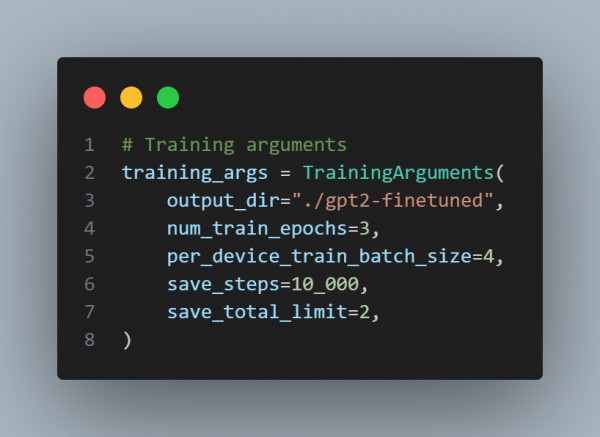

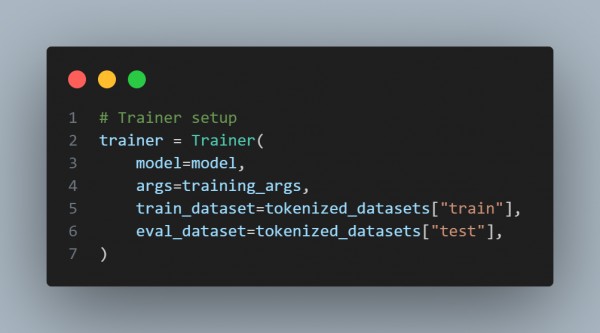

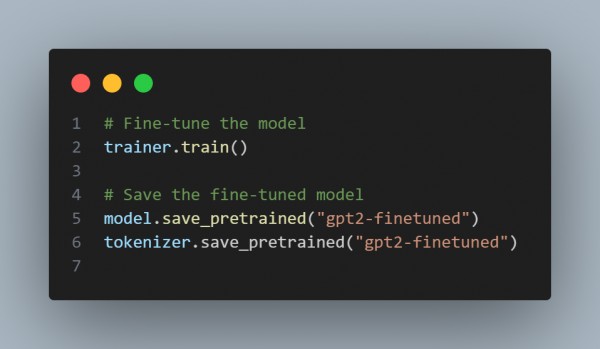

You can fine-tune a GPT-2 model using a custom dataset for long text generation by referring to the following:

The above code has three parts:

- Dataset: loads and tokenizes the custom dataset used for long text generation.
- Training: uses the Hugging Face `Trainer` to manage the fine-tuning process.
- Model and Tokenizer: load GPT-2 and its tokenizer, fine-tune the model on the custom dataset, and save the results.
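Since the code in the screenshots is not reproduced as text, here is a minimal sketch of the same three parts, assuming your data is a plain-text file (the path `train.txt`, the output folder `gpt2-finetuned`, the helper names `group_texts` and `fine_tune`, and all hyperparameters are hypothetical choices). It packs the tokenized text into fixed-size blocks, the approach used in Hugging Face's causal language modeling examples, so long documents are not cut off at each line:

```python
from itertools import chain


def group_texts(examples, block_size=512):
    """Pack tokenized texts into contiguous blocks of block_size tokens.

    Concatenating before splitting keeps long documents intact across
    block boundaries instead of truncating each line or document.
    Trailing tokens that do not fill a whole block are dropped.
    """
    concatenated = {k: list(chain.from_iterable(examples[k])) for k in examples.keys()}
    total_length = (len(concatenated["input_ids"]) // block_size) * block_size
    result = {
        k: [v[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, v in concatenated.items()
    }
    # Causal LM: labels equal the inputs; the model shifts them internally.
    result["labels"] = [list(ids) for ids in result["input_ids"]]
    return result


def fine_tune(train_file, output_dir="gpt2-finetuned", block_size=512, epochs=3):
    """Fine-tune GPT-2 on a plain-text file (hypothetical helper)."""
    # Heavy dependencies are imported here so group_texts stays dependency-free.
    from datasets import load_dataset
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        Trainer,
        TrainingArguments,
        default_data_collator,
    )

    # Model and Tokenizer: start from the pretrained GPT-2 checkpoint.
    tokenizer = AutoTokenizer.from_pretrained("gpt2")
    model = AutoModelForCausalLM.from_pretrained("gpt2")
    # GPT-2 has 1024 position embeddings, so a block cannot be longer than that.
    if block_size > model.config.n_positions:
        raise ValueError("block_size exceeds GPT-2's context window")

    # Dataset: the "text" loader yields one example per line in a "text" column.
    raw = load_dataset("text", data_files={"train": train_file})
    tokenized = raw["train"].map(
        lambda batch: tokenizer(batch["text"]),
        batched=True,
        remove_columns=["text"],
    )
    lm_dataset = tokenized.map(lambda batch: group_texts(batch, block_size), batched=True)

    # Training: Trainer handles batching, optimization, logging and checkpoints.
    args = TrainingArguments(
        output_dir=output_dir,
        num_train_epochs=epochs,
        per_device_train_batch_size=2,
        logging_steps=50,
        save_steps=500,
    )
    trainer = Trainer(
        model=model,
        args=args,
        train_dataset=lm_dataset,
        # All blocks have equal length, so plain stacking is enough (no padding).
        data_collator=default_data_collator,
    )
    trainer.train()

    # Save the fine-tuned weights and tokenizer together for later generation.
    trainer.save_model(output_dir)
    tokenizer.save_pretrained(output_dir)
```

You would call it as `fine_tune("train.txt")`. Packing with `default_data_collator` avoids padding entirely, which matters because GPT-2 ships without a pad token.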

Hence, this approach fine-tunes GPT-2 so that it generates long texts relevant to your custom dataset.
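To actually produce long text from the saved model, a sketch like the following could be used, assuming the model was saved to the hypothetical `gpt2-finetuned` folder. The helper names `clamp_new_tokens` and `generate_long_text` and the sampling values are illustrative assumptions, not settings from the original code. The clamp is there because GPT-2 cannot attend past 1024 positions, so the prompt plus the generated tokens must fit in that window:

```python
def clamp_new_tokens(prompt_len, requested, context_size=1024):
    """Limit generation so prompt + output fits in GPT-2's position embeddings."""
    room = context_size - prompt_len
    if room <= 0:
        raise ValueError("prompt already fills the model's context window")
    return min(requested, room)


def generate_long_text(prompt, model_dir="gpt2-finetuned", max_new_tokens=800):
    """Sample a long continuation from the fine-tuned model (hypothetical helper)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModelForCausalLM.from_pretrained(model_dir)

    inputs = tokenizer(prompt, return_tensors="pt")
    new_tokens = clamp_new_tokens(
        inputs["input_ids"].shape[1], max_new_tokens, model.config.n_positions
    )
    output_ids = model.generate(
        **inputs,
        max_new_tokens=new_tokens,
        do_sample=True,          # sample instead of greedy decoding
        top_p=0.92,              # nucleus sampling
        temperature=0.8,
        no_repeat_ngram_size=3,  # discourages repetition loops in long outputs
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `print(generate_long_text("Once upon a time"))`. Output longer than the 1024-token window would need a different strategy, such as repeatedly feeding back only the most recent part of the text as the next prompt.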
