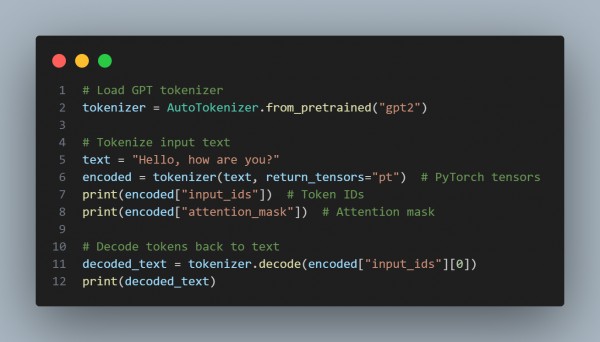

To implement tokenization with Hugging Face's AutoTokenizer for a GPT model, you can refer to the code snippet shown above:

The code above consists of three steps:

- **Tokenizer initialization:** `AutoTokenizer.from_pretrained` loads the tokenizer for a GPT model (e.g., `gpt2`).
- **Tokenization:** calling the tokenizer on the input text converts it into token IDs and, optionally, an attention mask.
- **Decoding:** the tokenizer's `decode` method converts the token IDs back into human-readable text.
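For readers who cannot view the image, here is a minimal sketch of the same three steps. It assumes the `transformers` library is installed and that the `gpt2` checkpoint can be downloaded from the Hugging Face Hub; the example sentences are illustrative. The batched part at the end goes beyond the original snippet: it shows where the attention mask matters, and it reuses GPT-2's end-of-text token for padding because GPT-2 defines no padding token of its own.

```python
# Requires: pip install transformers
from transformers import AutoTokenizer

# 1. Tokenizer initialization: downloads (or loads from cache) the GPT-2 tokenizer files.
tokenizer = AutoTokenizer.from_pretrained("gpt2")

# 2. Tokenization: calling the tokenizer returns a dict-like BatchEncoding
#    with "input_ids" and "attention_mask" (plain Python lists here).
text = "Hello, how are you?"
encoded = tokenizer(text)
input_ids = encoded["input_ids"]
attention_mask = encoded["attention_mask"]
print("Token IDs:", input_ids)
print("Tokens:", tokenizer.convert_ids_to_tokens(input_ids))
print("Attention mask:", attention_mask)

# 3. Decoding: map the token IDs back to a human-readable string.
decoded = tokenizer.decode(input_ids, skip_special_tokens=True)
print("Decoded:", decoded)

# Batched input (illustrative addition): GPT-2 has no padding token,
# so reuse the end-of-text token. Padded positions get 0 in the attention mask.
tokenizer.pad_token = tokenizer.eos_token
batch = tokenizer(["Short text.", "A somewhat longer piece of text."], padding=True)
for ids, mask in zip(batch["input_ids"], batch["attention_mask"]):
    print(ids, mask)
```

If you plan to feed the IDs directly into a PyTorch model, passing `return_tensors="pt"` to the tokenizer call returns tensors instead of Python lists.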

By following these steps, you can tokenize text for a GPT model with Hugging Face's AutoTokenizer and decode the resulting token IDs back into text.

