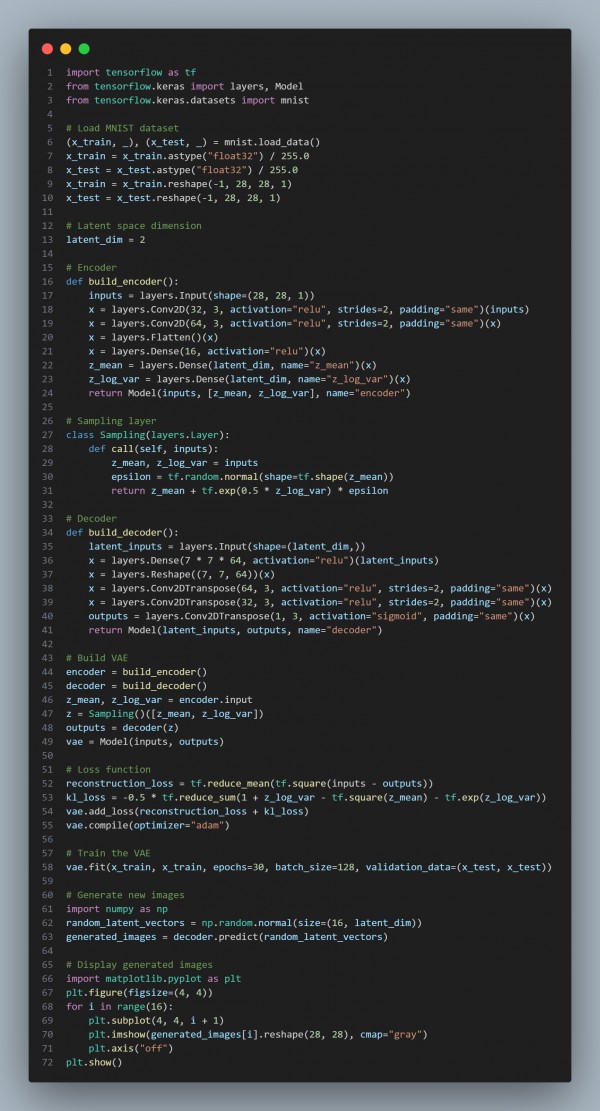

The code snippet above shows a concise implementation of a Variational Autoencoder (VAE) that generates images from the MNIST dataset using TensorFlow/Keras.

The code above is built from the following components:

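- Encoder: Maps each input image to the parameters of a latent distribution (z_mean, z_log_var).

- Sampling Layer: Samples latent vectors using the reparameterization trick, z = z_mean + exp(0.5 * z_log_var) * epsilon, so gradients can flow through the sampling step.

- Decoder: Reconstructs images from latent vectors.

- VAE Loss: Adds the reconstruction loss to the KL divergence between the encoder's distribution and a standard normal prior.

- Training: Trains on MNIST, then generates new images by decoding latent vectors sampled from the prior.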
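Because the snippet is only available as an image, here is a minimal sketch of how the components listed above could fit together in TensorFlow/Keras. It is not the original code: the dense layer sizes, `LATENT_DIM = 2`, the Adam optimizer, the batch size, and the helper names (`build_encoder`, `build_decoder`, `vae_loss`, `train_and_generate`) are all assumptions chosen for illustration.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

LATENT_DIM = 2  # assumed latent size (not taken from the original snippet)


class Sampling(layers.Layer):
    """Reparameterization trick: z = z_mean + exp(0.5 * z_log_var) * eps."""

    def call(self, inputs):
        z_mean, z_log_var = inputs
        eps = tf.random.normal(shape=tf.shape(z_mean))
        return z_mean + tf.exp(0.5 * z_log_var) * eps


def build_encoder(latent_dim=LATENT_DIM):
    # Assumed architecture: one hidden dense layer of 256 units.
    inputs = keras.Input(shape=(28, 28, 1))
    x = layers.Flatten()(inputs)
    x = layers.Dense(256, activation="relu")(x)
    z_mean = layers.Dense(latent_dim, name="z_mean")(x)
    z_log_var = layers.Dense(latent_dim, name="z_log_var")(x)
    z = Sampling()([z_mean, z_log_var])
    return keras.Model(inputs, [z_mean, z_log_var, z], name="encoder")


def build_decoder(latent_dim=LATENT_DIM):
    latent_inputs = keras.Input(shape=(latent_dim,))
    x = layers.Dense(256, activation="relu")(latent_inputs)
    x = layers.Dense(28 * 28, activation="sigmoid")(x)
    outputs = layers.Reshape((28, 28, 1))(x)
    return keras.Model(latent_inputs, outputs, name="decoder")


def vae_loss(x, x_recon, z_mean, z_log_var):
    """Batch mean of (pixel-summed binary cross-entropy + KL to N(0, I))."""
    eps = 1e-7
    x_recon = tf.clip_by_value(x_recon, eps, 1.0 - eps)  # avoid log(0)
    bce = -(x * tf.math.log(x_recon) + (1.0 - x) * tf.math.log(1.0 - x_recon))
    recon = tf.reduce_sum(bce, axis=[1, 2, 3])
    kl = -0.5 * tf.reduce_sum(
        1.0 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var), axis=1
    )
    return tf.reduce_mean(recon + kl)


class VAE(keras.Model):
    def __init__(self, encoder, decoder, **kwargs):
        super().__init__(**kwargs)
        self.encoder = encoder
        self.decoder = decoder
        self.loss_tracker = keras.metrics.Mean(name="loss")

    @property
    def metrics(self):
        return [self.loss_tracker]

    def train_step(self, data):
        if isinstance(data, (tuple, list)):  # fit() may wrap the images in a tuple
            data = data[0]
        with tf.GradientTape() as tape:
            z_mean, z_log_var, z = self.encoder(data, training=True)
            x_recon = self.decoder(z, training=True)
            loss = vae_loss(data, x_recon, z_mean, z_log_var)
        grads = tape.gradient(loss, self.trainable_weights)
        self.optimizer.apply_gradients(zip(grads, self.trainable_weights))
        self.loss_tracker.update_state(loss)
        return {"loss": self.loss_tracker.result()}


def train_and_generate(epochs=10, n_samples=16):
    """Train on MNIST, then decode random latent vectors into new digits."""
    (x_train, _), _ = keras.datasets.mnist.load_data()
    x_train = np.expand_dims(x_train, -1).astype("float32") / 255.0
    vae = VAE(build_encoder(), build_decoder())
    vae.compile(optimizer=keras.optimizers.Adam())
    vae.fit(x_train, epochs=epochs, batch_size=128)
    z = np.random.normal(size=(n_samples, LATENT_DIM)).astype("float32")
    return vae.decoder.predict(z, verbose=0)  # shape: (n_samples, 28, 28, 1)
```

Calling `train_and_generate()` downloads MNIST, trains the model, and returns an array of generated digits that can be displayed with, for example, `matplotlib.pyplot.imshow`. A two-dimensional latent space keeps the sketch small and easy to visualize; a larger `LATENT_DIM` or convolutional layers would likely give sharper samples.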

With these components in place, you can write a Variational Autoencoder that generates images from the MNIST dataset.

