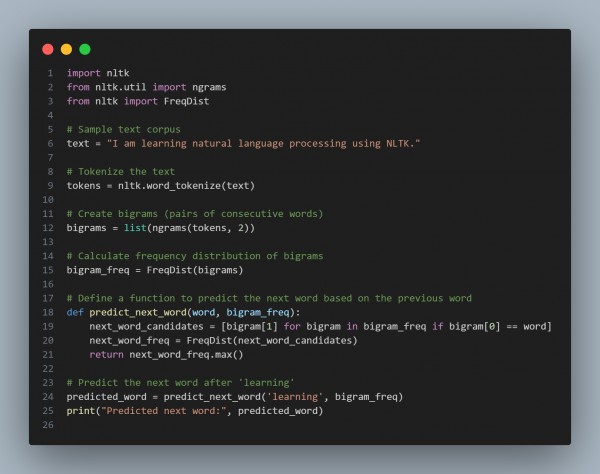

To predict the most probable next word with NLTK, you can use an N-gram model. A bigram model estimates the probability of the next word from the single previous word, and a trigram model from the previous two words, using counts collected from a training corpus. Here is the code snippet showing how:

The code snippet above uses the following steps:

- Tokenize the Text: Break the text into tokens using word_tokenize.

- Create N-grams: Generate bigrams or trigrams using ngrams.

- Frequency Distribution: Count how often each N-gram occurs. Dividing the count of a word pair by the count of its first word gives an estimate of the probability that the second word follows the first.

- Prediction: Given a word (e.g., 'learning'), predict the next word by checking which word most frequently follows it in the corpus.
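The four steps above can be sketched as a minimal bigram example. Several things here are assumptions made for illustration: the toy corpus is invented, `predict_next` is a hypothetical helper name, and `wordpunct_tokenize` stands in for `word_tokenize` so the sketch runs without downloading NLTK's tokenizer data (swap `word_tokenize` back in if you have those resources installed).

```python
from nltk import ConditionalFreqDist
from nltk.tokenize import wordpunct_tokenize
from nltk.util import ngrams

# Toy corpus, invented purely for illustration.
corpus = (
    "machine learning is fun. "
    "machine learning is powerful. "
    "deep learning is a subset of machine learning."
)

# 1. Tokenize. wordpunct_tokenize is regex-based and needs no extra data;
#    word_tokenize can be used instead once its resources are downloaded.
tokens = wordpunct_tokenize(corpus.lower())

# 2. Create bigrams: (previous_word, next_word) pairs.
bigrams = list(ngrams(tokens, 2))

# 3. Frequency distribution of next words, conditioned on the previous word.
cfd = ConditionalFreqDist(bigrams)


def predict_next(word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    word = word.lower()
    if word not in cfd:
        return None
    return cfd[word].max()


# 4. Prediction: most frequent follower and its relative frequency.
next_word = predict_next("learning")
print(next_word)
if next_word is not None:
    print(cfd["learning"].freq(next_word))
```

For a trigram model, the same idea applies with `ngrams(tokens, 3)` and the first two words of each trigram used as the condition, for example `ConditionalFreqDist(((w1, w2), w3) for w1, w2, w3 in ngrams(tokens, 3))`.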

Hence, this simple N-gram model can predict the most probable next word from counts in its training corpus, but it only sees the previous one or two words. More advanced models such as LSTMs or Transformer-based models use longer context and generally predict more accurately.
