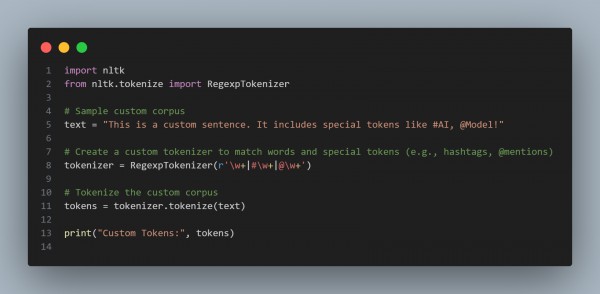

To create a custom tokenizer for a specific corpus with NLTK, you can instantiate or subclass `nltk.tokenize.RegexpTokenizer`, or write your own tokenizer that applies rules tailored to your text data. Here is the code snippet you can refer to:
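A minimal sketch of this approach, assuming NLTK 3.x is installed. The regular expression, the sample corpus, and the names `TOKEN_PATTERN` and `tokenized_corpus` are illustrative assumptions.

```python
import re
from nltk.tokenize import RegexpTokenizer

# Alternatives are tried left to right, so more specific patterns come first.
# Non-capturing groups (?:...) are used because capturing groups would make
# re.findall (which RegexpTokenizer relies on) return only the group contents.
TOKEN_PATTERN = r"""
    https?://\S+          # URLs
  | [@#]\w+               # @mentions and #hashtags
  | \w+(?:[-']\w+)*       # words, incl. hyphenated words and contractions
  | [^\w\s]               # any other single non-space symbol (punctuation)
"""

# re.VERBOSE lets the pattern carry the comments above
tokenizer = RegexpTokenizer(TOKEN_PATTERN, flags=re.VERBOSE | re.UNICODE)

corpus = [
    "Loving the new #NLP course by @edureka! Details: https://example.com",
    "It's a state-of-the-art pipeline.",
]

# Apply the same rules to every document in the corpus
tokenized_corpus = [tokenizer.tokenize(doc) for doc in corpus]
for tokens in tokenized_corpus:
    print(tokens)
```

Because `https?://\S+` is greedy, a URL followed directly by punctuation (such as a trailing period) will absorb that punctuation; tighten the URL rule if your corpus contains such cases.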

In the above code, we are using the following approaches:

- Define a Regular Expression: Use regular expressions to specify how tokens should be identified (e.g., words, hashtags, @mentions).

- Tokenize Custom Corpus: Apply the custom tokenizer to every document in your corpus so that each one is split according to your tokenization rules.

- Handle Special Cases: You can adjust the regular expression to handle specific requirements for your corpus (e.g., splitting on punctuation, recognizing domain-specific terms).
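To handle domain-specific terms that the general rules would split apart (for example `C++` or `New York`), one option is to subclass `RegexpTokenizer` and place those terms at the front of the pattern. This is a sketch assuming NLTK 3.x; `DomainTokenizer` and its `domain_terms` parameter are hypothetical names, not part of NLTK.

```python
import re
from nltk.tokenize import RegexpTokenizer


class DomainTokenizer(RegexpTokenizer):
    """Hypothetical RegexpTokenizer subclass that keeps domain-specific
    terms (which may contain spaces or symbols) as single tokens."""

    # General rules: URLs, @mentions/#hashtags, words, single punctuation marks
    BASE_PATTERN = r"https?://\S+|[@#]\w+|\w+(?:[-']\w+)*|[^\w\s]"

    def __init__(self, domain_terms=()):
        # Escape each term so characters like '+' or '.' match literally,
        # and try longer terms first so "C++11" is preferred over "C++".
        terms = sorted(domain_terms, key=len, reverse=True)
        escaped = [re.escape(term) for term in terms]
        pattern = "|".join(escaped + [self.BASE_PATTERN])
        super().__init__(pattern, flags=re.UNICODE)


tokenizer = DomainTokenizer(domain_terms=["C++", "C++11", "New York", "U.S."])
corpus = [
    "I learned C++11 in New York.",
    "The U.S. team uses C++ daily!",
]

# tokenize_sents applies tokenize() to each string in the list
result = tokenizer.tokenize_sents(corpus)
print(result)
```

Terms are matched literally and without boundary checks, so `New York` would also match the start of `New Yorker`; add boundary conditions around the escaped terms if that matters for your corpus.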

Hence, this approach helps you design tokenizers that fit the structure of specialized text data, improving your text processing pipeline for downstream NLP tasks such as text generation.
