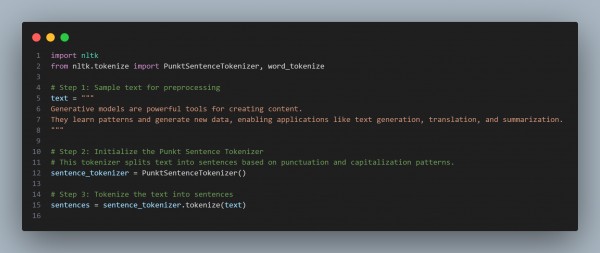

To preprocess data for text generation with NLTK's Punkt tokenizer, you split the text into sentences and then into words, which gives you clean, consistent units for training generative models. The code snippet above shows one way to do this.

The code above follows these steps:

- Tokenize Sentences: Use PunktSentenceTokenizer to break the text into sentences.

- Tokenize Words: Apply word_tokenize to split each sentence into words.

- Text Preprocessing: Together, these two steps turn raw text into structured sentence-level or word-level input, which is what you need for further analysis or for training generative models.
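The steps listed here can be sketched in a few lines. This is a minimal illustration rather than the exact snippet from the image: the sample `text` and the variable names are assumptions, and `PunktSentenceTokenizer()` is used untrained, so it has no learned abbreviation list and may split after abbreviations such as "Mr.". Passing `preserve_line=True` to `word_tokenize` tells it to treat each input as a single sentence, since the text has already been split, so no pretrained Punkt model should need to be loaded here.

```python
from nltk.tokenize import word_tokenize
from nltk.tokenize.punkt import PunktSentenceTokenizer

# Sample input (an assumption for illustration; replace with your own corpus)
text = (
    "Text generation needs clean input. "
    "Punkt splits text into sentences. "
    "Each sentence is then split into words."
)

# Step 1: sentence tokenization with an untrained Punkt tokenizer
sentence_tokenizer = PunktSentenceTokenizer()
sentences = sentence_tokenizer.tokenize(text)

# Step 2: word tokenization of each sentence
# preserve_line=True skips a second round of sentence splitting
tokenized_sentences = [
    word_tokenize(sentence, preserve_line=True) for sentence in sentences
]

# Step 3: inspect the structured output
for sentence, tokens in zip(sentences, tokenized_sentences):
    print(sentence)
    print(tokens)
```

For real corpora, a pretrained Punkt model (as used by `nltk.sent_tokenize`) or a tokenizer trained on your own text will usually handle abbreviations better than the untrained one in this sketch.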

This tokenization approach gives you structured data (tokenized sentences or words) that you can feed into a text generation model, such as a Recurrent Neural Network (RNN) or a Transformer-based model.
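Models such as RNNs and Transformers work on numbers rather than strings, so tokenized sentences are usually mapped to integer IDs before training. The sketch below shows one common way to do that with only the standard library. The `<pad>` and `<unk>` entries, the `encode` helper, and the sample tokens are all assumptions made for illustration, not part of NLTK.

```python
from collections import Counter

# Tokenized sentences, e.g. the output of the word tokenization step
# (hard-coded here so the example is self-contained)
tokenized_sentences = [
    ["Punkt", "splits", "text", "."],
    ["Models", "read", "text", "."],
]

# Count how often each token occurs across all sentences
counts = Counter(tok for sent in tokenized_sentences for tok in sent)

# Build the vocabulary: 0 is reserved for padding, 1 for unknown tokens
vocab = {"<pad>": 0, "<unk>": 1}
for token, _ in counts.most_common():
    vocab[token] = len(vocab)


def encode(tokens, vocab):
    """Map tokens to integer IDs, using the <unk> ID for unseen tokens."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]


# Integer sequences ready to be batched and padded for a model
encoded = [encode(sent, vocab) for sent in tokenized_sentences]
print(vocab)
print(encoded)
```

Most Transformer-based models ship with their own subword tokenizer and vocabulary, so a hand-built mapping like this mainly applies when you train a small model from scratch.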
