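Cross-attention mechanisms improve multi-modal generative AI tasks, such as text-to-image generation, by aligning information between modalities: features from one modality (for example, image regions) act as queries that attend over features from another (for example, text tokens).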
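As a rough illustration of how this alignment works, here is a minimal pure-Python sketch of scaled dot-product cross-attention. It assumes image features are the queries and text token embeddings supply the keys and values. The projection matrices `w_q`, `w_k`, `w_v` and all function names are hypothetical; real models learn these weights and run on a tensor library.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]

def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(image_feats: Matrix, text_feats: Matrix,
                    w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Image positions (queries) attend over text tokens (keys/values)."""
    q = matmul(image_feats, w_q)  # (num_patches, d_k)
    k = matmul(text_feats, w_k)   # (num_tokens, d_k)
    v = matmul(text_feats, w_v)   # (num_tokens, d_v)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # (num_patches, num_tokens)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)         # text context for each image patch

# Hypothetical toy inputs: 3 image patches and 2 text tokens, 2-d features.
identity = [[1.0, 0.0], [0.0, 1.0]]
image = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
text = [[2.0, 0.0], [0.0, 2.0]]
out = cross_attention(image, text, identity, identity, identity)
```

Each output row is a weighted mix of the text values, so every image patch receives the text context most similar to it; the patch `[0.5, 0.5]` is equally similar to both tokens and therefore receives an even blend.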

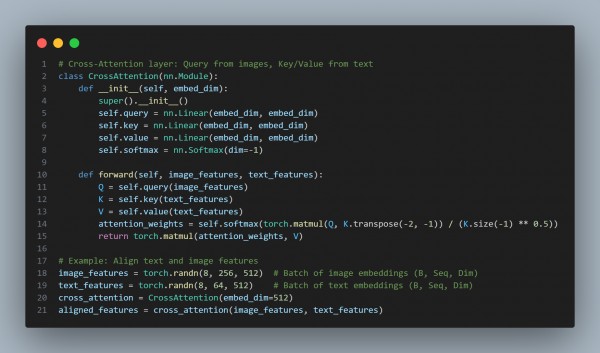

Here is the code snippet you can refer to:

In the above code, cross-attention influences three aspects of generation: text-image alignment, which ensures generated images accurately represent their text descriptions; improved coherence, which lets the model focus on key words while generating the corresponding visual elements; and multi-modal fusion, which bridges the gap between modalities and enhances semantic understanding.
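To make the alignment and keyword-focus ideas concrete, the sketch below inspects attention weights directly: for each image region, it reports which text token receives the most weight. The token embeddings, region names, and the `attention_map` helper are hypothetical values chosen for readability, not output from a trained model.

```python
import math
from typing import Dict, List

def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_map(region_queries: Dict[str, List[float]],
                  token_keys: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """For each image region, return its attention weight on every text token."""
    tokens = list(token_keys)
    d_k = len(next(iter(token_keys.values())))
    result = {}
    for region, q in region_queries.items():
        # Scaled dot-product score between this region's query and each token key.
        scores = [sum(a * b for a, b in zip(q, token_keys[t])) / math.sqrt(d_k)
                  for t in tokens]
        result[region] = dict(zip(tokens, softmax(scores)))
    return result

# Hypothetical 3-d embeddings for the prompt "a red cat", hand-picked for clarity.
token_keys = {"a": [0.1, 0.1, 0.1], "red": [0.0, 3.0, 0.0], "cat": [3.0, 0.0, 0.0]}
region_queries = {"cat_body": [2.0, 0.2, 0.0], "cat_fur_color": [0.2, 2.0, 0.0]}

weights = attention_map(region_queries, token_keys)
for region, per_token in weights.items():
    focus = max(per_token, key=per_token.get)
    print(region, "->", focus, round(per_token[focus], 3))
```

With these hand-picked vectors, the `cat_body` region attends mostly to "cat" and `cat_fur_color` mostly to "red", while the filler token "a" gets little weight. In a trained model, maps like this are what let each part of the image track the words that describe it.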

This is how cross-attention mechanisms influence performance in multi-modal generative AI tasks like text-to-image generation.
