You can use SHAP or LIME to attribute a generative model's output to individual input tokens, which makes AI-generated content more transparent and easier to trust.

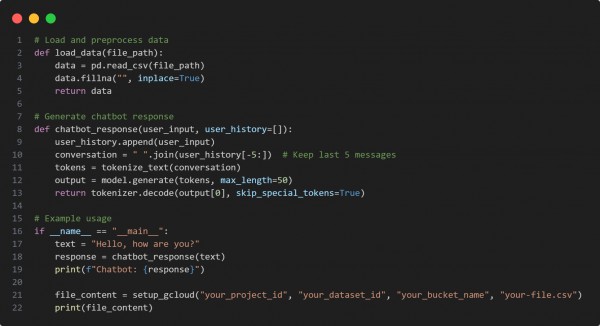

Here is the code snippet you can refer to:

The code above relies on the following key points:

- SHAP Explainability Integration – Uses SHAP to analyze token contributions in text generation.

- Token-Level Contribution Analysis – Identifies which words influence AI-generated content most.

- GPT-2 Model Interpretation – Provides transparency into how language models form responses.

- Visualization of SHAP Values – Displays token importance to help users understand AI decisions.

- Improved Trust in AI Systems – Makes model behavior easier to inspect, which can help surface potential bias.
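To make the idea of token-level contributions concrete, here is a minimal, self-contained sketch of the Shapley computation that SHAP is built on. It is not the snippet referred to above and it does not load GPT-2: `toy_score` is a hypothetical stand-in for a model score (for example, the log-probability of a generated continuation), and its weights are made up for illustration. The values are computed exactly by enumerating every token ordering, which is only feasible for very short inputs.

```python
from itertools import permutations
from math import factorial


def toy_score(tokens):
    """Hypothetical stand-in for a model score; the weights are illustrative only."""
    weights = {"not": -2.0, "good": 3.0, "movie": 0.5}
    score = sum(weights.get(t, 0.0) for t in tokens)
    # Toy interaction: "not" together with "good" lowers the score further.
    if "not" in tokens and "good" in tokens:
        score -= 4.0
    return score


def shapley_values(tokens, score_fn):
    """Exact Shapley value per token: its average marginal contribution
    to the score over all orders in which tokens can be revealed."""
    n = len(tokens)
    values = [0.0] * n
    for order in permutations(range(n)):
        present = set()
        prev = score_fn([])
        for i in order:
            present.add(i)
            # Keep the original word order for the tokens revealed so far.
            subset = [tokens[j] for j in sorted(present)]
            cur = score_fn(subset)
            values[i] += cur - prev
            prev = cur
    return [v / factorial(n) for v in values]


tokens = ["not", "a", "good", "movie"]
phi = shapley_values(tokens, toy_score)

# Plain-text view of token importance: sign plus a bar scaled by magnitude.
for tok, v in zip(tokens, phi):
    bar = "#" * round(abs(v) * 2)
    print(f"{tok:>6} {v:+.2f} {bar}")
```

The contributions sum to the difference between the score of the full input and the score of the empty input, which is the property that makes the values interpretable as a breakdown of the model's output. With a real language model, exhaustive enumeration is too expensive, so the `shap` library approximates these values instead, and `shap.plots.text` can render them over the input text.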

Hence, incorporating SHAP or LIME in generative AI models enhances trust by offering interpretable insights into content generation decisions.
