You can use TensorFlow's tf.keras.preprocessing.text.Tokenizer to tokenize text for generative AI by fitting the tokenizer on a corpus and then converting the text into sequences of integer token IDs.

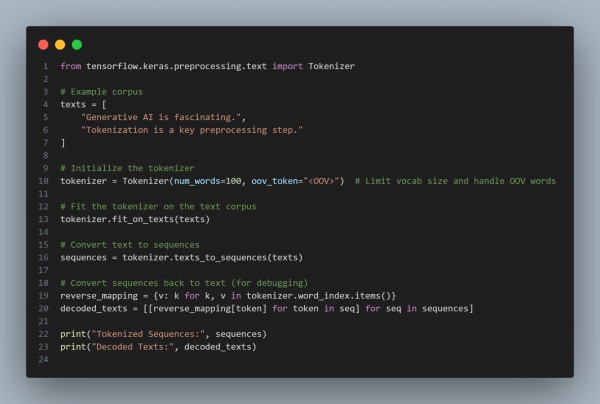

Here is the code snippet you can refer to:
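A minimal sketch of such a snippet is given below. The toy corpus, the `num_words=1000` limit, and the `"<OOV>"` string are illustrative assumptions, not values from the original answer:

```python
import tensorflow as tf

# Hypothetical toy corpus; replace it with your own training text.
corpus = [
    "generative models learn to predict the next word",
    "the model predicts the next token",
]

# Tokenizer initialization: cap the vocabulary size and reserve an
# out-of-vocabulary (OOV) token for words not seen during fitting.
tokenizer = tf.keras.preprocessing.text.Tokenizer(
    num_words=1000,      # assumed limit, tune for your corpus
    oov_token="<OOV>",   # assumed placeholder string
)

# Fit on text: learn the word-to-index mapping from the corpus.
tokenizer.fit_on_texts(corpus)

# Text-to-sequences: convert each sentence into a list of integer IDs.
sequences = tokenizer.texts_to_sequences(corpus)

# Words that were not in the corpus are mapped to the OOV index.
unseen = tokenizer.texts_to_sequences(["the model writes poetry"])

print(tokenizer.word_index)
print(sequences)
print(unseen)
```

Index 0 is reserved for padding, so the OOV token receives index 1 and the corpus words are numbered from 2 upward in order of decreasing frequency.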

In the above code, we are using the following key approaches:

- Tokenizer Initialization: Configure the vocabulary size (num_words) and the out-of-vocabulary (OOV) token (oov_token).

- Fit on Text: Learn word-to-index mappings from the corpus.

- Text-to-Sequences: Convert text to numerical tokens for model input.
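To make the "model input" step concrete, here is one common way (an assumption, not part of the original answer) to turn a token sequence into next-word training pairs for a generative model: take every prefix of the sentence, left-pad the prefixes to a common length with `pad_sequences`, and use the last token of each prefix as the prediction target. The single-sentence corpus is hypothetical.

```python
import tensorflow as tf

# Hypothetical one-sentence corpus, used only for illustration.
corpus = ["the model predicts the next token"]

tokenizer = tf.keras.preprocessing.text.Tokenizer(oov_token="<OOV>")
tokenizer.fit_on_texts(corpus)
token_ids = tokenizer.texts_to_sequences(corpus)[0]

# Every prefix with at least two tokens becomes one training example.
prefixes = [token_ids[: i + 1] for i in range(1, len(token_ids))]

# Left-pad with zeros so all prefixes have the same length.
padded = tf.keras.preprocessing.sequence.pad_sequences(
    prefixes, maxlen=len(token_ids), padding="pre"
)

# The model sees everything except the last token and predicts that token.
inputs, targets = padded[:, :-1], padded[:, -1]

print(inputs.shape, targets.shape)
```

Padding on the left keeps the most recent context next to the target position, which is a common convention in this kind of setup.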

Hence, by referring to the above, you can use TensorFlow's tf.keras.preprocessing.text.Tokenizer to tokenize text for generative AI.
