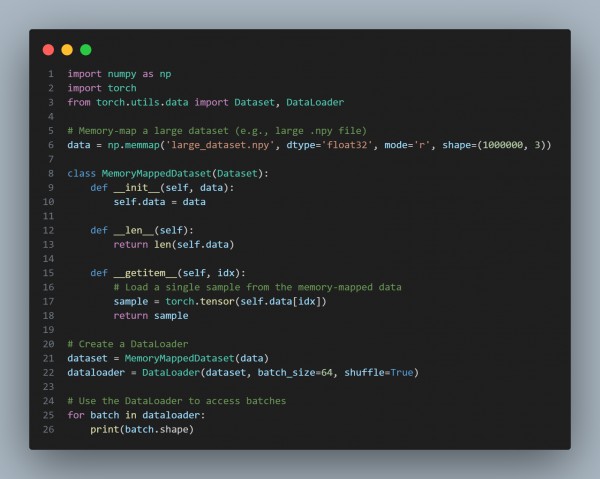

You can use memory-mapped files to load and handle large datasets efficiently in PyTorch by combining NumPy's np.memmap with a custom Dataset class and a DataLoader.


This approach relies on three pieces. First, memory mapping: the np.memmap function maps the file into memory, so the data is not fully loaded into RAM but can still be accessed efficiently without excessive memory usage. Second, a custom MemoryMappedDataset class that inherits from torch.utils.data.Dataset, which lets it work seamlessly with a DataLoader. Third, the DataLoader itself, which loads batches of data during training without reading the entire dataset into memory at once.
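The three pieces described above can be sketched roughly as follows. This is a minimal illustration rather than a definitive implementation. It assumes the data is a raw float32 binary file with a known shape. The file name features.dat, the sizes, and the lazy-opening helper _array are hypothetical choices made for the demo.

```python
import os
import tempfile

import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset


class MemoryMappedDataset(Dataset):
    """Serves rows of a raw binary file through np.memmap."""

    def __init__(self, path, num_samples, num_features, dtype=np.float32):
        self.path = path
        self.shape = (num_samples, num_features)
        self.dtype = dtype
        self._data = None  # opened lazily (assumption: helps when workers are used)

    def _array(self):
        # Map the file read-only; pages are read from disk only when touched.
        if self._data is None:
            self._data = np.memmap(
                self.path, dtype=self.dtype, mode="r", shape=self.shape
            )
        return self._data

    def __len__(self):
        return self.shape[0]

    def __getitem__(self, idx):
        # Copy just the requested row out of the mapping into a regular array,
        # then wrap it as a tensor.
        row = np.array(self._array()[idx])
        return torch.from_numpy(row)


# Build a small demo file (hypothetical name and sizes, for illustration only).
path = os.path.join(tempfile.mkdtemp(), "features.dat")
num_samples, num_features = 1000, 16
writer = np.memmap(
    path, dtype=np.float32, mode="w+", shape=(num_samples, num_features)
)
writer[:] = np.arange(num_samples * num_features, dtype=np.float32).reshape(
    num_samples, num_features
)
writer.flush()
del writer  # release the writable mapping

dataset = MemoryMappedDataset(path, num_samples, num_features)
loader = DataLoader(dataset, batch_size=32, shuffle=False)

first_batch = next(iter(loader))
print(first_batch.shape)
```

Because the mapping is opened in read-only mode and each __getitem__ call copies a single row, only the rows that make up the current batch are held in RAM as tensors. For a real dataset, the shape and dtype must match how the file was written, since a raw memory-mapped file stores no metadata of its own.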

Hence, the above strategies can be used to efficiently load and handle large datasets in PyTorch using memory-mapped files.
