You can use the following techniques to make gradient accumulation practical and train large models on GPUs with limited memory.

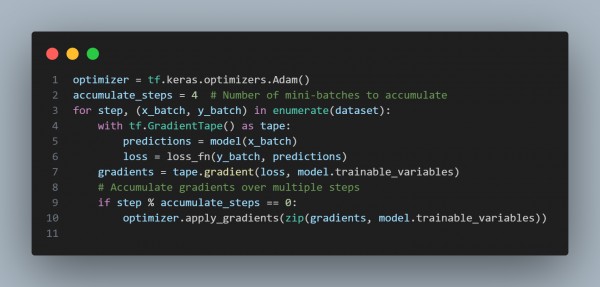

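- Manual Gradient Accumulation: Instead of updating the weights after every mini-batch, you accumulate the gradients of several small mini-batches and update the weights once. With N mini-batches of size B, this effectively simulates a batch of N × B samples while peak memory stays at the level of a single mini-batch of size B.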

- The code below shows how to implement manual gradient accumulation.

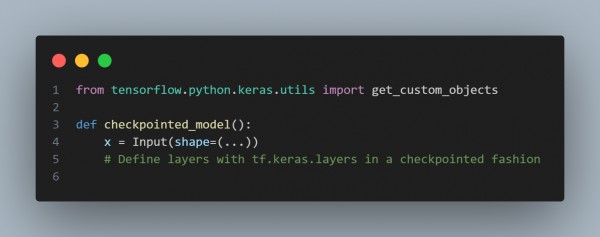
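A minimal sketch of the same idea, assuming PyTorch; `train_with_accumulation` and the toy data are illustrative, not the code in the screenshot. Each mini-batch loss is divided by `accum_steps` so that the summed gradients equal the average over the larger effective batch, and `optimizer.step()` runs only once every `accum_steps` mini-batches.

```python
import copy

import torch
import torch.nn as nn


def train_with_accumulation(model, loader, optimizer, loss_fn, accum_steps):
    """One pass over `loader`, updating weights once every `accum_steps` mini-batches.

    Assumes the number of mini-batches is a multiple of `accum_steps`;
    gradients from a trailing partial group are left unapplied.
    """
    model.train()
    optimizer.zero_grad()
    updates = 0
    for step, (inputs, targets) in enumerate(loader, start=1):
        loss = loss_fn(model(inputs), targets)
        # Scale down so the summed gradients equal the mean over the effective batch
        (loss / accum_steps).backward()  # backward() adds into each parameter's .grad
        if step % accum_steps == 0:
            optimizer.step()       # one weight update per accum_steps mini-batches
            optimizer.zero_grad()  # start the next accumulation window from zero
            updates += 1
    return updates


if __name__ == "__main__":
    torch.manual_seed(0)
    x, y = torch.randn(8, 4), torch.randn(8, 1)
    small = nn.Linear(4, 1)
    big = copy.deepcopy(small)  # identical starting weights

    # 4 micro-batches of 2 samples, accumulated into a single update
    micro = list(zip(x.chunk(4), y.chunk(4)))
    train_with_accumulation(small, micro, torch.optim.SGD(small.parameters(), lr=0.1),
                            nn.MSELoss(), accum_steps=4)

    # 1 full batch of 8 samples, one update
    train_with_accumulation(big, [(x, y)], torch.optim.SGD(big.parameters(), lr=0.1),
                            nn.MSELoss(), accum_steps=1)

    print(torch.allclose(small.weight, big.weight, atol=1e-6))  # True
```

The demo compares four micro-batches of 2 samples with one batch of 8 and ends with the same weights. The equivalence holds for mean-reduced losses and equal-sized micro-batches; layers that depend on batch statistics, such as `BatchNorm`, still only see the small batch.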

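- Gradient Checkpointing: Instead of keeping every intermediate activation from the forward pass, you store only a subset of them (the checkpoints) and recompute the rest during backpropagation. This trades extra computation, roughly one additional forward pass, for lower activation memory.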

- The code below shows how to apply gradient checkpointing.

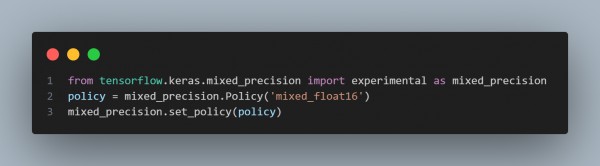
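A sketch of per-block checkpointing, assuming PyTorch's `torch.utils.checkpoint.checkpoint`; the `CheckpointedMLP` model is hypothetical. Passing `use_reentrant=False` selects the non-reentrant implementation, which still produces parameter gradients when the checkpointed input does not itself require grad.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


class CheckpointedMLP(nn.Module):
    """Toy stack of Linear+ReLU blocks; each block is checkpointed during training."""

    def __init__(self, dim=64, depth=4, use_checkpoint=True):
        super().__init__()
        self.blocks = nn.ModuleList(
            [nn.Sequential(nn.Linear(dim, dim), nn.ReLU()) for _ in range(depth)]
        )
        self.use_checkpoint = use_checkpoint

    def forward(self, x):
        for block in self.blocks:
            if self.use_checkpoint and self.training:
                # Only the block's input is kept; its internal activations are
                # recomputed when backward() reaches this block
                x = checkpoint(block, x, use_reentrant=False)
            else:
                x = block(x)
        return x


if __name__ == "__main__":
    torch.manual_seed(0)
    model = CheckpointedMLP(dim=32, depth=4)
    x = torch.randn(16, 32)
    model(x).sum().backward()  # same gradients as without checkpointing
    print(model.blocks[0][0].weight.grad.shape)  # torch.Size([32, 32])
```

Gradients are identical with and without checkpointing; only the memory/compute trade-off changes. For models already written as `nn.Sequential`, `torch.utils.checkpoint.checkpoint_sequential` splits the model into checkpointed segments in a similar way.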

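- Mixed Precision Training: Running most operations in a lower-precision data type (e.g., float16 or bfloat16 instead of float32) reduces memory usage and speeds up computation on GPUs that support it. With float16, the loss is usually scaled so that small gradients do not underflow to zero.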

- The code below shows how to use mixed precision training.
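A minimal sketch of one training step with automatic mixed precision, assuming PyTorch's `torch.autocast` and `GradScaler`; `train_step_amp` is a hypothetical helper. On CUDA it uses float16 with loss scaling; on CPU it falls back to bfloat16, which has the same exponent range as float32 and does not need loss scaling, so the scaler is disabled there.

```python
import torch
import torch.nn as nn


def train_step_amp(model, inputs, targets, optimizer, scaler, loss_fn, device_type):
    """One optimizer step with automatic mixed precision; parameters stay float32."""
    optimizer.zero_grad()
    # float16 on CUDA; CPU autocast supports bfloat16
    amp_dtype = torch.float16 if device_type == "cuda" else torch.bfloat16
    with torch.autocast(device_type=device_type, dtype=amp_dtype):
        outputs = model(inputs)  # eligible ops (e.g., Linear) run in amp_dtype
    # Compute the loss in float32 for numerical stability
    loss = loss_fn(outputs.float(), targets)
    # Multiply the loss by a scale factor so small float16 gradients don't underflow
    scaler.scale(loss).backward()
    scaler.step(optimizer)  # unscales gradients and skips the step if they contain inf/NaN
    scaler.update()         # grows or shrinks the scale factor for the next step
    return loss.item()


if __name__ == "__main__":
    device_type = "cuda" if torch.cuda.is_available() else "cpu"
    model = nn.Linear(16, 1).to(device_type)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    # Loss scaling is only needed for float16, so it is disabled on CPU (bfloat16)
    scaler = torch.cuda.amp.GradScaler(enabled=(device_type == "cuda"))
    inputs = torch.randn(8, 16, device=device_type)
    targets = torch.randn(8, 1, device=device_type)
    print(train_step_amp(model, inputs, targets, optimizer, scaler, nn.MSELoss(), device_type))
```

Newer PyTorch releases also expose the scaler as `torch.amp.GradScaler("cuda")`; `torch.cuda.amp.GradScaler` still works there but may emit a deprecation warning. When combining this with gradient accumulation, call `scaler.step()` and `scaler.update()` only on accumulation boundaries.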

By combining manual gradient accumulation, gradient checkpointing, and mixed precision training, you can train large models on GPUs with limited memory.

