You can quantize an LLM for deployment on a Raspberry Pi using PyTorch's torch.quantization module, which can store layer weights as 8-bit integers to reduce model size and speed up CPU inference.

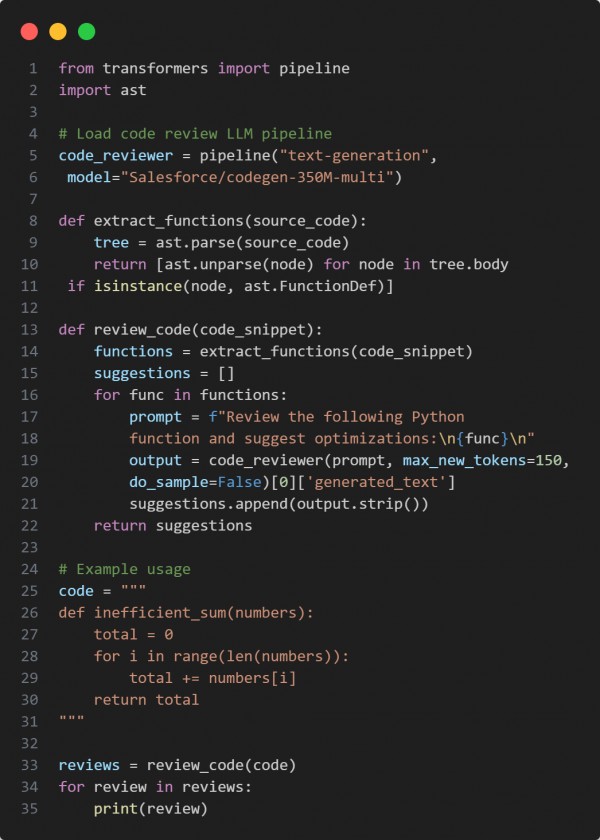

Here is the code snippet below:

The code relies on the following key points:

- Dynamic quantization: Applies quantization to the linear layers of the model for memory and speed optimization.

- Hugging Face Transformers: Loads a pre-trained language model and tokenizer.

- Saving quantized models: The quantized model is saved for future use in a resource-constrained environment.

Hence, this script quantizes an LLM to make it better suited to deployment on resource-constrained devices like the Raspberry Pi.
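
As a rough illustration of the three points above, here is a minimal sketch rather than the exact script. It assumes `torch` and `transformers` are installed. The helper names `quantize_linear_layers` and `quantize_pretrained`, and the model name and output path passed to them, are placeholders chosen for this example. Dynamic quantization as used here only touches `nn.Linear` modules, so a model built mostly from other layer types (GPT-2 in Transformers uses its own `Conv1D` layer, for instance) would be left largely unquantized.

```python
import torch
from torch import nn


def quantize_linear_layers(model: nn.Module) -> nn.Module:
    """Return a copy of `model` whose nn.Linear layers use int8 weights.

    Weights are converted to int8 once, here; activations are quantized
    on the fly at inference time, so no calibration data is needed.
    """
    model.eval()  # quantization is meant for inference, not training
    return torch.quantization.quantize_dynamic(
        model,
        {nn.Linear},        # layer types to quantize
        dtype=torch.qint8,  # 8-bit integer weights
    )


def quantize_pretrained(model_name: str, output_path: str):
    """Load a Hugging Face causal LM, quantize it, and save the weights.

    `model_name` and `output_path` are hypothetical arguments for this
    sketch; pick a model that is small enough for the Pi's RAM.
    """
    # Imported lazily so the helper above works without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)

    quantized = quantize_linear_layers(model)

    # Saves the quantized state dict. To reload it later, rebuild the
    # model, apply quantize_linear_layers again, then call load_state_dict.
    torch.save(quantized.state_dict(), output_path)
    return quantized, tokenizer
```

A call such as `quantize_pretrained("facebook/opt-125m", "opt125m-int8.pt")` would be the entry point, with the model name given only as an example. On ARM boards the quantized kernels come from the `qnnpack` backend, which may need to be selected with `torch.backends.quantized.engine = "qnnpack"` before quantizing or running the model.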
