You can use LoRA (Low-Rank Adaptation) to fine-tune a large model efficiently: the pretrained weights stay frozen and only small, low-rank matrices added to selected layers are trained, which reduces computational and memory overhead.

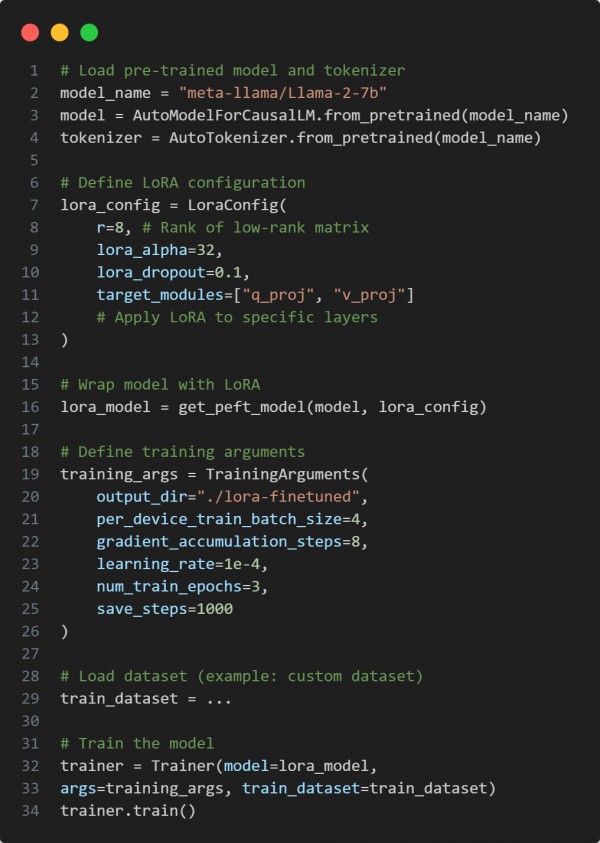

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses LoRA to train only a small number of parameters, making fine-tuning efficient

- Reduces GPU memory usage, since gradients and optimizer state are needed only for the small low-rank matrices

- Applies LoRA only to specific layers (the query and value projections)

- Keeps the base model's weights frozen, which helps preserve its generalization ability
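To make the parameter saving in the points above concrete, here is a small back-of-the-envelope sketch. For a weight matrix of shape `d_out x d_in`, full fine-tuning updates `d_out * d_in` values, while a rank-`r` LoRA update trains two matrices of shapes `d_out x r` and `r x d_in`. The layer size (4096) and rank (8) below are illustrative assumptions, not figures from the original answer.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Number of values updated when the whole weight matrix is trained."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, r: int) -> int:
    """Number of trainable values in a rank-r LoRA update (B: d_out x r, A: r x d_in)."""
    return r * (d_out + d_in)


# Illustrative sizes: one 4096 x 4096 projection adapted with rank 8.
d_out, d_in, rank = 4096, 4096, 8

full = full_update_params(d_out, d_in)
lora = lora_update_params(d_out, d_in, rank)
ratio = lora / full

print(f"full fine-tuning: {full:,} parameters")
print(f"LoRA (r={rank}):   {lora:,} parameters")
print(f"LoRA trains {ratio:.2%} of the matrix")
```

With these assumed sizes, the LoRA update trains well under one percent of the values in that matrix, which is where the memory saving comes from.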

Hence, LoRA enables efficient fine-tuning of large models on limited resources by training only a small set of added low-rank parameters while the base model's weights stay frozen.
