To prevent output collapse in a VAE (Variational Autoencoder), where the decoder ignores the latent code and produces near-identical outputs, when generating data with varying characteristics, you can use the following key strategies:

- KL Divergence Weighting: Start with a small weight on the KL divergence term and increase it gradually during training (KL annealing), so the decoder learns to use the latent code before the regularization reaches full strength.

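- Beta-VAE: Use the Beta-VAE modification, which scales the KL divergence by a factor β, to control the trade-off between reconstruction loss and the KL term. A β above 1 pushes the model toward more disentangled representations, but a β that is too large can itself drive collapse, so tune it against reconstruction quality.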

- Data Augmentation: Enhance the diversity of the dataset by using techniques like random cropping, flipping, or color jittering to encourage varied output.

- Latent Space Regularization: Keep the Gaussian prior on the latent code and, where the plain KL term is not enough, add a regularizer (e.g., the InfoVAE objective) to keep the latent space diverse and informative.
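The KL weighting strategy can be sketched as a simple warm-up schedule. This is a minimal illustration, not taken from the original answer: the function name `kl_weight`, the linear ramp, and the default of 10,000 warm-up steps are all assumptions chosen for the example.

```python
def kl_weight(step: int, warmup_steps: int = 10_000, beta_max: float = 1.0) -> float:
    """Hypothetical linear KL warm-up schedule.

    Returns 0.0 at step 0, rises linearly, and stays at beta_max
    once step reaches warmup_steps.
    """
    if warmup_steps <= 0:
        # No warm-up requested: use the full weight from the start.
        return beta_max
    return beta_max * min(1.0, step / warmup_steps)


# Example: weight applied to the KL term at a few training steps.
for step in (0, 2_500, 5_000, 10_000, 20_000):
    print(step, kl_weight(step))
```

Setting `beta_max` above 1 gives a Beta-VAE style target weight that is still reached gradually rather than applied from the first step.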

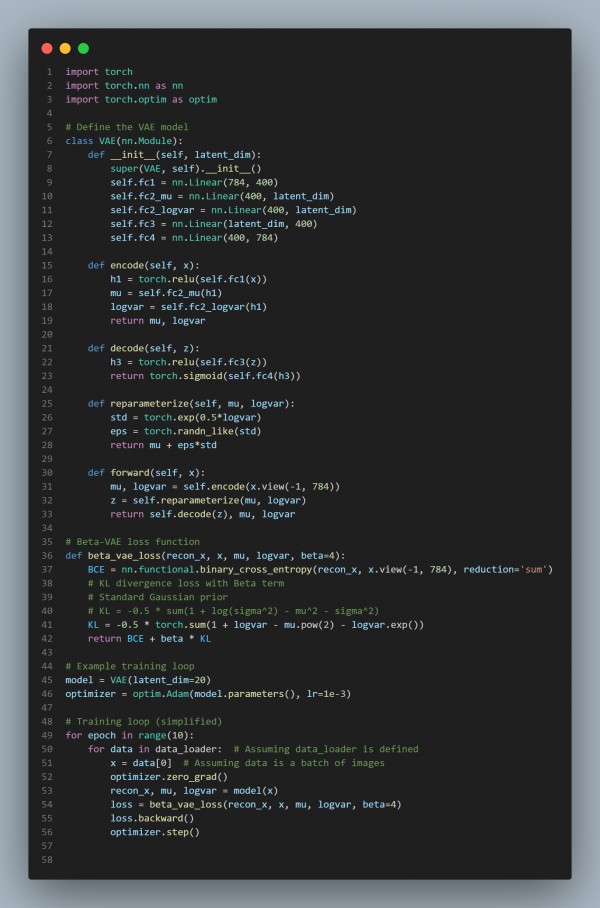

Here is the code snippet you can refer to:
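A minimal PyTorch sketch of the loss and augmentation pieces, assuming image batches in NCHW layout and an encoder that outputs `mu` and `logvar` for a diagonal Gaussian posterior. The names `vae_loss` and `random_hflip`, and the choice of a squared-error reconstruction term, are illustrative assumptions rather than a definitive implementation.

```python
import torch
import torch.nn.functional as F


def vae_loss(recon_x, x, mu, logvar, beta=1.0):
    """Beta-weighted VAE loss (hypothetical helper).

    Returns (total, reconstruction, kl), each averaged over the batch.
    """
    batch = x.size(0)
    # Reconstruction term: summed squared error per batch, divided by batch size.
    recon = F.mse_loss(recon_x, x, reduction="sum") / batch
    # Closed-form KL(q(z|x) || N(0, I)) for a diagonal Gaussian posterior.
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp()) / batch
    # beta scales the KL term: beta = 1 is the standard VAE objective.
    return recon + beta * kl, recon, kl


def random_hflip(x, p=0.5):
    """Flip each image in an NCHW batch left-right with probability p."""
    flip = torch.rand(x.size(0), device=x.device) < p
    out = x.clone()
    out[flip] = torch.flip(out[flip], dims=[-1])
    return out
```

In a training loop, `beta` can be held fixed or ramped up from a small value over the first epochs. Logging the returned `kl` value separately is a cheap way to notice collapse, since a KL term that falls to nearly zero suggests the decoder is ignoring the latent code.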

The code above relies on the following key points:

- KL Divergence Weighting: Adjusts the balance between reconstruction and latent space regularization.

- Beta-VAE: Forces the model to learn more disentangled latent representations by controlling the KL term.

- Latent Space Regularization: Encourages diversity in the latent space.

- Data Augmentation: Increases the diversity of input data, leading to varied output generation.
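The latent space regularization point can be illustrated with a maximum mean discrepancy (MMD) penalty, which is one divergence that InfoVAE-style objectives use to match encoded latents to the prior. This is a rough sketch under stated assumptions: the function names, the RBF kernel, and the fixed bandwidth `sigma` are choices made for the example, and the estimator shown is the simple biased one.

```python
import torch


def rbf_kernel(a, b, sigma=1.0):
    """RBF kernel matrix between the rows of a and the rows of b."""
    sq_dist = torch.cdist(a, b).pow(2)
    return torch.exp(-sq_dist / (2 * sigma ** 2))


def mmd_penalty(z, prior_z, sigma=1.0):
    """Biased estimate of squared MMD between encoded latents and prior samples."""
    k_zz = rbf_kernel(z, z, sigma).mean()
    k_pp = rbf_kernel(prior_z, prior_z, sigma).mean()
    k_zp = rbf_kernel(z, prior_z, sigma).mean()
    return k_zz + k_pp - 2 * k_zp


# Usage: compare a batch of encoded latents with samples from N(0, I).
z = torch.randn(64, 8) * 0.1          # stand-in for encoder outputs
prior_z = torch.randn(64, 8)          # samples from the Gaussian prior
penalty = mmd_penalty(z, prior_z)
```

The penalty would be added to the training loss with its own weight, which has to be tuned alongside the KL weight.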

Hence, by combining these techniques, you can reduce the risk of output collapse when using a VAE model to generate data with varying characteristics.
