Length truncation in long-form text generation can be addressed with techniques such as sliding-window attention, chunked input processing, raising the generation length limit up to the model's context window, and using models designed for long sequences (such as Longformer, or its encoder-decoder variant LED).
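Of these techniques, chunked input processing is the easiest to show in isolation. The sketch below assumes the input has already been tokenized into integer IDs; `sliding_window_chunks` and its `window`/`stride` parameters are hypothetical names chosen for illustration. Consecutive chunks overlap so each one keeps some context from the previous one. Note that this is chunking at the input level, not sliding-window attention, which happens inside the model's attention layers.

```python
from typing import List, Sequence


def sliding_window_chunks(tokens: Sequence[int], window: int, stride: int) -> List[List[int]]:
    """Split a token sequence into overlapping chunks of at most `window` tokens.

    Hypothetical helper for illustration. Consecutive chunks start `stride`
    tokens apart, so they share `window - stride` tokens of context. Because
    stride <= window, every token lands in at least one chunk.
    """
    if window <= 0 or stride <= 0 or stride > window:
        raise ValueError("require 0 < stride <= window")
    if len(tokens) <= window:
        # Short inputs fit in a single chunk.
        return [list(tokens)]
    chunks: List[List[int]] = []
    start = 0
    while True:
        end = start + window
        chunks.append(list(tokens[start:end]))
        if end >= len(tokens):
            # This chunk reached the end of the sequence; stop here.
            break
        start += stride
    return chunks


print(sliding_window_chunks(list(range(10)), window=4, stride=3))
```

Each chunk fits within a fixed context limit and can be processed separately. The overlap size is a trade-off: more overlap preserves more context across chunk boundaries but costs extra compute.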

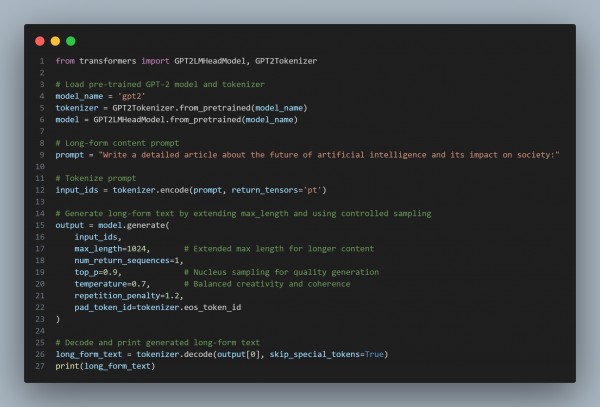

Here is the code snippet you can refer to:

The code above relies on the following key points:

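- Raises the `max_length` parameter (or `max_new_tokens`, which excludes the prompt) so generation is not cut off early; this limit is still bounded by the model's context window.

- Uses nucleus sampling (`top_p`) together with `temperature` to balance diversity against coherence.

- Applies `repetition_penalty` to discourage redundant or circular content.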
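To make these parameters concrete, here is a minimal pure-Python sketch of what `temperature`, `top_p`, and `repetition_penalty` do at a single decoding step. It is not the original snippet or any library's implementation; all function names are hypothetical, and the default values are illustrative only. The repetition penalty follows the CTRL-style rule (divide positive logits, multiply negative ones), which is also the rule Hugging Face `transformers` applies.

```python
import math
import random
from typing import Dict, Iterable, List, Optional


def apply_repetition_penalty(logits: List[float], previous_ids: Iterable[int],
                             penalty: float) -> List[float]:
    """Lower the logits of tokens that were already generated (penalty > 1)."""
    out = list(logits)
    for i in set(previous_ids):
        # Dividing a positive logit or multiplying a negative one both reduce it.
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Temperature < 1 sharpens the distribution; temperature > 1 flattens it."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: List[float], top_p: float) -> Dict[int, float]:
    """Nucleus filtering: keep the smallest set of most likely tokens whose
    cumulative probability reaches top_p, then renormalize them."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: List[int] = []
    cumulative = 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def sample_next_token(logits: List[float], previous_ids: Iterable[int],
                      temperature: float = 0.8, top_p: float = 0.9,
                      repetition_penalty: float = 1.2,
                      rng: Optional[random.Random] = None) -> int:
    """One hypothetical decoding step: penalty, temperature softmax, top-p, sample.

    Default values are illustrative, not recommendations.
    """
    rng = rng or random.Random()
    penalized = apply_repetition_penalty(logits, previous_ids, repetition_penalty)
    probs = softmax_with_temperature(penalized, temperature)
    candidates = top_p_filter(probs, top_p)
    ids = list(candidates)
    return rng.choices(ids, weights=[candidates[i] for i in ids], k=1)[0]
```

In a full generation loop, this step would run once per new token until an end-of-sequence token appears or the length limit is reached. That is why raising the length limit is what actually prevents truncation, while the sampling settings mainly affect the quality of the longer output.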

Hence, by raising the length limit we avoid cutting long-form text off early, and by tuning the sampling settings we keep that longer output coherent, detailed, and less repetitive.
