You can use L1 regularization with LogisticRegression in scikit-learn to perform feature selection: the L1 penalty shrinks the coefficients of less useful features to exactly zero.

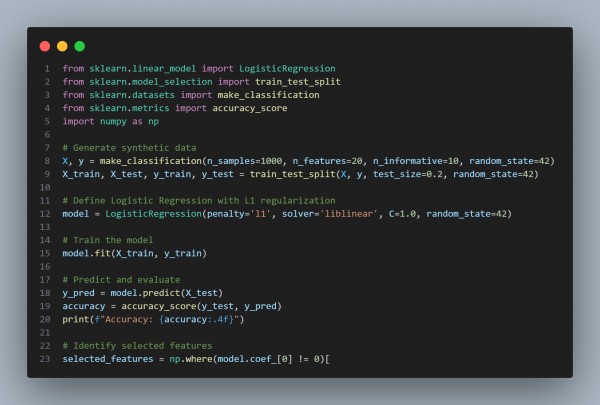

Here is the code snippet you can refer to:
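A minimal sketch consistent with the key points below might look like this. The breast-cancer dataset, the StandardScaler step, C=0.1 and the train/test split settings are illustrative assumptions, not necessarily what the original snippet used.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Assumption: the breast-cancer dataset stands in for your own data
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Standardize so the L1 penalty treats all features on the same scale
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# L1 penalty with a solver that supports it; C=0.1 is an illustrative choice
model = LogisticRegression(penalty="l1", solver="liblinear", C=0.1)
model.fit(X_train, y_train)

# Indices of features whose coefficient was not shrunk to zero
selected = np.where(model.coef_[0] != 0)[0]
print(f"Selected {len(selected)} of {X.shape[1]} features:", selected)

# Evaluate the sparse model on held-out data
y_pred = model.predict(X_test)
acc = accuracy_score(y_test, y_pred)
print("Accuracy:", acc)
```

The scaling step matters because the L1 penalty is applied to the raw coefficient sizes; without it, features measured on large scales are penalized differently from those on small scales.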

The key points in the above code are:

- penalty='l1' applies L1 regularization, which encourages sparsity in the feature weights.

- solver='liblinear' is a solver that supports L1 regularization for logistic regression ('saga' does too; the default 'lbfgs' does not).

- np.where(model.coef_[0] != 0) returns the indices of the non-zero coefficients, i.e. the selected features.

- accuracy_score() evaluates model performance after feature selection.

Hence, L1 regularization selects the most important features by driving the weights of irrelevant features to exactly zero, which makes the model easier to interpret and more efficient to apply.
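How many weights reach zero depends on the regularization strength C (smaller C means a stronger penalty). A rough sketch of this effect, assuming the breast-cancer dataset purely for illustration and an arbitrary grid of C values:

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Assumption: breast-cancer data used only for illustration
X, y = load_breast_cancer(return_X_y=True)
X = StandardScaler().fit_transform(X)

# Smaller C = stronger L1 penalty, so typically more coefficients end up exactly zero
n_selected = {}
for C in [0.01, 0.1, 1.0, 10.0]:
    model = LogisticRegression(penalty="l1", solver="liblinear", C=C)
    model.fit(X, y)
    n_selected[C] = int(np.count_nonzero(model.coef_[0]))
    print(f"C={C:>5}: {n_selected[C]} non-zero coefficients")
```

In practice C is usually tuned with cross-validation, trading off sparsity against accuracy.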