Deploy a Keras model using a Flask API with TensorFlow Serving and monitor performance using Prometheus and Grafana.

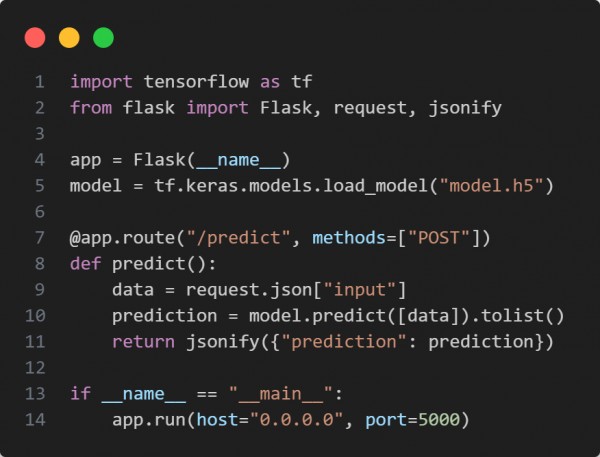

Here is the code snippet you can refer to:

The code above does the following:

- Loads a pre-trained Keras model (model.h5).

- Sets up a simple Flask API to serve predictions.

- Accepts JSON input and returns predictions.

- Runs the server on 0.0.0.0:5000, so it is reachable from other machines and containers rather than only from localhost.
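Because the snippet itself is not reproduced in the text, here is a minimal sketch of an app that matches the points above. It is not the author's exact code. The route name `/predict`, the request shape `{"input": [[...], ...]}` (a batch of feature rows), and the `model.h5` path are assumptions for illustration. Wrapping the app in a `create_app` factory keeps TensorFlow out of the import path, so the route can also be exercised with a stub model.

```python
from typing import Any, Optional

import numpy as np
from flask import Flask, jsonify, request


def create_app(model: Optional[Any] = None) -> Flask:
    """Build the prediction API around any object with a Keras-style predict()."""
    if model is None:
        # Lazy import: TensorFlow is only needed when loading the real model.
        from tensorflow.keras.models import load_model

        model = load_model("model.h5")  # assumed path; adjust to your file

    app = Flask(__name__)

    @app.route("/predict", methods=["POST"])  # hypothetical route name
    def predict():
        payload = request.get_json(silent=True)
        # Expect {"input": [[f1, f2, ...], ...]}; the "input" key is an assumption.
        if not isinstance(payload, dict) or "input" not in payload:
            return jsonify({"error": "expected a JSON object with an 'input' field"}), 400
        batch = np.asarray(payload["input"], dtype=np.float32)
        preds = model.predict(batch)
        # NumPy arrays are not JSON-serializable, so convert to nested lists.
        return jsonify({"prediction": np.asarray(preds).tolist()})

    return app


def main() -> None:
    # 0.0.0.0 binds every interface, so other hosts/containers can reach port 5000.
    create_app().run(host="0.0.0.0", port=5000)
```

Saved as `app.py`, the server can be started by calling `main()`, or with `flask --app app run --host 0.0.0.0 --port 5000` (Flask 2.2+), since the Flask CLI calls a `create_app()` factory with no arguments. Both use Flask's development server; a WSGI server such as Gunicorn is the usual choice in production.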

Hence, serving predictions through Flask while Prometheus collects metrics and Grafana visualizes them gives you a Keras model deployment whose traffic, latency, and errors are observable in production, which is what you need to find bottlenecks before scaling it.
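The monitoring side can be sketched with the official `prometheus_client` Python library. The `instrument()` helper below is hypothetical (not part of the original answer): it counts requests per route and status code, records latency in a histogram, and exposes both on a `/metrics` endpoint for Prometheus to scrape. The metric names are assumptions.

```python
import time
from typing import Optional

from flask import Flask, Response, g, request
from prometheus_client import (
    CONTENT_TYPE_LATEST,
    CollectorRegistry,
    Counter,
    Histogram,
    generate_latest,
)


def instrument(app: Flask, registry: Optional[CollectorRegistry] = None) -> Flask:
    """Attach request metrics and a /metrics endpoint to a Flask app (hypothetical helper)."""
    # A dedicated registry avoids "Duplicated timeseries" errors if this runs twice.
    registry = registry if registry is not None else CollectorRegistry()
    requests_total = Counter(
        "http_requests_total",
        "HTTP requests handled, by route and status code",
        ["endpoint", "status"],
        registry=registry,
    )
    latency = Histogram(
        "http_request_duration_seconds",
        "Request latency in seconds, by route",
        ["endpoint"],
        registry=registry,
    )

    @app.before_request
    def _start_timer():
        g.start_time = time.perf_counter()

    @app.after_request
    def _record_metrics(response):
        # Label by route pattern, not raw path, so label cardinality stays bounded.
        rule = request.url_rule
        endpoint = rule.rule if rule is not None else "unmatched"
        start = g.pop("start_time", None)
        if start is not None:
            latency.labels(endpoint=endpoint).observe(time.perf_counter() - start)
        requests_total.labels(endpoint=endpoint, status=str(response.status_code)).inc()
        return response

    @app.route("/metrics")
    def metrics():
        # Prometheus text exposition format, read by the Prometheus server on each scrape.
        return Response(generate_latest(registry), content_type=CONTENT_TYPE_LATEST)

    return app
```

Once the Flask app is wrapped (for example `app = instrument(app)` before it starts serving), Prometheus can scrape `http://<host>:5000/metrics`. A Grafana dashboard that uses Prometheus as its data source can then chart queries such as `rate(http_requests_total[5m])` for throughput or `histogram_quantile(0.95, sum by (le) (rate(http_request_duration_seconds_bucket[5m])))` for 95th-percentile latency.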
