To generate realistic images from textual descriptions, you can use DALL·E (OpenAI's text-to-image model) or pair a diffusion model with CLIP. Write a detailed, descriptive prompt, refine it through prompt engineering, and optionally use CLIP-based feedback to steer generation toward better text-image alignment.

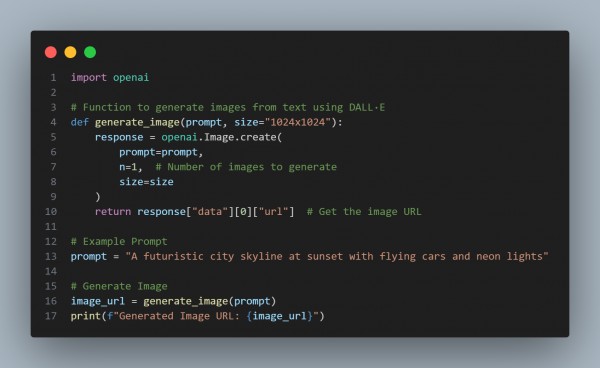

Here is the code snippet you can refer to:

In the above code, we use the following key approaches:

- Uses OpenAI’s DALL·E API for High-Quality Image Generation:
  - Transforms textual descriptions into realistic images using a pretrained diffusion model.
- Customizable Prompt Engineering for Better Outputs:
  - Detailed prompts that specify lighting, atmosphere, and artistic style generally produce more realistic and controllable results.
- Flexible Image Size Selection:
  - Supports several resolutions for different use cases. The accepted sizes depend on the model: DALL·E 2 accepts 256x256, 512x512, and 1024x1024, while DALL·E 3 accepts 1024x1024, 1792x1024, and 1024x1792.
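The three approaches above can be sketched in Python roughly as follows. This is a minimal sketch, not the code from the screenshot: it assumes the official `openai` Python SDK (v1 or later) with `OPENAI_API_KEY` set in the environment, and the helper names `build_prompt`, `is_supported_size`, and `generate_image` are hypothetical. The size table mirrors OpenAI's documented DALL·E 2 and DALL·E 3 sizes, which may change over time.

```python
"""Minimal sketch: DALL·E generation with a composed prompt and a size check.

Assumes the `openai` package (v1+) and an OPENAI_API_KEY environment variable.
build_prompt, is_supported_size and generate_image are hypothetical helpers.
"""

# Sizes accepted per model, per OpenAI's Images API docs (subject to change).
SUPPORTED_SIZES = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
}


def build_prompt(subject, lighting=None, atmosphere=None, style=None):
    """Prompt engineering: append optional descriptive details to the subject."""
    details = [d for d in (lighting, atmosphere, style) if d]
    return ", ".join([subject] + details)


def is_supported_size(model, size):
    """Return True if the Images API accepts this resolution for the model."""
    return size in SUPPORTED_SIZES.get(model, set())


def generate_image(prompt, model="dall-e-3", size="1024x1024"):
    """Call the Images API and return the URL of the generated image."""
    if not is_supported_size(model, size):
        raise ValueError(f"{size} is not supported by {model}")

    # Imported here so the helpers above can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(
        model=model,
        prompt=prompt,
        size=size,
        n=1,  # DALL·E 3 only supports one image per request
    )
    return response.data[0].url
```

For example, `generate_image(build_prompt("a lighthouse on a rocky coast at dusk", lighting="warm golden-hour light", style="photorealistic"))` requests a 1024x1024 image and returns its URL. Composing the prompt from named parts keeps prompt engineering repeatable, and validating the size locally fails fast instead of spending an API call on an invalid request.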

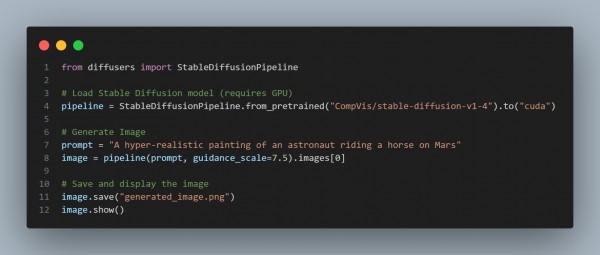

- Optimizing Image Generation with CLIP (Optional):
  - Combine CLIP with a diffusion model (e.g., Stable Diffusion with CLIP guidance) to steer generation toward images whose CLIP embedding closely matches the text prompt, improving prompt alignment.
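One simple way to add CLIP-based feedback is to generate several candidate images and keep the one CLIP scores as most similar to the prompt. Note that this is post-hoc reranking, a simpler stand-in for true CLIP guidance (which adjusts each denoising step using CLIP gradients). The sketch assumes `torch`, `transformers`, and `Pillow` are installed; `openai/clip-vit-base-patch32` is one publicly available CLIP checkpoint, and `clip_scores` and `rank_candidates` are hypothetical helper names.

```python
"""Minimal sketch: rerank candidate images by CLIP text-image similarity.

Post-hoc reranking, not gradient-based CLIP guidance. Assumes torch,
transformers and Pillow; clip_scores and rank_candidates are hypothetical.
"""


def rank_candidates(scores):
    """Return candidate indices ordered from highest to lowest CLIP score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def clip_scores(prompt, images, model_name="openai/clip-vit-base-patch32"):
    """Score each PIL image against the prompt; higher means better alignment."""
    # Heavy imports kept local so rank_candidates works without them.
    import torch
    from transformers import CLIPModel, CLIPProcessor

    model = CLIPModel.from_pretrained(model_name)
    processor = CLIPProcessor.from_pretrained(model_name)
    inputs = processor(text=[prompt], images=images, return_tensors="pt", padding=True)
    with torch.no_grad():
        outputs = model(**inputs)
    # logits_per_image has shape (num_images, num_texts); one prompt -> column 0.
    return outputs.logits_per_image[:, 0].tolist()
```

Given a list of candidate PIL images `images` (for example, from several DALL·E calls or a Stable Diffusion pipeline), `images[rank_candidates(clip_scores(prompt, images))[0]]` keeps the best-aligned one. Loading the model inside `clip_scores` keeps the sketch short; in practice you would load it once and reuse it across calls.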
