To improve coherence in long document processing with a transformer, you can use a memory-augmented transformer, apply sliding window attention, or fine-tune with a contrastive coherence loss.

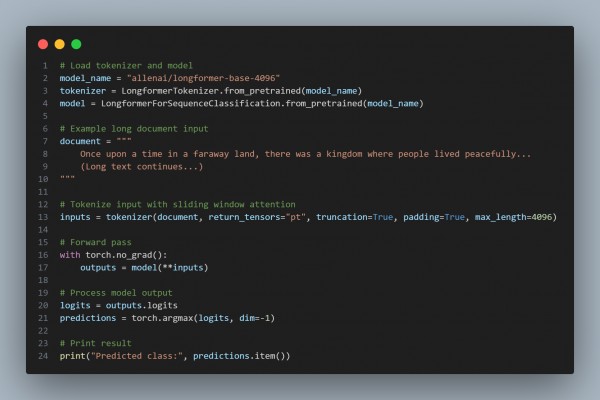

Here is the code snippet you can refer to:
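A minimal sketch of such a snippet, assuming the Hugging Face `transformers` and `torch` packages and the public `allenai/longformer-base-4096` checkpoint. The checkpoint choice, the helper names `classify_long_document` and `pick_label`, and the placeholder labels are illustrative assumptions. The classification head on the base checkpoint is untrained, so predictions only become meaningful after fine-tuning on labelled data.

```python
import math


def pick_label(logits, labels):
    """Turn raw class logits into (label, probability) via softmax + argmax."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


def classify_long_document(text, labels=("class_0", "class_1"),
                           checkpoint="allenai/longformer-base-4096"):
    """Classify one long document with Longformer (needs torch + transformers)."""
    # Imported lazily so pick_label stays usable without the heavy dependencies.
    import torch
    from transformers import LongformerForSequenceClassification, LongformerTokenizer

    # Model selection: Longformer with a sequence classification head.
    tokenizer = LongformerTokenizer.from_pretrained(checkpoint)
    model = LongformerForSequenceClassification.from_pretrained(
        checkpoint, num_labels=len(labels)
    )
    model.eval()

    # Tokenization: truncate to 4,096 tokens and pad to a fixed length.
    inputs = tokenizer(
        text,
        truncation=True,
        padding="max_length",
        max_length=4096,
        return_tensors="pt",
    )

    # Inference step: a single forward pass, no gradients needed.
    with torch.no_grad():
        logits = model(**inputs).logits[0].tolist()

    # Handling output: convert logits into a class prediction.
    return pick_label(logits, list(labels))
```

Calling `classify_long_document(long_text)` returns a `(label, probability)` pair. The first call downloads the checkpoint, so it needs network access.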

The approach relies on the following key points:

- Model Selection: Uses Longformer, a transformer variant designed for long documents (its released checkpoints accept inputs of up to 4,096 tokens).

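- Sliding Window Attention: Each token attends only to a fixed-size window of neighbouring tokens, plus a few globally attending tokens, so attention cost grows linearly with input length instead of quadratically while long-range context is still passed along.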

- Tokenization: Applies LongformerTokenizer with proper truncation and padding.

- Inference Step: Processes the document using the transformer and extracts predictions.

- Handling Output: Converts model output logits into a meaningful class prediction.
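The sliding window idea from the list above can be illustrated without any model. The following is a minimal pure-Python sketch, assuming a symmetric window of `window` tokens on each side and one globally attending position (similar in spirit to how Longformer treats the classification token). The function names are hypothetical, and this is not Longformer's actual implementation.

```python
def sliding_window_mask(seq_len, window, global_positions=(0,)):
    """Return mask[i][j] == True when token i may attend to token j."""
    global_set = set(global_positions)
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            # Local attention: neighbours within `window` positions.
            local = abs(i - j) <= window
            # Global attention: global tokens see, and are seen by, every token.
            is_global = i in global_set or j in global_set
            row.append(local or is_global)
        mask.append(row)
    return mask


def attended_pairs(mask):
    """Count the query-key pairs that are allowed by the mask."""
    return sum(sum(row) for row in mask)


mask = sliding_window_mask(seq_len=8, window=1)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
print(attended_pairs(mask), "of", 8 * 8, "pairs are computed")
```

With a fixed window, the number of allowed pairs grows roughly in proportion to `seq_len`, whereas full attention always needs `seq_len * seq_len` pairs.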

Conclusion:

Hence, by using a long-context transformer such as Longformer, whose sliding window attention lets it read the whole document in a single pass, the model can draw on context from across the document rather than from isolated chunks, which helps keep its predictions coherent on long inputs.
