When the discriminator overpowers the generator in a GAN, you can apply one-sided label smoothing, reduce the discriminator's learning rate, add a gradient penalty (WGAN-GP), or apply spectral normalization to the discriminator's layers.
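
One-sided label smoothing replaces the discriminator's target for real samples (1.0) with a softer value such as 0.9, while fake targets stay at 0.0. Below is a minimal pure-Python sketch of the resulting binary cross-entropy loss. It assumes the discriminator outputs probabilities, and the function names and the 0.9 value are illustrative choices, not taken from the original snippet:

```python
import math

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability and one target."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))

def discriminator_loss(real_probs, fake_probs, real_label=0.9):
    """Discriminator loss with one-sided label smoothing.

    Only the real targets are softened (1.0 -> real_label); fake targets stay 0.0.
    """
    real = sum(bce(p, real_label) for p in real_probs) / len(real_probs)
    fake = sum(bce(p, 0.0) for p in fake_probs) / len(fake_probs)
    return real + fake

# With a smoothed target, a discriminator that is almost certain about real
# samples pays a higher real-sample loss than one that outputs 0.9.
confident = discriminator_loss([0.999, 0.998], [0.01, 0.02])
calibrated = discriminator_loss([0.9, 0.9], [0.01, 0.02])
print(confident > calibrated)  # True
```

Because BCE with target 0.9 is minimized at a prediction of 0.9, the discriminator is no longer rewarded for pushing its real-sample outputs all the way to 1.0, which keeps its gradients to the generator more informative.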

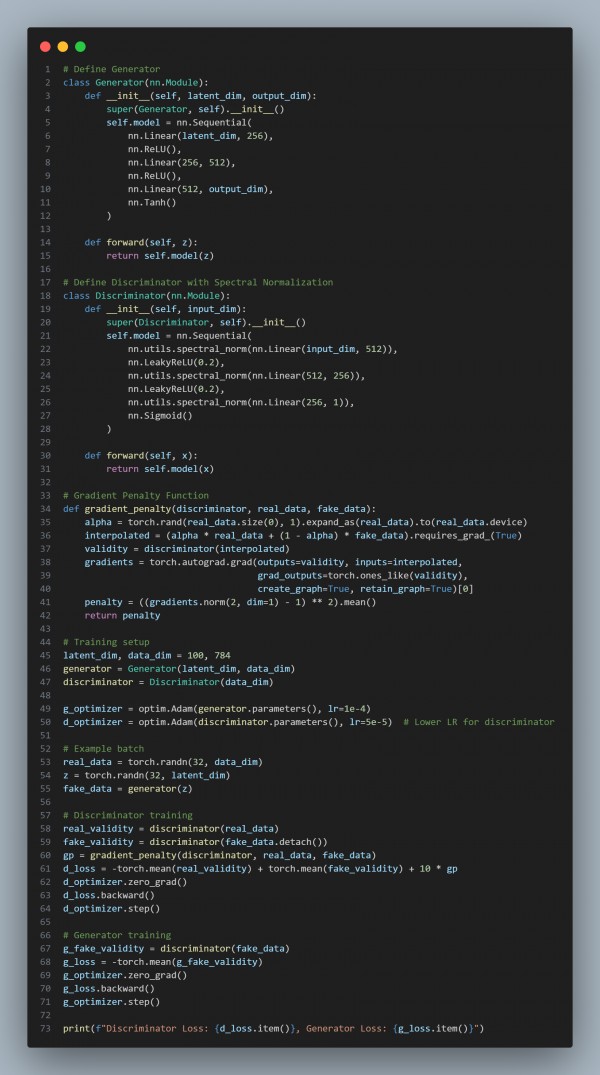

Here is the code snippet you can refer to:

The code above uses the following key techniques:

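- Spectral Normalization: Stabilizes the discriminator by dividing each weight matrix by its largest singular value, which bounds how sharply the discriminator's output can change with its input.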

- Lower Discriminator Learning Rate: Prevents the discriminator from learning too fast, ensuring balanced updates.

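- Gradient Penalty (WGAN-GP): Stabilizes training by penalizing the critic whenever the norm of its gradient with respect to its input, measured at points interpolated between real and generated samples, deviates from 1.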

- Leaky ReLU in Discriminator: Prevents dying neurons by keeping a small, non-zero slope (and therefore a non-zero gradient) for negative inputs.

- Balanced Training: Alternates training steps between generator and discriminator to maintain equilibrium.
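
Spectral normalization divides a weight matrix W by its largest singular value σ(W), so the normalized linear layer is 1-Lipschitz. Frameworks estimate σ(W) with power iteration; PyTorch's `torch.nn.utils.spectral_norm`, for example, by default runs a single iteration per training forward pass and reuses the estimate between steps. The pure-Python sketch below instead runs the iteration to convergence on one small matrix to show the computation. The helper names are hypothetical and not part of any library:

```python
import math
import random

def matvec(W, v):
    # W v for a matrix stored as a list of rows.
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]

def mat_t_vec(W, u):
    # W^T u without materializing the transpose.
    return [sum(W[i][j] * u[i] for i in range(len(W))) for j in range(len(W[0]))]

def normalize(v, eps=1e-12):
    n = math.sqrt(sum(x * x for x in v))
    return [x / (n + eps) for x in v]

def spectral_norm(W, n_iters=50, seed=0):
    """Estimate the largest singular value of W by power iteration."""
    rng = random.Random(seed)
    u = normalize([rng.gauss(0.0, 1.0) for _ in range(len(W))])
    v = normalize(mat_t_vec(W, u))
    for _ in range(n_iters):
        u = normalize(matvec(W, v))      # left singular vector estimate
        v = normalize(mat_t_vec(W, u))   # right singular vector estimate
    # sigma is approximately u^T W v once u and v have converged.
    return sum(u_i * wv_i for u_i, wv_i in zip(u, matvec(W, v)))

def spectrally_normalize(W, n_iters=50):
    """Return W / sigma(W), whose largest singular value is about 1."""
    sigma = spectral_norm(W, n_iters)
    return [[w / sigma for w in row] for row in W]

W = [[1.0, 2.0], [3.0, 4.0]]
W_sn = spectrally_normalize(W)
print(round(spectral_norm(W), 4))     # largest singular value of W
print(round(spectral_norm(W_sn), 4))  # 1.0
```

Reusing the singular-vector estimate across training steps, as frameworks do, makes one iteration per step cheap and usually sufficient, because the weights change only slightly between updates.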

Hence, by incorporating spectral normalization, adjusting learning rates, and applying gradient penalty, we mitigate discriminator dominance and stabilize GAN training for more robust text or image generation.
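
The WGAN-GP gradient penalty adds λ · mean((‖∇D(x̂)‖₂ − 1)²) to the critic loss, where x̂ is a random interpolation between a real and a generated sample; λ = 10 is the value commonly used with WGAN-GP. In a framework the gradient would come from autograd (for example `torch.autograd.grad` with `create_graph=True`). To keep this sketch self-contained, it instead assumes a hypothetical one-hidden-layer critic with leaky ReLU whose input gradient can be written by hand; the critic, its weights, and all function names are illustrative assumptions:

```python
import math
import random

def leaky_relu(z, slope=0.2):
    return z if z > 0 else slope * z

def leaky_relu_grad(z, slope=0.2):
    # Derivative of leaky ReLU: 1 for positive inputs, `slope` otherwise.
    return 1.0 if z > 0 else slope

def hidden(x, W):
    # Pre-activations h = W x of the hidden layer.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def critic(x, W, v):
    """Hypothetical toy critic: D(x) = v . leaky_relu(W x)."""
    return sum(vk * leaky_relu(hk) for vk, hk in zip(v, hidden(x, W)))

def critic_input_grad(x, W, v):
    """Hand-derived gradient dD/dx = W^T (v * leaky_relu'(W x))."""
    coeffs = [vk * leaky_relu_grad(hk) for vk, hk in zip(v, hidden(x, W))]
    return [sum(c * W[k][j] for k, c in enumerate(coeffs)) for j in range(len(x))]

def gradient_penalty(real, fake, W, v, lam=10.0, seed=0):
    """WGAN-GP term: lam * mean over pairs of (||grad D(x_hat)||_2 - 1)^2."""
    rng = random.Random(seed)
    total = 0.0
    for x_r, x_f in zip(real, fake):
        eps = rng.random()  # interpolation weight drawn from U[0, 1)
        x_hat = [eps * a + (1.0 - eps) * b for a, b in zip(x_r, x_f)]
        grad = critic_input_grad(x_hat, W, v)
        grad_norm = math.sqrt(sum(g * g for g in grad))
        total += (grad_norm - 1.0) ** 2
    return lam * total / len(real)

# Critic loss = Wasserstein term D(fake) - D(real) plus the gradient penalty.
W = [[0.5, -1.0], [2.0, 0.3]]
v = [1.0, -0.7]
real = [[1.0, 2.0], [0.5, 1.5]]
fake = [[0.0, -1.0], [-0.5, 0.2]]
wasserstein_term = (sum(critic(x, W, v) for x in fake)
                    - sum(critic(x, W, v) for x in real)) / len(real)
critic_loss = wasserstein_term + gradient_penalty(real, fake, W, v)
print(critic_loss)
```

The penalty is applied to a critic trained with the Wasserstein loss rather than to a sigmoid/BCE discriminator, and it constrains gradients only along the sampled interpolation lines, which is a softer alternative to hard weight clipping.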
