Dropout can help reduce variance in GAN-generated images. By randomly deactivating neurons during training, it keeps the network from relying too heavily on any single unit, which promotes generalization.

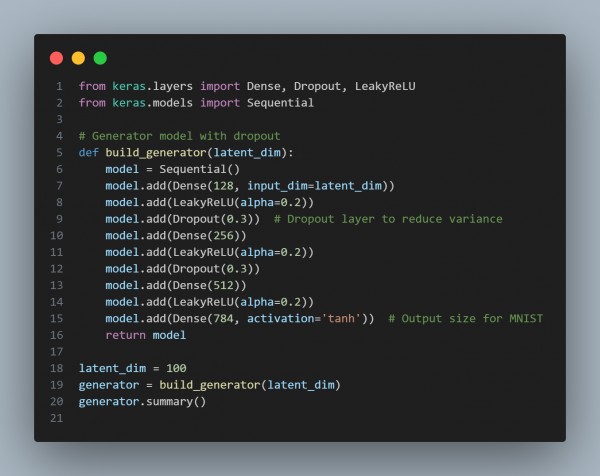

Here is the code snippet you can refer to:
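
A minimal sketch of such a generator, assuming PyTorch. The class name `Generator`, the layer sizes, the dropout rate of 0.3, and the 28x28 image size are illustrative assumptions, not fixed choices:

```python
import torch
import torch.nn as nn


class Generator(nn.Module):
    """Fully connected GAN generator with dropout between hidden layers.

    All sizes and the dropout rate are hypothetical defaults for illustration.
    """

    def __init__(self, latent_dim=100, img_dim=28 * 28, dropout_p=0.3):
        super().__init__()
        self.net = nn.Sequential(
            # Latent input: a random noise vector of size latent_dim
            nn.Linear(latent_dim, 256),
            nn.LeakyReLU(0.2),      # small slope for negative inputs
            nn.Dropout(dropout_p),  # randomly zeroes activations in training mode
            nn.Linear(256, 512),
            nn.LeakyReLU(0.2),
            nn.Dropout(dropout_p),
            nn.Linear(512, img_dim),
            nn.Tanh(),              # squashes outputs into [-1, 1]
        )

    def forward(self, z):
        return self.net(z)


latent_dim = 100
generator = Generator(latent_dim=latent_dim)

# A batch of 16 noise vectors
z = torch.randn(16, latent_dim)

# Training mode: dropout is active
generator.train()
fake_train = generator(z)

# Evaluation mode: dropout is disabled, so the output is deterministic for a given z
generator.eval()
with torch.no_grad():
    fake_eval = generator(z)
```

Dropout is only active in training mode. Calling `generator.eval()` before sampling disables it, so the same noise vector always maps to the same image.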

The code above relies on the following key points:

- Dropout: Reduces overfitting by randomly deactivating neurons during training.

- Latent Input: Accepts a random noise vector as the starting point for image generation.

- LeakyReLU: Keeps a small gradient for negative inputs, which helps prevent dead neurons and improves gradient flow.

- Tanh Output: Scales output to [-1, 1], so training images should be normalized to the same range.

- Customizable: The architecture is easy to adapt to specific tasks.

In this way, you can use dropout to reduce variance in GAN-generated images.
