A major issue with Generative Adversarial Networks (GANs) is mode collapse, where the generator fails to capture the diversity of the training data by producing limited and repeated outputs. Here's how to deal with this problem:

Employ Better GAN Structures

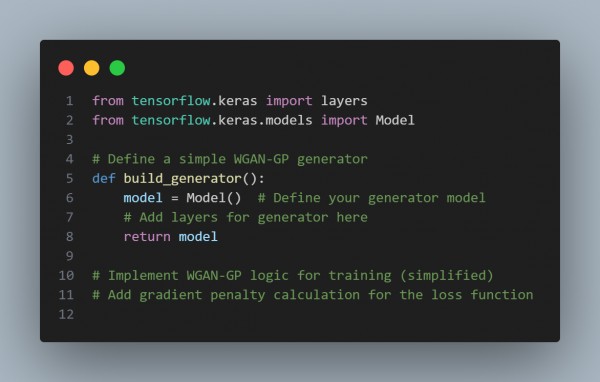

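To lessen mode collapse, use GAN variants such as Wasserstein GAN (WGAN) or WGAN-GP. These variants replace the standard adversarial loss with an estimate of the Wasserstein distance (WGAN-GP adds a gradient penalty on the critic), which stabilizes training and keeps learning more balanced between the discriminator and the generator.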
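As a rough illustration, the WGAN-GP losses could be sketched in PyTorch as follows. The function names, the `critic` argument, and the penalty weight of 10 are assumptions chosen for this example, not part of the original answer.

```python
import torch
import torch.nn as nn


def gradient_penalty(critic: nn.Module, real: torch.Tensor, fake: torch.Tensor) -> torch.Tensor:
    """WGAN-GP penalty: push the critic's gradient norm toward 1 on interpolated samples."""
    batch_size = real.size(0)
    # One mixing coefficient per sample, broadcast over the remaining dimensions.
    eps = torch.rand(batch_size, *([1] * (real.dim() - 1)), device=real.device)
    mixed = (eps * real.detach() + (1.0 - eps) * fake.detach()).requires_grad_(True)
    scores = critic(mixed)
    (grads,) = torch.autograd.grad(
        outputs=scores,
        inputs=mixed,
        grad_outputs=torch.ones_like(scores),
        create_graph=True,  # keep the graph so the penalty itself can be backpropagated
    )
    grad_norm = grads.reshape(batch_size, -1).norm(2, dim=1)
    return ((grad_norm - 1.0) ** 2).mean()


def critic_loss(critic: nn.Module, real: torch.Tensor, fake: torch.Tensor,
                gp_weight: float = 10.0) -> torch.Tensor:
    # Wasserstein critic loss plus the weighted gradient penalty.
    wasserstein = critic(fake.detach()).mean() - critic(real).mean()
    return wasserstein + gp_weight * gradient_penalty(critic, real, fake)


def generator_loss(critic: nn.Module, fake: torch.Tensor) -> torch.Tensor:
    # The generator tries to raise the critic's score on generated samples.
    return -critic(fake).mean()
```

In a training loop, `critic_loss` would typically be minimized for several critic steps per generator step, with the critic producing an unbounded score rather than a sigmoid probability.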

Make the Discriminator Noisier

Adding noise to the discriminator's inputs, both real and generated, keeps it from overfitting to specific generator outputs and helps it generalize.
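One way this could look in PyTorch is a small helper that adds Gaussian noise to whatever is fed to the discriminator. The helper name, the starting standard deviation of 0.1, and the linear decay schedule are assumptions made for this sketch.

```python
import torch


def add_instance_noise(x: torch.Tensor, step: int, total_steps: int,
                       start_std: float = 0.1) -> torch.Tensor:
    """Add zero-mean Gaussian noise whose standard deviation decays linearly to 0."""
    frac_left = max(0.0, 1.0 - step / max(1, total_steps))
    std = start_std * frac_left
    return x + std * torch.randn_like(x)
```

The same helper would be applied to both real and generated batches before the discriminator's forward pass, so the discriminator cannot separate them by the presence of noise alone.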

Mini-batch Discrimination

With mini-batch discrimination, the discriminator compares the samples within a mini-batch to one another, so it can detect when the generated samples lack diversity and penalize a collapsed generator.

For instance, a simplified mini-batch discrimination layer can be added near the end of the discriminator.
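A minimal PyTorch sketch of such a layer is shown below. The class name, the kernel counts, and the initialization scale are assumptions for illustration; the layer appends per-sample similarity statistics to the features it receives.

```python
import torch
import torch.nn as nn


class MinibatchDiscrimination(nn.Module):
    """Appends, for each sample, its similarity to the rest of the batch."""

    def __init__(self, in_features: int, num_kernels: int = 16, kernel_dim: int = 8):
        super().__init__()
        self.num_kernels = num_kernels
        self.kernel_dim = kernel_dim
        # Learned projection from features to num_kernels vectors of size kernel_dim.
        self.T = nn.Parameter(torch.randn(in_features, num_kernels * kernel_dim) * 0.1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, in_features) -> m: (batch, num_kernels, kernel_dim)
        m = (x @ self.T).view(-1, self.num_kernels, self.kernel_dim)
        # Pairwise L1 distance between samples for every kernel: (batch, batch, num_kernels)
        l1 = (m.unsqueeze(0) - m.unsqueeze(1)).abs().sum(dim=3)
        # Sum similarity over the batch; subtract 1 to drop each sample's self-match exp(0).
        similarity = torch.exp(-l1).sum(dim=0) - 1.0
        # Output: (batch, in_features + num_kernels)
        return torch.cat([x, similarity], dim=1)
```

The layer would sit just before the discriminator's final linear layer, whose input size grows by `num_kernels`. When every sample in a batch is identical, the appended values reach their maximum, which gives the discriminator an easy signal for collapse.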

Feature Matching

With a feature-matching loss, the generator no longer tries to fool the discriminator directly. Instead, it tries to match the statistics (typically the mean) of the features extracted from real data by an intermediate layer of the discriminator. This encourages the generator to capture the diversity of the data.
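A feature-matching loss could be sketched in PyTorch along these lines. The toy `Discriminator`, its layer sizes, and the `return_features` flag are hypothetical, introduced only so the example is self-contained.

```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    """Toy discriminator (hypothetical sizes) that can expose its intermediate features."""

    def __init__(self, in_dim: int = 64, hidden: int = 128):
        super().__init__()
        self.features = nn.Sequential(nn.Linear(in_dim, hidden), nn.LeakyReLU(0.2))
        self.head = nn.Linear(hidden, 1)

    def forward(self, x: torch.Tensor, return_features: bool = False) -> torch.Tensor:
        f = self.features(x)
        return f if return_features else self.head(f)


def feature_matching_loss(disc: Discriminator, real: torch.Tensor,
                          fake: torch.Tensor) -> torch.Tensor:
    # Real features act as a fixed target, so no gradient flows through them.
    with torch.no_grad():
        real_mean = disc(real, return_features=True).mean(dim=0)
    fake_mean = disc(fake, return_features=True).mean(dim=0)
    # Squared distance between the batch-mean feature vectors.
    return (real_mean - fake_mean).pow(2).mean()
```

This loss would replace or supplement the usual generator loss, while the discriminator keeps training with its normal objective.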

Use Various Training Methods

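Spectral Normalization: Apply spectral normalization to the discriminator's layers to limit how sharply its output can change, which stabilizes training.

Orthogonal Regularization: To keep outputs diverse, add a penalty that encourages the generator's weight matrices to stay close to orthogonal.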
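Both ideas could be sketched in PyTorch as below. `torch.nn.utils.spectral_norm` is the library's wrapper; the `make_discriminator` and `orthogonal_penalty` helpers, the layer sizes, and the choice of penalty form are assumptions for this example.

```python
import torch
import torch.nn as nn
from torch.nn.utils import spectral_norm


def make_discriminator(in_dim: int = 64, hidden: int = 128) -> nn.Module:
    # spectral_norm rescales each weight by an estimate of its largest singular value.
    return nn.Sequential(
        spectral_norm(nn.Linear(in_dim, hidden)),
        nn.LeakyReLU(0.2),
        spectral_norm(nn.Linear(hidden, 1)),
    )


def orthogonal_penalty(model: nn.Module) -> torch.Tensor:
    """Sum of ||W W^T - I||_F^2 over the Linear and Conv2d weights in `model`."""
    terms = []
    for module in model.modules():
        if isinstance(module, (nn.Linear, nn.Conv2d)):
            w = module.weight.flatten(1)  # (out_features, everything else)
            gram = w @ w.t()
            eye = torch.eye(gram.size(0), device=w.device, dtype=w.dtype)
            terms.append((gram - eye).pow(2).sum())
    if not terms:
        return torch.zeros(())
    return torch.stack(terms).sum()
```

The penalty would be added to the generator loss with a small coefficient, for example `g_loss + 1e-4 * orthogonal_penalty(generator)`, where the coefficient is a tuning choice. Note that `W W^T` can only equal the identity when a layer has no more outputs than inputs, so for wider layers the penalty acts as a soft constraint.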

By using these techniques, you can reduce mode collapse in GAN training.

