To reduce mode collapse when training a GAN on a highly imbalanced image dataset with noisy labels, you can combine the following strategies:

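- Class-conditioned GAN: Feed the class label (for example, as a one-hot vector or a learned embedding) to both the generator and the discriminator. Because the generator is asked to produce convincing samples for each requested class, it is less likely to collapse onto a few modes such as the majority class.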

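- Wasserstein GAN with Gradient Penalty (WGAN-GP): Replace the standard GAN loss with the Wasserstein loss and add a gradient penalty that pushes the norm of the critic's gradient toward 1 at points interpolated between real and fake samples. This softly enforces the Lipschitz constraint the Wasserstein loss requires, gives the generator more informative gradients, and tends to make training more stable and less prone to mode collapse.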

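- Label Smoothing: To cope with noisy labels, soften the target labels for real and fake samples (for example, 0.9 instead of 1.0 for real samples) so the discriminator becomes less overconfident and can be less sensitive to mislabeled examples. This applies to a cross-entropy discriminator loss; a WGAN-GP critic outputs unbounded scores and has no real/fake targets to smooth.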

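- Data Augmentation: Oversample the minority classes with augmented copies (for example, flips or small shifts) so that each class contributes a comparable number of training examples. This balances the dataset and encourages the generator to produce more diverse outputs for rare classes.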
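As a rough illustration of the first two strategies, the NumPy sketch below shows how a class label can be concatenated to the generator's noise vector and to the critic's input, and how the WGAN-GP critic loss adds a penalty on the critic's gradient norm at random interpolations between real and fake samples. It is a framework-free sketch under stated assumptions, not a training script: the tiny two-layer critic, its hand-derived gradient, the random stand-in data, and all helper names (`ConditionalCritic`, `critic_loss_wgan_gp`, `generator_input`, `generator_loss`) are hypothetical. In practice you would build these networks in a deep-learning framework and get the gradient from autograd. The penalty weight of 10 is the value commonly used with WGAN-GP.

```python
import numpy as np

# Hypothetical, framework-free sketch: a real model would use autograd
# (e.g. PyTorch) instead of the hand-derived gradient below.


def one_hot(labels, num_classes):
    """Encode integer class labels as one-hot rows of shape (batch, num_classes)."""
    out = np.zeros((labels.shape[0], num_classes))
    out[np.arange(labels.shape[0]), labels] = 1.0
    return out


def generator_input(z, labels, num_classes):
    """Class conditioning for the generator: concatenate noise with the one-hot label."""
    return np.concatenate([z, one_hot(labels, num_classes)], axis=1)


class ConditionalCritic:
    """Hypothetical tiny critic D(x, y) = w2 . tanh(W1 @ [x; y]) with unbounded scores."""

    def __init__(self, x_dim, num_classes, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.x_dim = x_dim
        self.W1 = rng.normal(0.0, 0.5, size=(hidden, x_dim + num_classes))
        self.w2 = rng.normal(0.0, 0.5, size=hidden)

    def _pre(self, x, y_onehot):
        # The label is concatenated to the sample, so the critic judges (x, y) pairs.
        return np.concatenate([x, y_onehot], axis=1) @ self.W1.T  # (batch, hidden)

    def score(self, x, y_onehot):
        return np.tanh(self._pre(x, y_onehot)) @ self.w2  # (batch,)

    def grad_wrt_x(self, x, y_onehot):
        # dD/dx = (w2 * (1 - tanh(pre)^2)) @ W1[:, :x_dim]; label columns are held fixed.
        dpre = (1.0 - np.tanh(self._pre(x, y_onehot)) ** 2) * self.w2
        return dpre @ self.W1[:, : self.x_dim]  # (batch, x_dim)


def critic_loss_wgan_gp(critic, real, fake, labels, num_classes, gp_weight=10.0, rng=None):
    """WGAN-GP critic loss: E[D(fake)] - E[D(real)] + lambda * E[(||grad D(x_hat)|| - 1)^2]."""
    rng = np.random.default_rng() if rng is None else rng
    y = one_hot(labels, num_classes)
    # Random points on straight lines between paired real and fake samples.
    eps = rng.uniform(size=(real.shape[0], 1))
    x_hat = eps * real + (1.0 - eps) * fake
    grad_norm = np.linalg.norm(critic.grad_wrt_x(x_hat, y), axis=1)
    gp = gp_weight * np.mean((grad_norm - 1.0) ** 2)
    wasserstein_term = np.mean(critic.score(fake, y)) - np.mean(critic.score(real, y))
    return wasserstein_term + gp, gp


def generator_loss(critic, fake, labels, num_classes):
    """The generator tries to raise the critic's score on its class-conditioned samples."""
    return -np.mean(critic.score(fake, one_hot(labels, num_classes)))


# Toy usage with random stand-in data (no real images or generator network here).
rng = np.random.default_rng(0)
num_classes, x_dim, z_dim, batch = 3, 8, 4, 32
labels = rng.integers(0, num_classes, size=batch)
g_in = generator_input(rng.normal(size=(batch, z_dim)), labels, num_classes)
real = rng.normal(size=(batch, x_dim))
fake = rng.normal(loc=0.5, size=(batch, x_dim))  # stand-in for generator(g_in)
critic = ConditionalCritic(x_dim, num_classes)
d_loss, gp = critic_loss_wgan_gp(critic, real, fake, labels, num_classes, rng=rng)
g_loss = generator_loss(critic, fake, labels, num_classes)
print(g_in.shape, float(d_loss), float(gp), float(g_loss))
```

In a real training loop, the critic would be updated to minimize `d_loss` (usually for several critic steps per generator step) and the generator to minimize `g_loss`.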

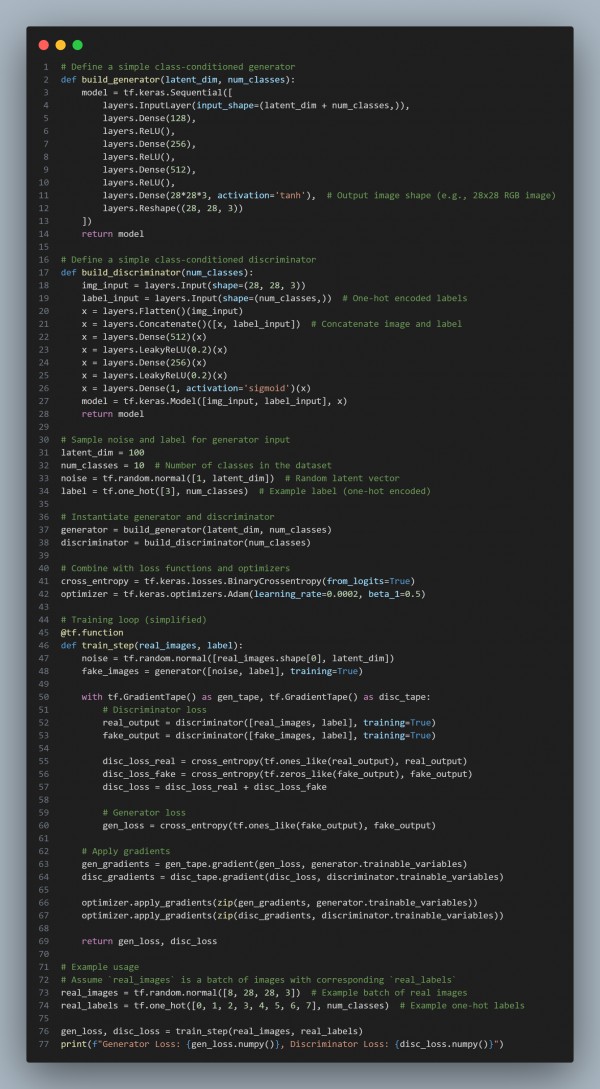

Here is the code snippet you can refer to:

The code above uses the following key strategies:

- Class-Conditioned GAN: Use class labels as input to both the generator and the discriminator to encourage diverse outputs for each class.

- WGAN-GP: Use Wasserstein loss with gradient penalty to stabilize training.

- Label Smoothing: Soften the target labels during training so the discriminator is less overconfident on noisy or mislabeled data.

- Data Augmentation: Augment minority class data to help the generator produce more diverse outputs.
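The label-smoothing and minority-oversampling steps can be sketched in NumPy as well. The smoothing part applies when the discriminator is trained with binary cross-entropy (a WGAN-GP critic has no real/fake targets to smooth); for noisy class labels, the same idea can be applied to the one-hot class targets. All function names, the 0.9 real target, the smoothing factor `alpha=0.1`, and the flip-and-shift augmentations are illustrative assumptions, not taken from the snippet above.

```python
import numpy as np

# Hypothetical helpers for illustration; names and default values are assumptions.


def discriminator_targets(n, real_value=0.9, fake_value=0.0):
    """Soft real/fake targets for a BCE discriminator (one-sided smoothing by default)."""
    return np.full(n, real_value), np.full(n, fake_value)


def bce(prob, target, eps=1e-7):
    """Binary cross-entropy between predicted probabilities and (possibly soft) targets."""
    p = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))


def smooth_class_labels(labels, num_classes, alpha=0.1):
    """Label smoothing for (possibly noisy) class labels: (1 - alpha) * one_hot + alpha / K."""
    one_hot = np.eye(num_classes)[labels]
    return (1.0 - alpha) * one_hot + alpha / num_classes


def oversample_with_augmentation(images, labels, rng, max_shift=2):
    """Balance classes by appending augmented copies of minority-class images.

    images: array of shape (N, H, W) or (N, H, W, C). The augmentation is a random
    horizontal flip plus a small circular horizontal shift (illustrative choices).
    """
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    out_images, out_labels = [images], [labels]
    for cls, count in zip(classes, counts):
        deficit = int(target - count)
        if deficit == 0:
            continue
        # Sample minority images with replacement, then perturb the copies.
        idx = rng.choice(np.flatnonzero(labels == cls), size=deficit, replace=True)
        extra = images[idx].copy()
        flip = rng.random(deficit) < 0.5
        extra[flip] = extra[flip][:, :, ::-1]  # flip along the width axis
        for i, shift in enumerate(rng.integers(-max_shift, max_shift + 1, size=deficit)):
            extra[i] = np.roll(extra[i], shift, axis=1)  # circular shift along width
        out_images.append(extra)
        out_labels.append(np.full(deficit, cls))
    return np.concatenate(out_images), np.concatenate(out_labels)


# Toy usage: 10 images of class 0 and 3 of class 1.
rng = np.random.default_rng(0)
images = rng.random((13, 8, 8))
labels = np.array([0] * 10 + [1] * 3)
bal_images, bal_labels = oversample_with_augmentation(images, labels, rng)
print(np.bincount(bal_labels))  # [10 10]

real_t, fake_t = discriminator_targets(4)
d_real = np.array([0.95, 0.9, 0.8, 0.99])  # stand-in discriminator outputs
d_fake = np.array([0.1, 0.2, 0.05, 0.3])
d_loss = bce(d_real, real_t) + bce(d_fake, fake_t)
soft = smooth_class_labels(np.array([0, 1]), num_classes=2)
print(d_loss, soft)
```

Oversampling only duplicates and perturbs existing minority images, so it balances class frequencies but cannot add genuinely new variation when a class has very few examples.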

Together, these methods help mitigate mode collapse and improve the diversity of the generated images, especially when the dataset is imbalanced or the labels are noisy.

