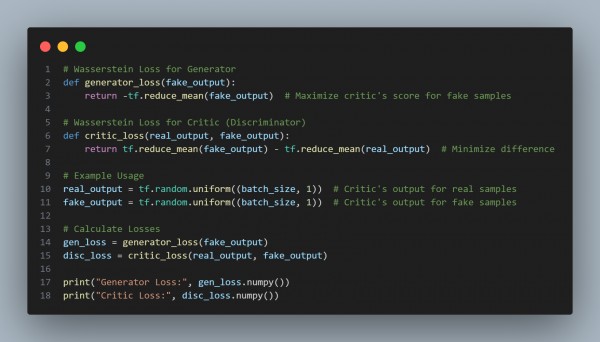

To implement the Wasserstein loss in TensorFlow for Wasserstein GANs (WGANs), compute the loss from the difference between the critic's (discriminator's) average scores on real and fake samples. Here is the code you can refer to:

The code above uses the following:

- Generator Loss: Maximize the critic's score for fake samples by minimizing $-\mathbb{E}[D(G(z))]$.

- Critic Loss: Minimize the difference $\mathbb{E}[D(G(z))] - \mathbb{E}[D(x)]$, where $D$ is the critic, $x$ is a real sample, and $G(z)$ is the generator's output for noise $z$.

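- Gradient Penalty: For WGAN-GP, add a gradient penalty term to the critic's loss to enforce the 1-Lipschitz constraint. The term is $\lambda\,\mathbb{E}_{\hat{x}}\left[(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1)^2\right]$, where $\hat{x}$ is sampled uniformly on straight lines between real samples $x$ and generated samples $G(z)$.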
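Since the original code is only shown as an image, here is a minimal sketch of the two losses listed above. It assumes the critic outputs one unbounded score per sample (a linear final layer, no sigmoid), shaped `(batch, 1)`. The names `critic_loss` and `generator_loss` are illustrative, not taken from the original code.

```python
import tensorflow as tf


def critic_loss(real_scores: tf.Tensor, fake_scores: tf.Tensor) -> tf.Tensor:
    """Wasserstein critic loss: E[D(G(z))] - E[D(x)]."""
    # Minimizing this raises the critic's scores on real data
    # and lowers them on generated data.
    return tf.reduce_mean(fake_scores) - tf.reduce_mean(real_scores)


def generator_loss(fake_scores: tf.Tensor) -> tf.Tensor:
    """Wasserstein generator loss: -E[D(G(z))]."""
    # Minimizing this maximizes the critic's score on generated samples.
    return -tf.reduce_mean(fake_scores)


if __name__ == "__main__":
    real = tf.constant([[1.0], [3.0]])   # critic scores on real samples (mean 2.0)
    fake = tf.constant([[-1.0], [0.0]])  # critic scores on fake samples (mean -0.5)
    print(float(critic_loss(real, fake)))   # -2.5
    print(float(generator_loss(fake)))      # 0.5
```

Both losses are plain means of raw critic scores, so no log or cross-entropy terms appear, unlike the standard GAN loss.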

This implementation assumes the Wasserstein loss in a basic WGAN. For WGAN-GP, add the gradient penalty term to the critic's loss.
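The penalty term can be sketched as below, assuming the standard WGAN-GP formulation: random interpolates between a real and a fake batch, and a penalty weight of 10 (the value used in the WGAN-GP paper). `gradient_penalty` is a hypothetical helper name, and `critic` can be any callable (for example a Keras model) that maps a batch to one score per sample. Summing the scores before differentiating gives per-sample gradients only if each score depends on its own input alone, which is one reason batch normalization is usually avoided in a WGAN-GP critic.

```python
import tensorflow as tf


def gradient_penalty(critic, real: tf.Tensor, fake: tf.Tensor,
                     weight: float = 10.0) -> tf.Tensor:
    """WGAN-GP penalty: weight * E[(||grad D(x_hat)||_2 - 1)^2]."""
    batch = tf.shape(real)[0]
    # One interpolation coefficient per sample, broadcast over all other axes.
    eps_shape = [batch] + [1] * (len(real.shape) - 1)
    eps = tf.random.uniform(eps_shape, 0.0, 1.0, dtype=real.dtype)
    # Random points on straight lines between real and fake samples.
    x_hat = eps * real + (1.0 - eps) * fake
    with tf.GradientTape() as tape:
        tape.watch(x_hat)
        scores = critic(x_hat, training=True)
    grads = tape.gradient(scores, x_hat)
    # Per-sample L2 norm of the gradient over all non-batch axes
    # (the small epsilon keeps sqrt differentiable at zero).
    axes = list(range(1, len(grads.shape)))
    norms = tf.sqrt(tf.reduce_sum(tf.square(grads), axis=axes) + 1e-12)
    # Penalize any deviation of the gradient norm from 1.
    return weight * tf.reduce_mean(tf.square(norms - 1.0))


if __name__ == "__main__":
    critic = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),  # linear output: unbounded critic score
    ])
    real = tf.random.normal([8, 4])
    fake = tf.random.normal([8, 4])
    print(float(gradient_penalty(critic, real, fake)))
```

In a critic update, you would call this inside the outer `tf.GradientTape` that records the critic's trainable variables and add the result to the critic loss; TensorFlow handles the nested tape needed for the resulting second-order gradient.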
