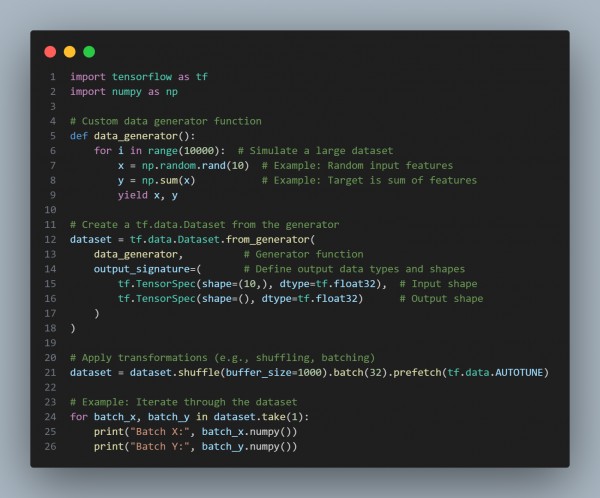

In order to build a custom data generator for large datasets in TensorFlow, you can create a Python generator function and wrap it with tf.data.Dataset.from_generator. This is especially useful for handling large datasets that cannot fit into memory. Here is the code snippet you can refer to:

The code above follows these steps:

- Define a Generator Function: Use `yield` to stream data one sample at a time.

- Wrap with `tf.data.Dataset.from_generator`: Specify the dtype and shape of each element using `output_signature`.

- Apply Optimizations: Shuffle, batch, and prefetch the data so the input pipeline keeps up with training.
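The three steps can be sketched as follows. This is a minimal, self-contained sketch, not the code from the snippet: the generator yields random `(features, label)` pairs as a stand-in for reading from disk, and the names `sample_generator`, `NUM_SAMPLES`, `FEATURE_DIM`, `NUM_CLASSES`, and `BATCH_SIZE`, as well as the buffer size, are hypothetical values chosen for illustration. It assumes TensorFlow 2.4 or later, where `output_signature` and `tf.data.AUTOTUNE` are available.

```python
import numpy as np
import tensorflow as tf

# Hypothetical dataset dimensions, chosen only for illustration.
NUM_SAMPLES = 1000
FEATURE_DIM = 20
NUM_CLASSES = 5
BATCH_SIZE = 32


def sample_generator():
    """Yield one (features, label) pair at a time.

    A real pipeline would read each sample from disk or a database here;
    random data stands in so the sketch runs on its own.
    """
    rng = np.random.default_rng(seed=0)
    for _ in range(NUM_SAMPLES):
        features = rng.standard_normal(FEATURE_DIM).astype(np.float32)
        label = np.int32(rng.integers(0, NUM_CLASSES))
        yield features, label


# output_signature declares the dtype and shape of every yielded element.
dataset = tf.data.Dataset.from_generator(
    sample_generator,
    output_signature=(
        tf.TensorSpec(shape=(FEATURE_DIM,), dtype=tf.float32),
        tf.TensorSpec(shape=(), dtype=tf.int32),
    ),
)

dataset = (
    dataset
    .shuffle(buffer_size=256)      # only 256 samples are buffered for shuffling
    .batch(BATCH_SIZE)             # group consecutive samples into batches
    .prefetch(tf.data.AUTOTUNE)    # prepare upcoming batches while the current one is used
)

# Inspect the first batch.
for batch_features, batch_labels in dataset.take(1):
    print(batch_features.shape, batch_labels.shape)
```

Because the dataset yields `(features, labels)` tuples, it can be passed directly to a Keras model, e.g. `model.fit(dataset, epochs=...)`. `from_generator` calls the generator function again at the start of each epoch, so the data is streamed anew every pass rather than cached in memory.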

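Hence, this approach allows efficient data loading for large datasets: since samples are generated lazily and only the shuffle and prefetch buffers are held in memory, the pipeline scales to datasets larger than RAM while keeping the loading logic fully customizable.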
