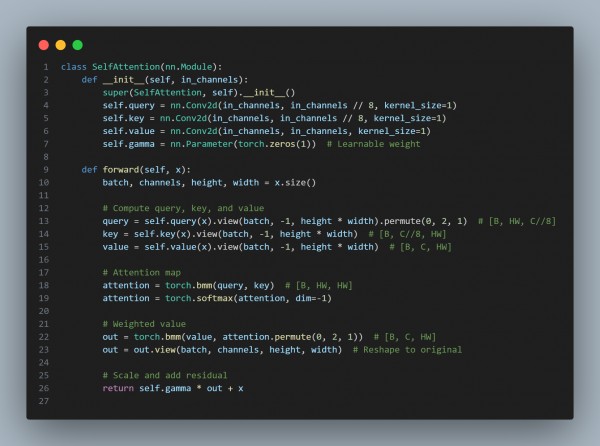

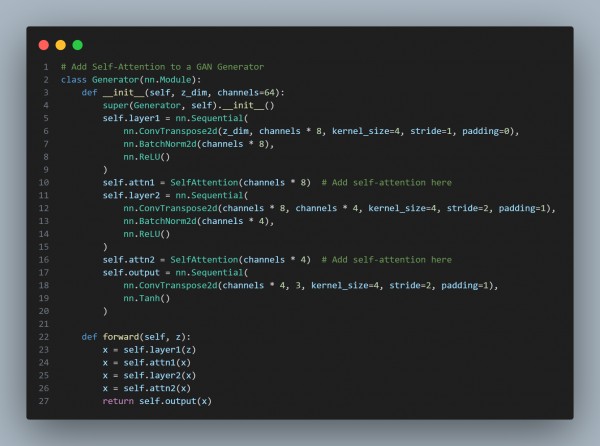

To implement self-attention layers in GANs for generating high-quality images with fine details, add a SAGAN-style self-attention module to the generator so that it can capture long-range dependencies across the image. Here is the code you can refer to:
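A minimal sketch of such a module in PyTorch, offered as one possible implementation rather than a definitive one. The class names `SelfAttention` and `Generator`, the layer sizes, the 32x32 output resolution, and the choice to reduce query/key channels by a factor of 8 are assumptions made for illustration:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SelfAttention(nn.Module):
    """SAGAN-style self-attention over the spatial positions of a feature map."""

    def __init__(self, in_channels: int):
        super().__init__()
        # 1x1 convolutions project the input into query, key, and value maps.
        # Reducing query/key channels by 8 is an assumed cost/quality trade-off.
        self.query = nn.Conv2d(in_channels, in_channels // 8, kernel_size=1)
        self.key = nn.Conv2d(in_channels, in_channels // 8, kernel_size=1)
        self.value = nn.Conv2d(in_channels, in_channels, kernel_size=1)
        # gamma starts at 0, so the layer is an identity mapping at initialization.
        self.gamma = nn.Parameter(torch.zeros(1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, h, w = x.shape
        n = h * w  # number of spatial positions
        q = self.query(x).view(b, -1, n).permute(0, 2, 1)  # (B, N, C//8)
        k = self.key(x).view(b, -1, n)                      # (B, C//8, N)
        v = self.value(x).view(b, c, n)                     # (B, C, N)
        # attn[b, i, j]: how much position i attends to position j.
        attn = F.softmax(torch.bmm(q, k), dim=-1)           # (B, N, N)
        out = torch.bmm(v, attn.permute(0, 2, 1))           # (B, C, N)
        out = out.view(b, c, h, w)
        # Residual connection scaled by the learnable gamma.
        return self.gamma * out + x


class Generator(nn.Module):
    """Hypothetical DCGAN-like generator with attention at two resolutions."""

    def __init__(self, z_dim: int = 100, base: int = 64):
        super().__init__()
        self.net = nn.Sequential(
            nn.ConvTranspose2d(z_dim, base * 4, 4, 1, 0),    # 1x1 -> 4x4
            nn.BatchNorm2d(base * 4), nn.ReLU(True),
            nn.ConvTranspose2d(base * 4, base * 2, 4, 2, 1), # 4x4 -> 8x8
            nn.BatchNorm2d(base * 2), nn.ReLU(True),
            SelfAttention(base * 2),                         # attention at 8x8
            nn.ConvTranspose2d(base * 2, base, 4, 2, 1),     # 8x8 -> 16x16
            nn.BatchNorm2d(base), nn.ReLU(True),
            SelfAttention(base),                             # attention at 16x16
            nn.ConvTranspose2d(base, 3, 4, 2, 1),            # 16x16 -> 32x32
            nn.Tanh(),
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        # Reshape the latent vector to a 1x1 feature map before upsampling.
        return self.net(z.view(z.size(0), -1, 1, 1))


if __name__ == "__main__":
    g = Generator()
    fake = g(torch.randn(2, 100))
    print(fake.shape)  # torch.Size([2, 3, 32, 32])
```

The attention matrix has one entry per pair of spatial positions, so memory grows with the square of `H * W`. That is why this sketch applies attention at the 8x8 and 16x16 stages rather than at the final resolution.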

The above code uses the following:

- Self-Attention Module:
  - Captures global dependencies and spatial relationships in the image.
  - Enhances fine details and overall coherence.
- Placement:
  - Add self-attention layers at multiple resolutions within the generator.
- Gamma Parameter:
  - Controls the influence of attention. Initialized to 0 for residual learning.
- GAN Improvements:
  - Boosts high-quality detail generation in datasets with complex textures or diverse features.
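The role of the gamma parameter can be written out explicitly. This is a sketch in assumed notation: $x_i$ is the input feature at spatial position $i$, and $q_i$, $k_j$, $v_j$ are its learned query, key, and value projections.

$$
\alpha_{ij} = \frac{\exp\left(q_i^{\top} k_j\right)}{\sum_{j'=1}^{N} \exp\left(q_i^{\top} k_{j'}\right)}, \qquad
o_i = \sum_{j=1}^{N} \alpha_{ij}\, v_j, \qquad
y_i = \gamma\, o_i + x_i
$$

With $\gamma = 0$ at initialization, $y_i = x_i$, so the layer initially passes its input through unchanged. As training moves $\gamma$ away from zero, the network gradually mixes in the attention output $o_i$.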

Hence, you can implement self-attention layers in GANs to generate high-quality images with fine details.
