To apply novel loss functions in GANs and improve the quality of generated text or images, you can use the following approaches:

- Perceptual Loss: Measures the difference in high-level features (e.g., from a pre-trained network) rather than pixel-level differences, improving perceptual quality.

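- Feature Matching Loss: Trains the generator so that the statistics of intermediate discriminator features for generated data match those for real data, instead of only trying to fool the discriminator's final output.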

- Wasserstein Loss: Uses the Wasserstein distance (Earth Mover’s distance) to improve training stability and reduce mode collapse.

- Adversarial Loss with Gradient Penalty: Stabilizes training in Wasserstein GANs (WGAN-GP) by penalizing the critic whenever the norm of its gradient with respect to its input deviates from 1.

- Cycle Consistency Loss: For tasks like image-to-image translation, it ensures that translating an input to the target domain and back again reconstructs the original input.
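The perceptual, feature matching, and cycle consistency losses listed above can be sketched in a few lines of PyTorch. This is a minimal sketch, not a definitive implementation: the function names and the `feature_extractor`, `g_ab`, and `g_ba` arguments are hypothetical, and the choice of MSE or L1 distance is an assumption. In practice the feature extractor is usually a frozen pre-trained network, and the discriminator features come from one of its intermediate layers.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def perceptual_loss(generated, target, feature_extractor):
    """Distance between high-level features instead of raw pixels.

    `feature_extractor` is a hypothetical stand-in for a frozen,
    pre-trained network that maps inputs to feature tensors.
    """
    return F.mse_loss(feature_extractor(generated), feature_extractor(target))


def feature_matching_loss(real_feats, fake_feats):
    """Match the batch-mean discriminator features of real and generated data.

    Both inputs are intermediate discriminator activations with the batch
    on dim 0. Real features are detached so they act as a fixed target.
    """
    return F.mse_loss(fake_feats.mean(dim=0), real_feats.mean(dim=0).detach())


def cycle_consistency_loss(x, g_ab, g_ba):
    """L1 distance between x and its round-trip reconstruction g_ba(g_ab(x)).

    `g_ab` and `g_ba` are hypothetical generators for the two directions.
    """
    return F.l1_loss(g_ba(g_ab(x)), x)
```

Each of these terms is typically added to the generator's adversarial loss with its own weight, which has to be tuned for the task.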

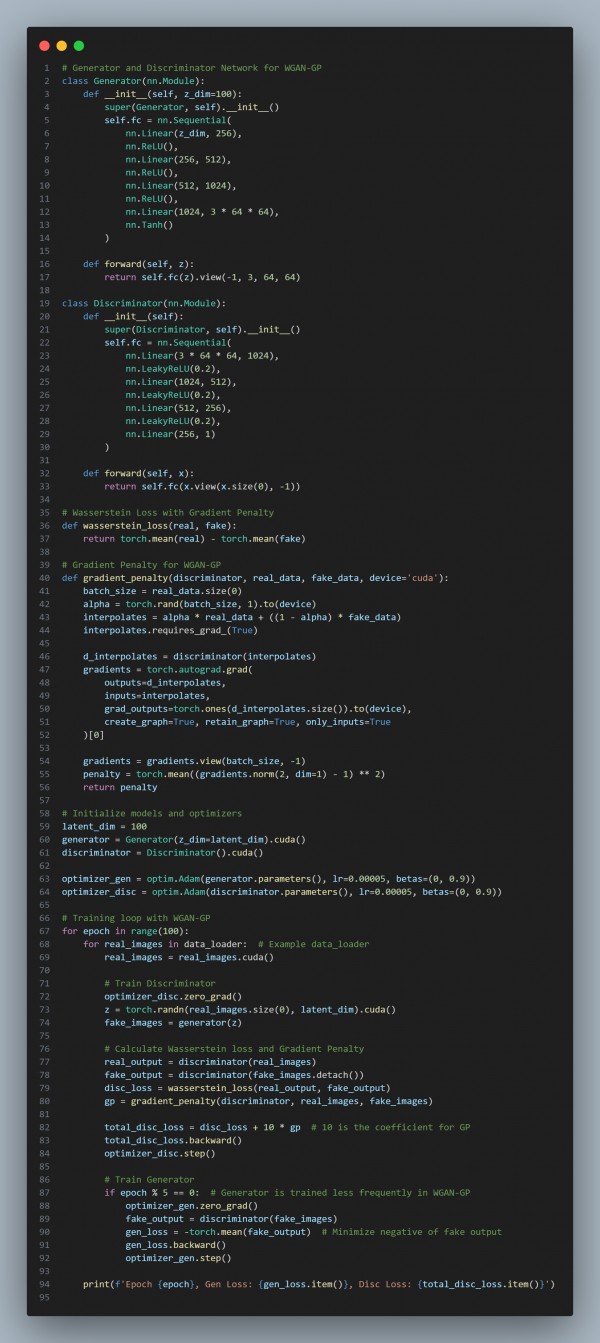

Here are the code snippets you can refer to:
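A minimal sketch of the WGAN-GP losses in PyTorch is shown here. The tiny fully connected `generator` and `critic`, the toy 2-D data, and the function names are assumptions made for illustration. The penalty weight of 10, the five critic steps per generator step, and the Adam settings follow the values commonly used with WGAN-GP and may need tuning for your data.

```python
import torch
import torch.nn as nn


def gradient_penalty(critic, real, fake):
    """Mean of (||grad critic(x_hat)||_2 - 1)^2 on random real/fake interpolates."""
    batch_size = real.size(0)
    # One mixing coefficient per sample, broadcast over the remaining dims.
    eps = torch.rand(batch_size, *([1] * (real.dim() - 1)), device=real.device)
    x_hat = (eps * real + (1.0 - eps) * fake).detach().requires_grad_(True)
    scores = critic(x_hat)
    grads, = torch.autograd.grad(
        outputs=scores,
        inputs=x_hat,
        grad_outputs=torch.ones_like(scores),
        create_graph=True,  # keep the graph so the penalty can be backpropagated
    )
    grad_norm = grads.reshape(batch_size, -1).norm(2, dim=1)
    return ((grad_norm - 1.0) ** 2).mean()


def critic_loss(critic, real, fake, lambda_gp=10.0):
    """Wasserstein critic loss plus the weighted gradient penalty."""
    fake = fake.detach()  # do not update the generator on the critic step
    wasserstein = critic(fake).mean() - critic(real).mean()
    return wasserstein + lambda_gp * gradient_penalty(critic, real, fake)


def generator_loss(critic, fake):
    """The generator tries to raise the critic's score on generated samples."""
    return -critic(fake).mean()


if __name__ == "__main__":
    torch.manual_seed(0)
    latent_dim, data_dim = 8, 2  # toy sizes, chosen only for this sketch
    generator = nn.Sequential(nn.Linear(latent_dim, 32), nn.ReLU(), nn.Linear(32, data_dim))
    critic = nn.Sequential(nn.Linear(data_dim, 32), nn.LeakyReLU(0.2), nn.Linear(32, 1))
    opt_g = torch.optim.Adam(generator.parameters(), lr=1e-4, betas=(0.0, 0.9))
    opt_c = torch.optim.Adam(critic.parameters(), lr=1e-4, betas=(0.0, 0.9))

    real = torch.randn(64, data_dim) + 3.0  # stand-in for a batch of real data
    for _ in range(5):  # several critic updates per generator update
        fake = generator(torch.randn(64, latent_dim))
        opt_c.zero_grad()
        critic_loss(critic, real, fake).backward()
        opt_c.step()

    opt_g.zero_grad()
    generator_loss(critic, generator(torch.randn(64, latent_dim))).backward()
    opt_g.step()
```

Note that the critic ends in a plain linear layer with no sigmoid, since it outputs an unbounded score rather than a probability.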

In the above code, we are using the following key points:

- Wasserstein Loss: Reduces mode collapse and improves stability by measuring the Earth Mover's distance between real and generated distributions.

- Gradient Penalty: Regularizes the discriminator (critic) by keeping the norm of its gradient close to 1, which helps the model converge more smoothly and avoids the vanishing or exploding gradients that weight clipping can cause.

- Adversarial Training: The generator and discriminator are trained adversarially, with the generator trying to fool the discriminator and the discriminator trying to differentiate real from fake data correctly.

- Stable Training: WGAN-GP encourages more stable GAN training, especially on complex data like high-resolution images.

Hence, by referring to the above, you can apply novel loss functions in GANs to improve the quality of generated text or images.

Related Post: Custom loss function for a GAN
