To deploy a trained PyTorch model on AWS Lambda for real-time inference, you can follow these steps:

- Prepare your PyTorch model

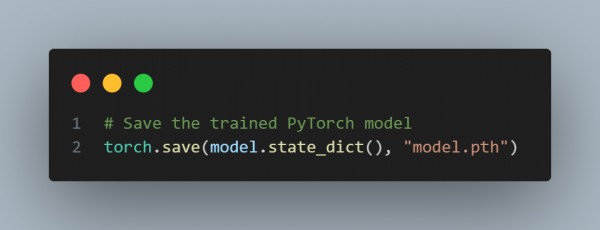

- Save the trained model as a .pth file.

- Create a Lambda Function

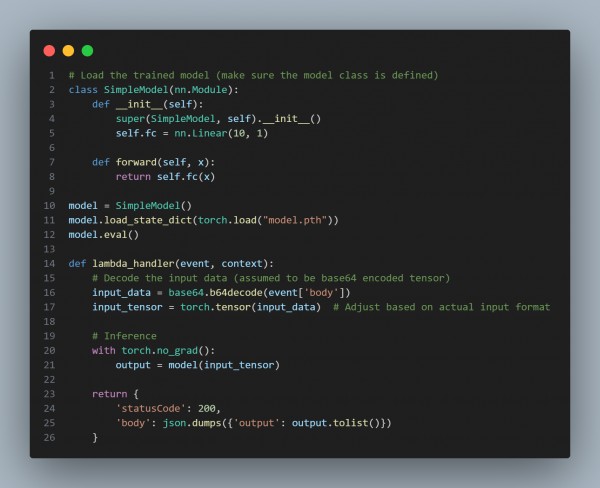

- Create a Python function that loads the model and performs inference.

Here is the code reference for the above steps:

- Package the Lambda deployment:

  - Package the Lambda function and its dependencies into a deployment package (ZIP file).

  - Include PyTorch and any other required dependencies, either through a Lambda Layer or bundled into the ZIP. Lambda caps a ZIP deployment at 250 MB unzipped, layers included, so a full PyTorch install may need a slimmed-down CPU-only build or a container image instead.

- Deploy on AWS Lambda:

  - Create a Lambda function in the AWS Console.

  - Upload the deployment package.

  - Set the handler to lambda_function.lambda_handler.

- Test the Lambda function:

  - Invoke the Lambda function through API Gateway or the AWS SDK.
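The packaging step can be sketched with Python's standard `zipfile` module. This assumes a hypothetical `build/` directory that already holds `lambda_function.py`, `model.pth`, and any dependencies installed with `pip install --target build/`; the directory and archive names are illustrative only.

```python
import zipfile
from pathlib import Path


def build_package(source_dir, zip_path):
    """Zip every file under source_dir, keeping paths relative to its root.

    Write the archive outside source_dir so it does not include itself.
    """
    source = Path(source_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            if path.is_file():
                # lambda_function.py must end up at the archive root so the
                # handler setting lambda_function.lambda_handler resolves.
                archive.write(path, path.relative_to(source).as_posix())
    return zip_path


if __name__ == "__main__":
    # Hypothetical paths; adjust to your project layout.
    if Path("build").is_dir():
        print(build_package("build", "deployment.zip"))
```

The resulting archive is what gets uploaded in the deploy step.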

This setup relies on three pieces: model loading, which loads the saved model inside the Lambda function; inference, which runs the model on the incoming request inside the function; and API Gateway, which exposes the Lambda function over HTTP for real-time access.
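For testing through the AWS SDK, a sketch along these lines may help. It uses boto3's `invoke` call on the Lambda client and wraps the input in an event shaped like an API Gateway proxy request. The function name `pytorch-inference`, the `inputs` field, and the assumption that the handler returns `statusCode` and `body` are all hypothetical; the optional `client` parameter exists only so the helper can be exercised without AWS credentials.

```python
import json


def invoke_inference(inputs, function_name="pytorch-inference", client=None):
    """Call the function synchronously and return (status, decoded body)."""
    if client is None:
        import boto3  # needs AWS credentials and a region to be configured
        client = boto3.client("lambda")

    # Mimic the event shape of an API Gateway proxy integration.
    event = {"body": json.dumps({"inputs": inputs})}
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the result
        Payload=json.dumps(event).encode("utf-8"),
    )

    # The payload is a stream containing whatever the handler returned.
    result = json.loads(response["Payload"].read())
    return result["statusCode"], json.loads(result["body"])
```

Calling `invoke_inference([[1.0, 2.0, 3.0, 4.0]])` would then send one input row to the deployed function, assuming it exists under that name in your account.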

With these pieces in place, a trained PyTorch model can serve real-time inference requests from AWS Lambda.
