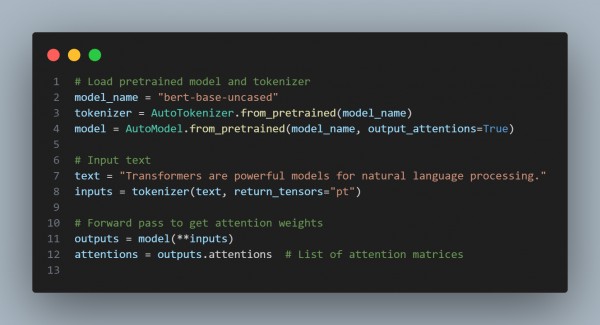

You can visualize the attention weights of a transformer model with Matplotlib and Hugging Face Transformers, as in the example below:

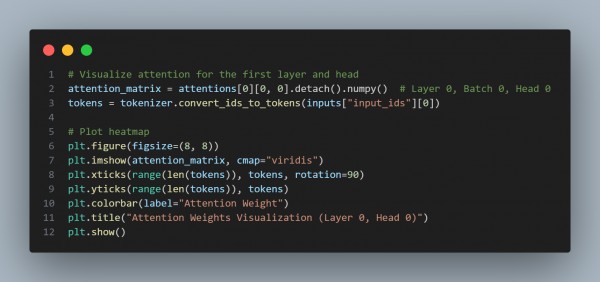

The code above has three parts: extracting the attention weights, by setting output_attentions=True when the model is initialized so that it returns them; token mapping, which converts token IDs back to tokens for use as labels on the plot; and a heatmap, which visualizes the attention matrix with tokens on both axes.

The resulting plot shows how strongly each token attends to every other token in a given transformer layer.
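For readers who cannot copy code from the image, here is a minimal text sketch of the same three steps. It is not a transcription of the original code: the function names `extract_attention` and `plot_attention`, the default checkpoint `bert-base-uncased`, the `viridis` colormap, and the choice of layer 0 and head 0 are all assumptions made for illustration. The `attn_implementation="eager"` argument is also an assumption; recent versions of Transformers may need it before attention weights are returned.

```python
def extract_attention(text, model_name="bert-base-uncased", layer=0, head=0):
    """Return (tokens, attention matrix) for one layer and one head.

    model_name, layer and head are illustrative defaults, not values
    taken from the original answer.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # output_attentions=True asks the model to return attention weights.
    # attn_implementation="eager" is an assumption for recent library versions.
    model = AutoModel.from_pretrained(
        model_name, output_attentions=True, attn_implementation="eager"
    )
    model.eval()

    inputs = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)

    # outputs.attentions is a tuple with one tensor per layer,
    # each of shape (batch, heads, seq_len, seq_len).
    attn = outputs.attentions[layer][0, head].numpy()

    # Token mapping: convert token IDs back to token strings for the labels.
    tokens = tokenizer.convert_ids_to_tokens(inputs["input_ids"][0])
    return tokens, attn


def plot_attention(tokens, attn, title="Attention weights"):
    """Draw the attention matrix as a heatmap with tokens on both axes."""
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=(6, 5))
    im = ax.imshow(attn, cmap="viridis")

    positions = range(len(tokens))
    ax.set_xticks(positions)
    ax.set_yticks(positions)
    ax.set_xticklabels(tokens, rotation=90)
    ax.set_yticklabels(tokens)
    ax.set_xlabel("Key (attended-to) token")
    ax.set_ylabel("Query token")
    ax.set_title(title)

    fig.colorbar(im, ax=ax)
    fig.tight_layout()
    return fig
```

To use it, call `tokens, attn = extract_attention("The cat sat on the mat.")`, then `plot_attention(tokens, attn)`, and display the figure with `matplotlib.pyplot.show()`. The first call downloads the checkpoint, so it needs network access. Each row of the heatmap is one query token, and the values in a row sum to 1 because they come from a softmax.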

