Sequence masking improves model stability by ensuring that variable-length inputs are processed correctly, without letting padding tokens influence computations. It works by masking out the padding positions in operations such as attention (where their scores are suppressed before the softmax) and loss calculation (where they are excluded from the average).
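As a minimal sketch, assuming each batch is right-padded and the true length of every sequence is known, a padding mask can be built by comparing position indices against each sequence's length. The helper name `make_padding_mask` is hypothetical:

```python
import torch

def make_padding_mask(lengths: torch.Tensor, max_len: int) -> torch.Tensor:
    """Return a (batch, max_len) bool mask: True for real tokens, False for padding."""
    positions = torch.arange(max_len, device=lengths.device)  # (max_len,)
    # Broadcast (1, max_len) against (batch, 1): a position is real if it is below the length
    return positions.unsqueeze(0) < lengths.unsqueeze(1)

lengths = torch.tensor([3, 1, 4])  # true lengths of three sequences padded to length 4
mask = make_padding_mask(lengths, max_len=4)
print(mask.tolist())
# [[True, True, True, False], [True, False, False, False], [True, True, True, True]]
```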

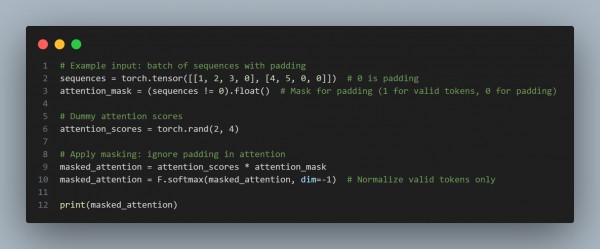

Here is the code snippet using PyTorch:
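A minimal sketch of masking inside attention, assuming inputs of shape `(batch, seq_len, dim)` and a boolean mask where `True` marks real tokens: scores for padded key positions are set to `-inf` before the softmax, so those positions receive exactly zero attention weight. The function `masked_attention` and the toy shapes are illustrative assumptions:

```python
import math
import torch
import torch.nn.functional as F

def masked_attention(q, k, v, key_padding_mask):
    # q, k, v: (batch, seq_len, dim); key_padding_mask: (batch, seq_len), True = real token
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.size(-1))  # (batch, seq_len, seq_len)
    # Add a query axis so the mask broadcasts over every query row, then block padded keys.
    # Each sequence needs at least one real token; an all -inf row would make softmax return NaN.
    scores = scores.masked_fill(~key_padding_mask.unsqueeze(1), float("-inf"))
    weights = F.softmax(scores, dim=-1)  # exp(-inf) = 0, so padded keys get zero weight
    return weights @ v, weights

torch.manual_seed(0)
x = torch.randn(2, 4, 8)        # 2 sequences padded to length 4, embedding dim 8
lengths = torch.tensor([4, 2])  # the second sequence ends with 2 padding tokens
mask = torch.arange(4).unsqueeze(0) < lengths.unsqueeze(1)  # (2, 4) bool
out, weights = masked_attention(x, x, x, mask)
print(weights[1])  # columns 2 and 3 (the padded keys) are exactly 0
```

Padded query rows still produce outputs here; they are typically discarded downstream, for example by masking the loss. PyTorch's built-in `nn.MultiheadAttention` also accepts a `key_padding_mask` argument, but its boolean convention is inverted: `True` marks positions to ignore.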

This masking provides three benefits:

- **Preventing noise:** padding tokens do not contribute to predictions.
- **Focusing attention:** the model attends only to the meaningful parts of each input sequence.
- **Stabilizing training:** padded positions contribute nothing to the loss, so they cannot produce spurious gradients.
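To illustrate the training-stability point, the sketch below masks padding out of a token-level cross-entropy loss and checks that padded positions receive no gradient. `PAD_ID = 0` and the toy tensors are assumptions for illustration; `ignore_index` and `reduction` are standard arguments of `torch.nn.functional.cross_entropy`:

```python
import torch
import torch.nn.functional as F

PAD_ID = 0  # hypothetical padding token id; use your tokenizer's actual pad id
vocab_size = 5

torch.manual_seed(0)
# Token-level logits for 2 sequences padded to length 4
logits = torch.randn(2, 4, vocab_size, requires_grad=True)
targets = torch.tensor([[3, 1, 4, 2],
                        [2, 4, PAD_ID, PAD_ID]])  # last two positions of sequence 2 are padding

# cross_entropy expects (N, C) logits and (N,) targets, so flatten batch and time.
# ignore_index excludes padded positions from both the sum and the averaging denominator.
loss = F.cross_entropy(logits.reshape(-1, vocab_size), targets.reshape(-1), ignore_index=PAD_ID)
loss.backward()

# Padded positions receive no gradient, so they cannot push the weights around
print(logits.grad[1, 2:].abs().sum())  # tensor(0.)

# Equivalent explicit version: per-token loss multiplied by a boolean padding mask
mask = targets != PAD_ID
per_token = F.cross_entropy(logits.detach().reshape(-1, vocab_size), targets.reshape(-1),
                            reduction="none").view(2, 4)
manual = (per_token * mask).sum() / mask.sum()
print(torch.allclose(manual, loss.detach()))  # True
```

Both versions average over the six real tokens only; `ignore_index` is the more compact option, while the explicit mask generalizes to losses that have no built-in ignore argument.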

In short, sequence masking improves model stability on variable-length text by keeping padding out of attention, predictions, and gradients.
