Effective methods for detecting inappropriate outputs in text generation are as follows:

- Rule-Based Filtering: Uses keyword matching or regular expressions to flag offensive language.

- Toxicity Classifiers: Use pre-trained classifiers such as the Perspective API or Hugging Face toxicity models.

- Embedding-Based Similarity: Compares outputs against embeddings of known inappropriate content using cosine similarity.

- Human-in-the-Loop Review: Incorporates manual review for edge cases.
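The rule-based and embedding-based methods above can be sketched with the Python standard library alone. This is only an illustration: `BLOCKLIST`, `rule_based_flag`, `cosine_similarity`, `embedding_flag`, and the `0.8` threshold are hypothetical names and values, and the vectors are toy numbers standing in for embeddings that a real sentence-embedding model would produce.

```python
import math
import re

# Hypothetical blocklist; a real deployment would maintain a curated list.
BLOCKLIST = ["idiot", "stupid"]

# Word boundaries avoid flagging harmless words that merely contain a blocked term.
BLOCK_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(word) for word in BLOCKLIST) + r")\b",
    re.IGNORECASE,
)


def rule_based_flag(text: str) -> list[str]:
    """Return the blocked terms found in text, lowercased, in order of appearance."""
    return [match.group(0).lower() for match in BLOCK_PATTERN.finditer(text)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def embedding_flag(
    output_vec: list[float],
    flagged_vecs: list[list[float]],
    threshold: float = 0.8,  # assumed cutoff; tune on labeled data
) -> bool:
    """Flag the output if it is close to any known inappropriate embedding."""
    return any(cosine_similarity(output_vec, vec) >= threshold for vec in flagged_vecs)


if __name__ == "__main__":
    print(rule_based_flag("You are an IDIOT."))
    print(embedding_flag([0.9, 0.1], [[1.0, 0.0]]))
```

Keyword rules are cheap but easy to evade with misspellings, while embedding similarity can catch paraphrases at the cost of needing an embedding model and a tuned threshold, so the two are often combined.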

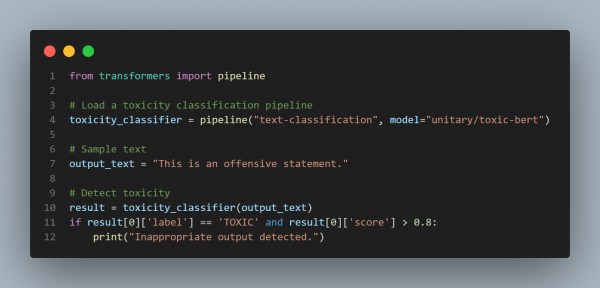

Here is an example of one of these methods, the toxicity classifier approach.
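A minimal sketch of the toxicity-classifier approach might look like the following. The helper names `flag_toxic` and `load_hf_scorer`, the `0.5` threshold, and the choice of the `unitary/toxic-bert` model with a label called `toxic` are assumptions made for illustration. The Hugging Face part assumes a recent version of the `transformers` package is installed; other models may use different label names.

```python
from typing import Callable, Iterable


def flag_toxic(
    texts: Iterable[str],
    scorer: Callable[[str], float],
    threshold: float = 0.5,  # assumed cutoff; tune for your use case
) -> list[tuple[str, float]]:
    """Return (text, score) pairs whose toxicity score meets the threshold."""
    flagged = []
    for text in texts:
        score = scorer(text)
        if score >= threshold:
            flagged.append((text, score))
    return flagged


def load_hf_scorer(
    model_name: str = "unitary/toxic-bert",  # assumed model choice
    label: str = "toxic",  # assumed label name for this model
) -> Callable[[str], float]:
    """Build a scorer backed by a Hugging Face text-classification pipeline."""
    # Imported lazily so flag_toxic can be used without transformers installed.
    from transformers import pipeline

    classifier = pipeline("text-classification", model=model_name)

    def scorer(text: str) -> float:
        # top_k=None requests a score for every label, not just the top one.
        scores = classifier([text], top_k=None)[0]
        # Fall back to 0.0 if the model has no label with the expected name.
        return next((s["score"] for s in scores if s["label"] == label), 0.0)

    return scorer
```

With the model available, usage would be along the lines of `flag_toxic(generated_outputs, load_hf_scorer())`. Keeping the scorer as a plain callable means the same filter could wrap a different classifier, such as a Perspective API client, without changes.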

Because a toxicity classifier inspects only the generated text, it works with any generation model, which makes it an efficient, model-agnostic way to flag inappropriate content.

Using these methods, you can detect inappropriate outputs in text generation.
