You can batch and pad variable-length sequences efficiently in transformers by using padding tokens and attention masks, as shown below:

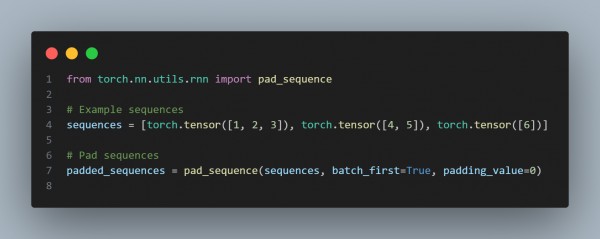

- Use of pad_sequence for Batching and Padding: `torch.nn.utils.rnn.pad_sequence` pads a list of variable-length tensors to the length of the longest sequence in the batch, making it easy to handle variable-length inputs.
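A minimal sketch of `pad_sequence` in isolation, assuming the sequences are already tokenized into 1-D tensors of token IDs. The IDs below are illustrative only, and the pad value of 0 is an assumption that matches the convention used in this answer:

```python
import torch
from torch.nn.utils.rnn import pad_sequence

# Three tokenized sequences of different lengths (illustrative token IDs)
seqs = [
    torch.tensor([101, 2023, 2003, 102]),
    torch.tensor([101, 7592, 102]),
    torch.tensor([101, 102]),
]

# batch_first=True gives shape (batch, max_len); shorter rows are filled with 0
padded = pad_sequence(seqs, batch_first=True, padding_value=0)

print(padded.shape)     # torch.Size([3, 4])
print(padded.tolist())  # [[101, 2023, 2003, 102], [101, 7592, 102, 0], [101, 102, 0, 0]]
```

Without `batch_first=True`, `pad_sequence` returns shape `(max_len, batch)`, which is the layout older RNN-style code expects.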

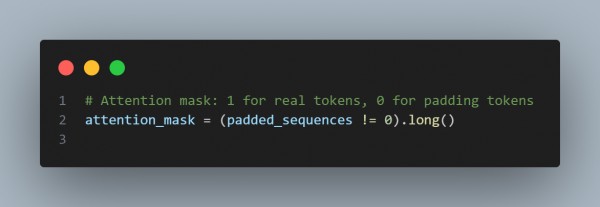

- Creating Attention Masks: You can create an attention mask to inform the transformer which tokens are actual data and which are padding. Padding tokens (usually 0) are marked with 0 in the mask, while real tokens are marked with 1.
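A sketch of two common ways to build the mask, under the assumption that 0 is the pad value. Deriving it from the pad token is simplest, but it breaks if 0 can also be a real token ID; deriving it from the original sequence lengths avoids that problem:

```python
import torch
from torch.nn.utils.rnn import pad_sequence

seqs = [torch.tensor([5, 6, 7]), torch.tensor([8, 9]), torch.tensor([4])]
padded = pad_sequence(seqs, batch_first=True, padding_value=0)

# Option A: mark every non-pad position as 1 (assumes 0 is never a real token ID)
mask_from_pad = (padded != 0).long()

# Option B: mark positions before each sequence's true length as 1
lengths = torch.tensor([len(s) for s in seqs])
positions = torch.arange(padded.size(1))            # [0, 1, ..., max_len - 1]
mask_from_len = (positions[None, :] < lengths[:, None]).long()

print(mask_from_pad.tolist())  # [[1, 1, 1], [1, 1, 0], [1, 0, 0]]
```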

In the code in the screenshots above, padding standardizes sequence lengths within a batch by filling shorter sequences with a padding token (0 here). The attention mask then tells the transformer to ignore those padding tokens during attention computation, so padding does not change the results while the whole batch can still be processed as a single tensor, which makes efficient use of computation and memory.
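To show how the mask is actually used, here is a minimal single-head scaled dot-product attention sketch (not any specific model's implementation). Padded key positions get a score of `-inf`, so softmax assigns them a weight of exactly 0, and the outputs for the real tokens match what you would get without any padding:

```python
import math
import torch

def masked_attention(q, k, v, attention_mask):
    """Scaled dot-product attention that ignores padded key positions.

    q, k, v: (batch, seq_len, d); attention_mask: (batch, seq_len), 1 = real, 0 = pad.
    """
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.size(-1))  # (batch, seq_len, seq_len)
    # Broadcast the mask over the query dimension: (batch, 1, seq_len)
    key_is_real = attention_mask[:, None, :].bool()
    # A score of -inf becomes a weight of exactly 0 after softmax
    scores = scores.masked_fill(~key_is_real, float("-inf"))
    weights = torch.softmax(scores, dim=-1)
    return weights @ v, weights

torch.manual_seed(0)
x = torch.randn(1, 3, 4)          # one sequence; position 2 stands in for padding
mask = torch.tensor([[1, 1, 0]])
out, weights = masked_attention(x, x, x, mask)

# Reference: attention over the two real tokens only, with no padding at all
x_real = x[:, :2]
out_ref, _ = masked_attention(x_real, x_real, x_real, torch.ones(1, 2))

print(weights[0, :, 2])                          # weight given to the pad position
print(torch.allclose(out[:, :2], out_ref, atol=1e-6))
```

In PyTorch 2.0 and later, `torch.nn.functional.scaled_dot_product_attention` accepts an `attn_mask` argument that serves the same purpose, so a hand-written version like this is mainly useful for understanding the mechanism.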

Transformer models such as BERT and GPT combine these techniques to train and run inference on variable-length sequences efficiently.
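One way to combine both steps in a training or inference loop is a custom `collate_fn` for `torch.utils.data.DataLoader`, which pads each batch only to that batch's longest sequence. This is a sketch assuming pre-tokenized 1-D tensors and a pad ID of 0; `collate_batch` and `PAD_ID` are hypothetical names. The returned keys follow the `input_ids` / `attention_mask` naming that Hugging Face BERT and GPT-2 models accept as forward arguments:

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader

PAD_ID = 0  # assumption: the tokenizer's pad token ID is 0

def collate_batch(batch):
    """Pad a list of 1-D token-ID tensors and build the matching attention mask."""
    input_ids = pad_sequence(batch, batch_first=True, padding_value=PAD_ID)
    lengths = torch.tensor([len(x) for x in batch])
    positions = torch.arange(input_ids.size(1))
    attention_mask = (positions[None, :] < lengths[:, None]).long()
    return {"input_ids": input_ids, "attention_mask": attention_mask}

# Illustrative pre-tokenized dataset; a plain list works as a map-style dataset
data = [
    torch.tensor([101, 7, 8, 102]),
    torch.tensor([101, 9, 102]),
    torch.tensor([101, 102]),
]
loader = DataLoader(data, batch_size=2, shuffle=False, collate_fn=collate_batch)
batches = list(loader)

for b in batches:
    print(b["input_ids"].tolist(), b["attention_mask"].tolist())
```

Because padding happens per batch, the second batch here is only two tokens wide instead of being padded to the longest sequence in the whole dataset.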

Using these methods together, you can batch and pad variable-length sequences efficiently in transformers.
