You can optimize GAN training for high-resolution image generation by following the strategies below:

- Progressive growing: Start training on low-resolution images and gradually increase the resolution as training progresses.

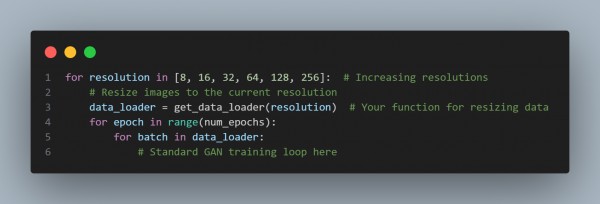

The code for this step is shown in the image above.

In the code above, data_loader resizes the input images and increases their resolution in stages. This lets the GAN learn simple, low-resolution features first before moving on to finer, high-resolution details.
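As a minimal PyTorch sketch of progressive growing (not the code from the image), the block below assumes the dataset is already loaded as a tensor of full-resolution images. `make_loader` is a hypothetical stand-in for the data_loader described above, and `resolution_schedule`, `fade_in_alpha`, and `blend` are illustrative names. The `blend` helper shows the ProGAN-style fade-in, where the output of a newly added higher-resolution layer is mixed in gradually instead of switched on at once.

```python
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset


def resolution_schedule(start=4, target=64):
    """Resolutions to train at, doubling from `start` up to `target`."""
    resolutions = []
    res = start
    while res <= target:
        resolutions.append(res)
        res *= 2
    return resolutions


def make_loader(images, resolution, batch_size=16):
    """Hypothetical data loader: downsample full-resolution images to the stage resolution."""
    # "area" interpolation averages neighboring pixels, which suits downsampling
    resized = F.interpolate(images, size=(resolution, resolution), mode="area")
    return DataLoader(TensorDataset(resized), batch_size=batch_size, shuffle=True)


def fade_in_alpha(step, fade_steps):
    """Weight of the newly added high-resolution layer; ramps linearly from 0 to 1."""
    return min(1.0, step / fade_steps)


def blend(prev_rgb, new_rgb, alpha):
    """Fade-in: mix the upsampled previous-stage output with the new stage's output."""
    upsampled = F.interpolate(prev_rgb, scale_factor=2, mode="nearest")
    return (1 - alpha) * upsampled + alpha * new_rgb


if __name__ == "__main__":
    full_res = torch.rand(64, 3, 64, 64)  # random stand-in for a real image dataset
    for res in resolution_schedule(4, 64):
        loader = make_loader(full_res, res)
        (batch,) = next(iter(loader))
        print(res, tuple(batch.shape))  # the models for this stage would train here
```

Running it prints each stage's resolution and batch shape, starting with `4 (16, 3, 4, 4)`. In a full implementation, the generator and discriminator would also gain a layer at each new stage, with `fade_in_alpha` ramping during the first steps of that stage.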

- Mixed precision training: Run suitable operations in 16-bit floating point (while keeping the weights in 32-bit) to reduce memory usage and speed up training.

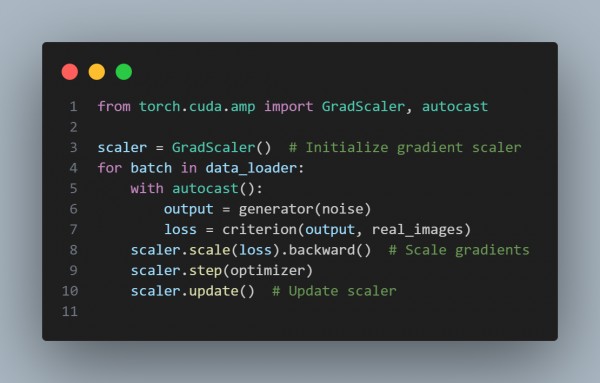

The image above shows how you can do it.

In the code above, mixed precision training reduces memory usage and speeds up GAN training by running many operations in half precision, while dynamic gradient (loss) scaling keeps small gradients from underflowing to zero.
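A minimal sketch of one mixed precision GAN training step in PyTorch, using `torch.autocast` and a `GradScaler`. The toy fully connected generator and discriminator, the learning rates, and the `train_step` name are assumptions for illustration. Autocast and loss scaling are enabled only when a CUDA GPU is available, so on a CPU the same code runs in ordinary full precision.

```python
import torch
import torch.nn as nn

# Use FP16 autocast and loss scaling only on a CUDA GPU; on CPU this sketch
# falls back to ordinary FP32 training with the scaler disabled.
device = "cuda" if torch.cuda.is_available() else "cpu"
use_amp = device == "cuda"
amp_dtype = torch.float16 if use_amp else torch.bfloat16

# Toy generator and discriminator (hypothetical stand-ins for real models)
G = nn.Sequential(nn.Linear(16, 64), nn.ReLU(), nn.Linear(64, 32)).to(device)
D = nn.Sequential(nn.Linear(32, 64), nn.LeakyReLU(0.2), nn.Linear(64, 1)).to(device)
opt_g = torch.optim.Adam(G.parameters(), lr=1e-4, betas=(0.0, 0.99))
opt_d = torch.optim.Adam(D.parameters(), lr=1e-4, betas=(0.0, 0.99))
bce = nn.BCEWithLogitsLoss()

# Separate scalers let each network's loss scale adapt independently
scaler_g = torch.cuda.amp.GradScaler(enabled=use_amp)
scaler_d = torch.cuda.amp.GradScaler(enabled=use_amp)


def train_step(real):
    """Run one discriminator update and one generator update."""
    z = torch.randn(real.size(0), 16, device=device)

    # Discriminator: forward pass under autocast, backward on the scaled loss
    opt_d.zero_grad(set_to_none=True)
    with torch.autocast(device_type=device, dtype=amp_dtype, enabled=use_amp):
        fake = G(z)
        d_real = D(real)
        d_fake = D(fake.detach())
        loss_d = bce(d_real, torch.ones_like(d_real)) + bce(d_fake, torch.zeros_like(d_fake))
    scaler_d.scale(loss_d).backward()
    scaler_d.step(opt_d)  # unscales gradients; skips the step if they contain inf/NaN
    scaler_d.update()     # adjusts the scale factor for the next iteration

    # Generator: non-saturating loss, i.e. push D to label fakes as real
    opt_g.zero_grad(set_to_none=True)
    with torch.autocast(device_type=device, dtype=amp_dtype, enabled=use_amp):
        d_fake = D(fake)
        loss_g = bce(d_fake, torch.ones_like(d_fake))
    scaler_g.scale(loss_g).backward()
    scaler_g.step(opt_g)
    scaler_g.update()
    return loss_d.item(), loss_g.item()


real_batch = torch.randn(8, 32, device=device)  # stand-in for a batch of real data
print(train_step(real_batch))
```

On recent PyTorch versions, `torch.amp.GradScaler("cuda", ...)` is the preferred spelling of the same scaler; the older `torch.cuda.amp.GradScaler` still works but may emit a deprecation warning.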

- Feature matching loss: Stabilize training by having the generator match the intermediate discriminator features of generated images to those of real images.

- Two Time-Scale Update Rule (TTUR): Use different learning rates for the generator and discriminator (typically a higher one for the discriminator) to help training converge more reliably.

- Pixel normalization: After each convolutional layer in the generator, normalize the feature vector at every pixel across channels to keep activation magnitudes under control and improve stability.
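The feature matching loss from the list above can be sketched in PyTorch in the spirit of Salimans et al. (2016): the generator is trained to match the batch mean of intermediate discriminator activations on real and generated samples. The toy `Discriminator` and the `feature_matching_loss` helper are hypothetical; real models usually collect features from convolutional blocks, and some implementations use an L1 distance per layer instead of the mean-squared distance used here.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Discriminator(nn.Module):
    """Toy discriminator that returns its logit and its intermediate features."""

    def __init__(self, in_dim=32, hidden=64):
        super().__init__()
        self.block1 = nn.Sequential(nn.Linear(in_dim, hidden), nn.LeakyReLU(0.2))
        self.block2 = nn.Sequential(nn.Linear(hidden, hidden), nn.LeakyReLU(0.2))
        self.head = nn.Linear(hidden, 1)

    def forward(self, x):
        f1 = self.block1(x)
        f2 = self.block2(f1)
        return self.head(f2), [f1, f2]


def feature_matching_loss(real_feats, fake_feats):
    """Squared distance between batch-mean features of real and generated samples.

    Real features are detached, so no gradients flow back through the real branch.
    """
    return sum(
        F.mse_loss(fake.mean(dim=0), real.detach().mean(dim=0))
        for real, fake in zip(real_feats, fake_feats)
    )


D = Discriminator()
real = torch.randn(8, 32)
fake = torch.randn(8, 32, requires_grad=True)  # stands in for G(z)
_, real_feats = D(real)
_, fake_feats = D(fake)
loss_g = feature_matching_loss(real_feats, fake_feats)
loss_g.backward()  # in training, only the generator's optimizer would step on this loss
```

Because the generator chases feature statistics rather than the discriminator's final output, it is less likely to overfit to a single discriminator decision, which is where the stabilizing effect comes from.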
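TTUR from the list above amounts to giving the two networks separate optimizers with different learning rates. In this minimal PyTorch sketch, `lr_g=1e-4` and `lr_d=4e-4` with `betas=(0.0, 0.9)` follow the setting popularized by SAGAN and are an assumption, not a requirement; the hinge loss, the toy networks, and the `make_ttur_optimizers` name are also illustrative choices.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def make_ttur_optimizers(generator, discriminator, lr_g=1e-4, lr_d=4e-4, betas=(0.0, 0.9)):
    """Two Time-Scale Update Rule: separate Adam optimizers with different learning rates."""
    opt_g = torch.optim.Adam(generator.parameters(), lr=lr_g, betas=betas)
    opt_d = torch.optim.Adam(discriminator.parameters(), lr=lr_d, betas=betas)
    return opt_g, opt_d


# Toy networks (hypothetical stand-ins for a real generator and discriminator)
G = nn.Sequential(nn.Linear(16, 64), nn.ReLU(), nn.Linear(64, 32))
D = nn.Sequential(nn.Linear(32, 64), nn.LeakyReLU(0.2), nn.Linear(64, 1))
opt_g, opt_d = make_ttur_optimizers(G, D)

# One iteration: a single D update and a single G update. The higher D learning
# rate is meant to make this 1:1 ratio work instead of several D steps per G step.
real = torch.randn(8, 32)
z = torch.randn(8, 16)

# Discriminator step (hinge loss)
opt_d.zero_grad()
loss_d = F.relu(1 - D(real)).mean() + F.relu(1 + D(G(z).detach())).mean()
loss_d.backward()
opt_d.step()

# Generator step
opt_g.zero_grad()
loss_g = -D(G(z)).mean()
loss_g.backward()
opt_g.step()
```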
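Pixel normalization, as introduced in ProGAN (Karras et al.), divides each pixel's C-dimensional feature vector a by its root mean square across channels: b = a / sqrt((1/C) * sum_j a_j^2 + eps), with eps = 1e-8. A minimal PyTorch sketch follows; the example `block` placing it after a convolution and activation is an illustrative assumption about layer sizes.

```python
import torch
import torch.nn as nn


class PixelNorm(nn.Module):
    """Pixelwise feature vector normalization: unit mean square across channels per pixel."""

    def __init__(self, eps=1e-8):
        super().__init__()
        self.eps = eps

    def forward(self, x):
        # x: (N, C, H, W); the mean is taken over the channel dimension at every (h, w)
        return x * torch.rsqrt(x.pow(2).mean(dim=1, keepdim=True) + self.eps)


# Example generator block: conv -> activation -> pixel norm
block = nn.Sequential(
    nn.Conv2d(64, 64, kernel_size=3, padding=1),
    nn.LeakyReLU(0.2),
    PixelNorm(),
)
```

Unlike batch normalization, this has no learned parameters and no dependence on other samples in the batch; it only stops feature magnitudes from growing out of control as the generator and discriminator compete.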

By using these strategies, you can improve the stability and efficiency of GAN training and the quality of the high-resolution images it produces.

