To address issues with LLM-specific (model-based) metrics in the Google Cloud Vertex AI evaluation framework, make sure you have the necessary IAM permissions, enable the Vertex AI API (`aiplatform.googleapis.com`), and configure the evaluation service to use an appropriate judge model, such as Gemini.
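The first two prerequisites usually come down to a couple of `gcloud` commands. Below is a minimal sketch, written in Python, that builds those commands, prints them, and runs them only when asked. It assumes the gcloud CLI is installed and that you are allowed to change the project's IAM policy. It also assumes `roles/aiplatform.user` is enough for your use case; reading or writing evaluation data in Cloud Storage may need storage roles as well. `setup_commands` and `run` are hypothetical helper names.

```python
"""Sketch: Vertex AI evaluation prerequisites expressed as gcloud commands.

Assumes the gcloud CLI is installed and you may change IAM policy on the
project. The project ID and member passed in are placeholders.
"""
import shlex
import subprocess
from typing import List


def setup_commands(project_id: str, member: str) -> List[List[str]]:
    """Build commands that enable the Vertex AI API and grant a role.

    member uses gcloud's format, e.g. "user:alice@example.com" or
    "serviceAccount:eval@my-proj.iam.gserviceaccount.com".
    """
    return [
        # Enable the Vertex AI API; the evaluation service is served through it.
        ["gcloud", "services", "enable", "aiplatform.googleapis.com",
         f"--project={project_id}"],
        # Grant the Vertex AI User role so the member can call the API.
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member={member}", "--role=roles/aiplatform.user"],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

For example, `run(setup_commands("my-proj", "user:alice@example.com"), dry_run=False)` applies both changes. Running `gcloud auth application-default login` afterwards lets the Python SDK pick up your credentials locally.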

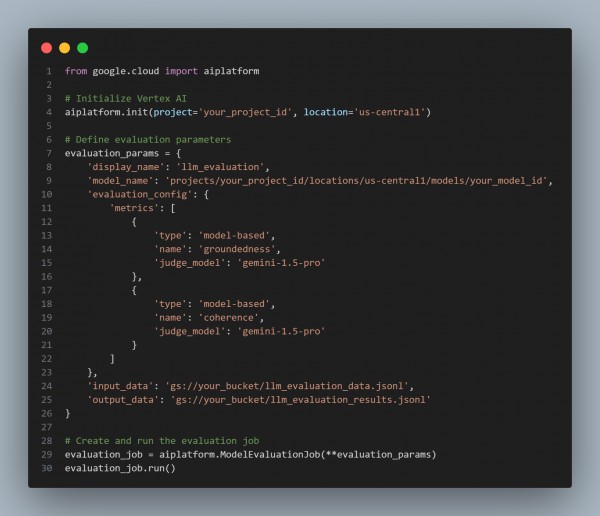

The code snippet in the image above covers the following key points:

- Initialization: Sets up the Vertex AI environment with the specified project and location.
- Evaluation parameters:
    - Specifies the display name and the model to be evaluated.
    - Defines the evaluation configuration with metrics such as `groundedness` and `coherence`, using `gemini-1.5-pro` as the judge model.
    - Sets the input and output data paths in Google Cloud Storage.
- Evaluation job: Creates and runs the model evaluation job with the defined parameters.
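Because the snippet is only available as an image, here is a minimal sketch of a comparable setup using the Gen AI evaluation SDK that ships with `google-cloud-aiplatform` (`vertexai.evaluation`). It is not a transcription of the snippet above. Assumptions: the project ID, region, and data are placeholders; the responses already exist (bring-your-own-response), so no model is passed to `evaluate()`; and the service's default judge model is used rather than pinning `gemini-1.5-pro`, since whether and how the judge can be configured depends on your SDK version. `build_dataset` and `run_evaluation` are hypothetical helper names.

```python
"""Sketch: model-based (LLM-judged) metrics with Vertex AI's Gen AI evaluation SDK.

Assumes google-cloud-aiplatform and pandas are installed, Application Default
Credentials are configured, and the Vertex AI API is enabled.
PROJECT_ID and LOCATION are placeholders; the helpers are hypothetical.
"""
from typing import Dict, List

PROJECT_ID = "your-project-id"  # placeholder
LOCATION = "us-central1"        # placeholder region


def build_dataset(prompts: List[str], responses: List[str]) -> Dict[str, List[str]]:
    """Pair each prompt with the response to be judged.

    "prompt" and "response" are the SDK's default column names. For
    groundedness, this sketch assumes the source context is included
    inside each prompt so the judge can check the response against it.
    """
    if len(prompts) != len(responses):
        raise ValueError("prompts and responses must have the same length")
    if not prompts:
        raise ValueError("dataset must contain at least one row")
    return {"prompt": list(prompts), "response": list(responses)}


def run_evaluation(dataset: Dict[str, List[str]]) -> dict:
    """Score existing responses for groundedness and coherence."""
    # Deferred imports: build_dataset stays usable without the SDK installed.
    import pandas as pd
    import vertexai
    from vertexai.evaluation import EvalTask, MetricPromptTemplateExamples

    vertexai.init(project=PROJECT_ID, location=LOCATION)
    task = EvalTask(
        dataset=pd.DataFrame(dataset),
        metrics=[
            MetricPromptTemplateExamples.Pointwise.GROUNDEDNESS,
            MetricPromptTemplateExamples.Pointwise.COHERENCE,
        ],
    )
    # No model argument: the responses already in the dataset are judged.
    result = task.evaluate()
    return result.summary_metrics
```

Calling `run_evaluation(build_dataset(prompts, responses))` returns the aggregate scores for each metric. When a metric looks wrong, the per-row scores and the judge's explanations in `result.metrics_table` (not returned by this helper) are usually the quickest place to see why.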

In short, by configuring the evaluation parameters appropriately and using a judge model such as Gemini, you can address most issues with LLM-specific metrics in the Vertex AI evaluation framework.