You can fix an embedding mismatch in an AI code reviewer integrated with GitHub Actions by ensuring consistent model-tokenizer pairing, normalizing text inputs, using fixed embedding dimensions, and caching model weights correctly.

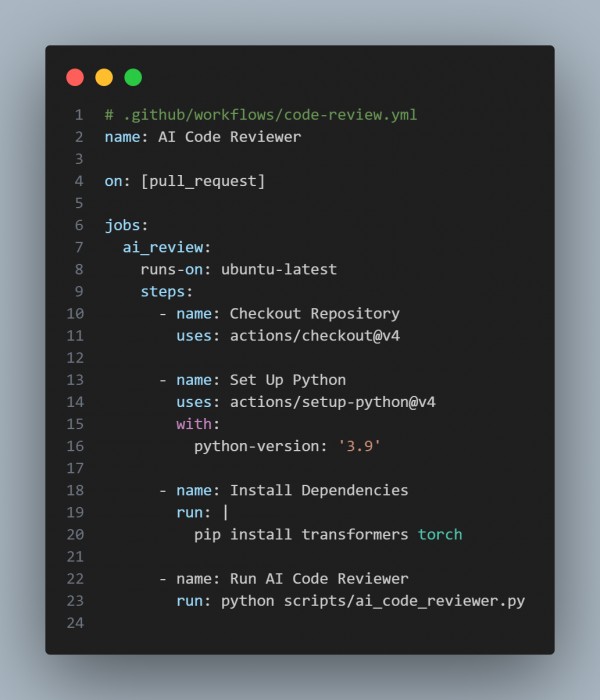

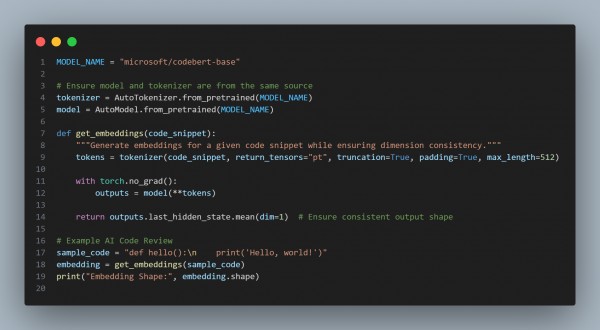

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Consistent Model-Tokenizer Pairing: Loads both the tokenizer and the model from the same MODEL_NAME.

- Text Normalization: Pads and truncates tokenized inputs so every sequence has a consistent length.

- Fixed Embedding Dimensions: Uses mean(dim=1) to pool token embeddings over the sequence dimension, so every input yields a vector of the same size regardless of its length.

- GitHub Actions Integration: Automates AI review on pull requests.

- Dependency Caching: Caches dependencies and model weights so every workflow run uses the same model version, which prevents mismatches caused by a changed or outdated model.
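
To make the pooling and caching points concrete, here is a minimal, dependency-free sketch. It is not the snippet from this answer: plain Python lists stand in for model tensors, and the names `normalize_text`, `masked_mean_pool`, `check_dimension`, `cache_key`, and `EXPECTED_DIM` are hypothetical. One detail worth noting is that a plain mean over the sequence dimension also averages the vectors at padded positions, so this sketch assumes you skip them using the attention mask.

```python
import hashlib
import unicodedata
from typing import List, Sequence

# Hypothetical embedding width for this sketch; real models often use 384 or 768.
EXPECTED_DIM = 4


def normalize_text(text: str) -> str:
    """Apply Unicode NFC normalization and unify line endings before tokenizing."""
    text = unicodedata.normalize("NFC", text)
    return text.replace("\r\n", "\n").replace("\r", "\n")


def masked_mean_pool(token_vectors: Sequence[Sequence[float]],
                     attention_mask: Sequence[int]) -> List[float]:
    """Average token vectors over the sequence axis, skipping padded positions."""
    if len(token_vectors) != len(attention_mask):
        raise ValueError("need exactly one mask entry per token vector")
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention mask excludes every token")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


def check_dimension(embedding: Sequence[float],
                    expected_dim: int = EXPECTED_DIM) -> None:
    """Fail fast when an embedding does not have the expected width."""
    if len(embedding) != expected_dim:
        raise ValueError(
            f"expected {expected_dim} dimensions, got {len(embedding)}"
        )


def cache_key(model_name: str, revision: str) -> str:
    """Derive a key that changes whenever the model name or revision changes."""
    digest = hashlib.sha256(f"{model_name}@{revision}".encode("utf-8"))
    return digest.hexdigest()[:16]


if __name__ == "__main__":
    # Three token vectors; the last one is padding and is masked out.
    vectors = [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0], [9.0, 9.0, 9.0, 9.0]]
    mask = [1, 1, 0]
    embedding = masked_mean_pool(vectors, mask)
    check_dimension(embedding)
    print(embedding)
```

Running the script prints `[2.0, 3.0, 4.0, 5.0]`: the padded third vector does not affect the result. A key like the one from `cache_key` could be used to name the cached weights in the workflow, so that changing the model or its revision invalidates the old cache instead of silently reusing it.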

Hence, resolving embedding mismatches in an AI-powered GitHub Actions code reviewer requires consistent tokenizer-model pairing, input normalization, and fixed embedding dimensions to ensure reliable and reproducible AI evaluations.
