You can prevent data leakage in AI-powered IDEs by implementing strict access controls, in-memory processing, secure logging, and masked prompt handling.

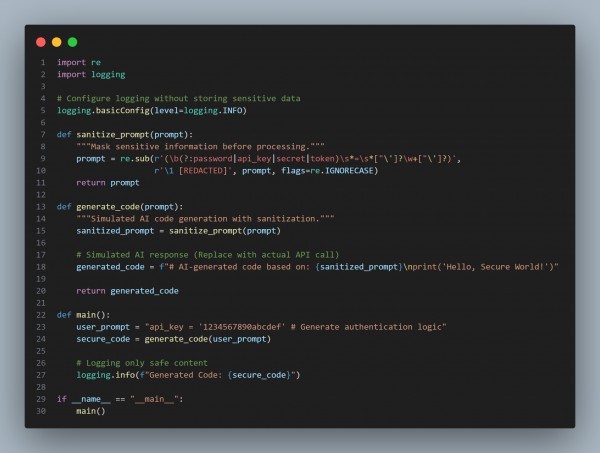

Here is the code snippet you can refer to:

In the above code, we are using the following key points:

- Sensitive Data Masking: Uses regex to redact API keys, passwords, and secrets.

- In-Memory Processing: Prevents unnecessary data storage.

- Secure Logging: Avoids logging raw user input to prevent leaks.

- Real-Time AI Integration Ready: Uses a simulated AI response as a safe placeholder for a real-time model call.
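The key points above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a definitive implementation: the regex `SECRET_PATTERN`, the helper names `mask_sensitive`, `fake_ai_complete`, and `handle_prompt`, and the `[REDACTED]` placeholder are all hypothetical, and a real integration would likely need a broader, tested set of detection rules.

```python
import logging
import re
from typing import Tuple

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("ide_assistant")  # hypothetical logger name

# Assumed pattern: catches simple `name = value` / `name: value` pairs for a few
# common secret names. It is illustrative only and will miss many real formats.
SECRET_PATTERN = re.compile(
    r"""(?i)(api[_-]?key|password|secret|token)(\s*[:=]\s*)(["']?)([^\s"']+)(["']?)"""
)


def mask_sensitive(text: str) -> Tuple[str, int]:
    """Redact secret values; return the masked text and the number of redactions."""
    return SECRET_PATTERN.subn(r"\1\2\3[REDACTED]\5", text)


def fake_ai_complete(prompt: str) -> str:
    """Stand-in for a real model call; it only ever receives the masked prompt."""
    return f"Suggestion for: {prompt}"


def handle_prompt(raw: str) -> str:
    # Everything happens in memory: nothing is written to disk or cached.
    masked, redactions = mask_sensitive(raw)
    # Log metadata only, never the raw (or even the masked) prompt text.
    logger.info("prompt handled: %d chars, %d redactions", len(masked), redactions)
    return fake_ai_complete(masked)


if __name__ == "__main__":
    print(handle_prompt('api_key = "sk-12345"\npassword: hunter2'))
```

Logging only the length and the redaction count keeps the log useful for debugging while keeping user input out of it. Swapping `fake_ai_complete` for a real client would not change the masking or logging path.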

Hence, securing AI-powered IDE integrations requires real-time input sanitization, encrypted processing, and strict logging policies to prevent unintentional data exposure.
