To deploy fine-tuned models for real-time chatbots efficiently, combine model quantization (where you host the model yourself), response caching, and asynchronous API calls to keep latency low without sacrificing accuracy.

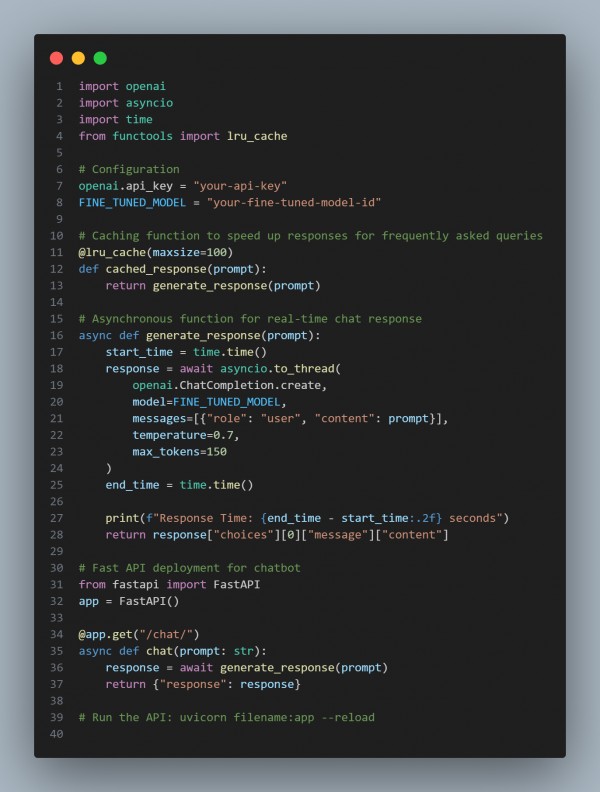

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Asynchronous Processing – Uses asyncio to handle multiple requests concurrently, so one slow model call does not block the others.

- Response Caching – Uses lru_cache to store responses to frequently repeated queries, avoiding redundant model calls.

- Fine-Tuned Model Deployment – Calls an OpenAI fine-tuned model to produce customized chatbot responses.

- FastAPI Integration – Provides a lightweight, high-performance API for real-time interaction.

- Scalability – Non-blocking request handling and caching together let the service absorb more concurrent traffic while keeping latency low.
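The async and caching points can be sketched with the standard library alone. This is a minimal illustration under stated assumptions, not the code from the screenshot: `call_model` is a hypothetical stand-in for the request to the fine-tuned model, and `AsyncResponseCache` and `handle_batch` are names made up for this example. It uses a small hand-rolled LRU cache because `functools.lru_cache` applied directly to an `async def` function caches the coroutine object rather than the awaited result.

```python
import asyncio
from collections import OrderedDict


async def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a request to a fine-tuned model."""
    await asyncio.sleep(0.05)  # simulate network and inference latency
    return f"reply to: {prompt}"


class AsyncResponseCache:
    """Small LRU cache that stores awaited results, not coroutine objects."""

    def __init__(self, max_size: int = 128):
        self._entries = OrderedDict()
        self._max_size = max_size
        self.hits = 0
        self.misses = 0

    def __len__(self) -> int:
        return len(self._entries)

    async def get_or_compute(self, prompt: str, compute) -> str:
        # Normalize so trivially different spellings share one entry.
        key = prompt.strip().lower()
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        reply = await compute(prompt)
        self._entries[key] = reply
        if len(self._entries) > self._max_size:
            self._entries.popitem(last=False)  # evict least recently used
        return reply


async def handle_batch(cache: AsyncResponseCache, prompts: list) -> list:
    """Serve several prompts concurrently instead of one after another."""
    tasks = (cache.get_or_compute(p, call_model) for p in prompts)
    return list(await asyncio.gather(*tasks))


if __name__ == "__main__":
    cache = AsyncResponseCache(max_size=2)
    print(asyncio.run(handle_batch(cache, ["Hello", "Pricing?"])))
    print(asyncio.run(handle_batch(cache, ["hello ", "Pricing?"])))
    print(f"hits={cache.hits} misses={cache.misses}")
```

In a FastAPI service, the body of an `async def` route handler could await `cache.get_or_compute(...)` in the same way. Note that this sketch does not deduplicate identical prompts that arrive at the same moment; both would miss the cache and call the model.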

Hence, deploying fine-tuned models for real-time chatbots with asynchronous execution, caching, and optimized API handling keeps response times low without compromising response quality.
