Yes, LangChain supports multi-turn conversations that follow a prescribed call script, which makes it a good fit for interactive coding assistants.

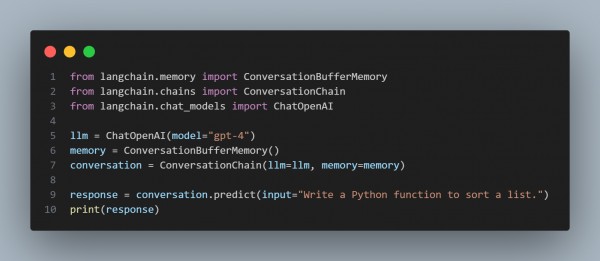

Here is the code snippet you can refer to:

In the above code, we are using the following key approaches:

- Memory Management: Stores conversation context for smooth multi-turn interactions.

- Customizable Call-Scripts: Enables structured dialogues for coding assistance.

- Integration with APIs: Supports model providers such as OpenAI and Anthropic, as well as local models.

- Chain & Agent Support: Allows for dynamic and reactive workflows.

- Prompt Engineering: Enhances interactions with structured templates.
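
To make the memory and call-script ideas concrete, here is a minimal, library-agnostic sketch in plain Python. It is not LangChain's API and not the code from the snippet; `ScriptedAssistant`, `fake_llm`, and the three script steps are hypothetical names chosen for illustration, and the script is assumed to be non-empty. In a real setup, the `llm` callable would be replaced by a chat model call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, content)


@dataclass
class ScriptedAssistant:
    """Walks a fixed list of script steps while keeping the full history."""

    llm: Callable[[List[Message]], str]  # hypothetical model callable
    script: List[str]                    # prescribed instruction per turn (non-empty)
    history: List[Message] = field(default_factory=list)
    step: int = 0

    def ask(self, user_input: str) -> str:
        # Call script: pick the instruction for this turn and stay on the
        # last step once the script is exhausted.
        instruction = self.script[min(self.step, len(self.script) - 1)]
        messages = [("system", instruction), *self.history, ("user", user_input)]
        reply = self.llm(messages)
        # Memory: store both sides of the turn so later turns see them.
        self.history.append(("user", user_input))
        self.history.append(("assistant", reply))
        self.step += 1
        return reply


def fake_llm(messages: List[Message]) -> str:
    """Stand-in for a real model: reports the active step and context size."""
    system_instruction = messages[0][1]
    return f"[{system_instruction}] seen {len(messages) - 1} message(s)"


assistant = ScriptedAssistant(
    llm=fake_llm,
    script=["Clarify the task", "Propose code", "Review the code"],
)
first = assistant.ask("I need a function to parse CSV.")
second = assistant.ask("It should skip blank lines.")
print(first)
print(second)
```

The second reply is produced under the second script step and sees the earlier turn, which is the combination of prescribed flow and conversation memory described in the list above.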

Hence, LangChain is an effective framework for building structured, multi-turn coding assistants with prescribed dialogue flows.
