If a GAN still produces low-quality outputs after fine-tuning, you can try the following:

- Review Data Preprocessing: Ensure proper normalization and augmentation of real-world data.

- Enhance Model Architecture: Use advanced layers like residual blocks or attention mechanisms.

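- Adjust Training Dynamics: Balance generator and discriminator updates (for example, the number of discriminator steps per generator step and the two learning rates) so that neither network overpowers the other.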

- Regularization: Add gradient penalties (e.g., WGAN-GP) or noise to stabilize training.

- Increase Training Data Quality/Quantity: Include more diverse and high-quality samples.
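As a minimal sketch of the preprocessing item above, assume the images arrive as a NumPy `uint8` array of shape `(N, H, W, C)` and that the generator ends in `tanh`. The hypothetical helper `preprocess_batch` scales pixels to [-1, 1] and applies a random horizontal flip as a simple augmentation. The function name, array layout, and flip probability are illustrative assumptions.

```python
import numpy as np


def preprocess_batch(
    images: np.ndarray,
    rng: np.random.Generator,
    flip_prob: float = 0.5,  # assumed value, tune for your dataset
) -> np.ndarray:
    """Scale uint8 images (N, H, W, C) to [-1, 1] and randomly flip some horizontally."""
    # [0, 255] -> [-1, 1], the output range of a tanh generator
    scaled = images.astype(np.float32) / 127.5 - 1.0
    # Choose which images in the batch get mirrored along the width axis
    flip = rng.random(scaled.shape[0]) < flip_prob
    scaled[flip] = scaled[flip][:, :, ::-1, :]
    return scaled
```

Horizontal flips only make sense when mirrored images are still valid samples (faces or landscapes, for instance, but not text).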

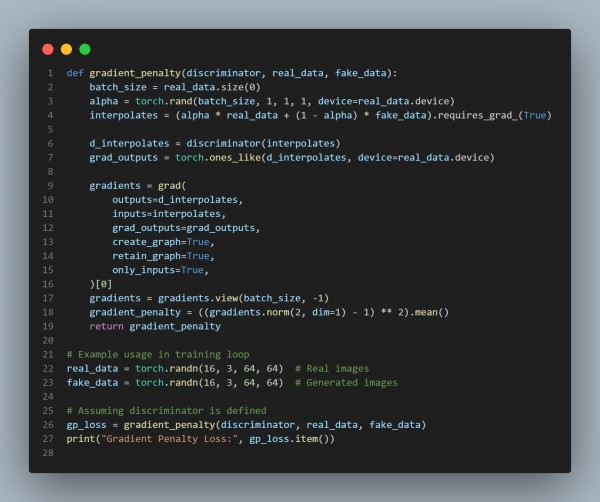

Here is the code snippet you can refer to:
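A minimal PyTorch sketch of these ideas follows. It is an illustration under stated assumptions rather than a tuned implementation: `ResidualBlock`, `Critic`, `normalize_images`, `gradient_penalty`, and `critic_loss` are hypothetical names, the layer sizes are arbitrary, and the penalty weight of 10 is the value commonly used with WGAN-GP.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Two 3x3 convolutions with a skip connection (channel count unchanged)."""

    def __init__(self, channels: int):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1),
            nn.LeakyReLU(0.2),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1),
        )

    def forward(self, x):
        return x + self.body(x)


class Critic(nn.Module):
    """Small illustrative critic; batch norm is left out, as is usual with WGAN-GP."""

    def __init__(self, in_channels: int = 3, width: int = 32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(in_channels, width, kernel_size=3, padding=1),
            ResidualBlock(width),
            nn.LeakyReLU(0.2),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Linear(width, 1),
        )

    def forward(self, x):
        return self.net(x)


def normalize_images(uint8_batch: torch.Tensor) -> torch.Tensor:
    """Map uint8 pixels in [0, 255] to floats in [-1, 1]."""
    return uint8_batch.float() / 127.5 - 1.0


def gradient_penalty(critic: nn.Module, real: torch.Tensor, fake: torch.Tensor) -> torch.Tensor:
    """WGAN-GP term: mean of (||grad critic(x_hat)||_2 - 1)^2 on interpolated samples."""
    # One random mixing coefficient per sample, broadcast over C, H, W
    eps = torch.rand(real.size(0), 1, 1, 1, device=real.device)
    x_hat = (eps * real + (1.0 - eps) * fake.detach()).requires_grad_(True)
    scores = critic(x_hat)
    grads = torch.autograd.grad(
        outputs=scores,
        inputs=x_hat,
        grad_outputs=torch.ones_like(scores),
        create_graph=True,  # keep the graph so the penalty itself can be backpropagated
    )[0]
    grad_norm = grads.flatten(start_dim=1).norm(2, dim=1)
    return ((grad_norm - 1.0) ** 2).mean()


def critic_loss(critic: nn.Module, real: torch.Tensor, fake: torch.Tensor,
                gp_weight: float = 10.0) -> torch.Tensor:
    """Wasserstein critic loss plus the weighted gradient penalty."""
    fake = fake.detach()  # do not update the generator in the critic step
    wasserstein = critic(fake).mean() - critic(real).mean()
    return wasserstein + gp_weight * gradient_penalty(critic, real, fake)
```

In a WGAN-GP training loop, the critic is typically updated several times per generator step with `critic_loss`, while the generator minimizes `-critic(fake).mean()`.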

The code above relies on the following key points:

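- Gradient Penalty: Stabilizes training by penalizing the discriminator (critic) when the norm of its gradient with respect to its input deviates from 1, which softly enforces a 1-Lipschitz constraint.

- Architecture Improvements: Residual blocks or attention layers ease gradient flow through deeper networks and help the GAN learn more robust representations.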

- Data Preprocessing: Ensures the data aligns well with the model's expectations.

Hence, these methods collectively address low-quality outputs and refine the GAN's performance.
