To handle multi-class imbalance in Conditional GANs (cGANs) while still generating realistic images for specific categories, you can combine the following techniques:

- Weighted Loss Function: Assign higher weights to underrepresented classes in the loss function to balance the impact of each class during training.

- Class Balancing in Mini-batches: Ensure that each mini-batch contains a balanced number of samples from each class to avoid bias towards majority classes.

- Conditional Label Smoothing: Apply label smoothing to the conditioning labels (that is, slightly soften the one-hot vectors), which can make the model more robust to class imbalance.

- Augmentation: Use data augmentation techniques to generate additional training samples for underrepresented classes.

- Class-specific Discriminators: Use a separate discriminator for each class, or a single multi-class discriminator, to handle class-wise imbalance more effectively.
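As a small illustration of the first and third points, here is a minimal sketch in plain Python. It assumes inverse-frequency weighting (one common choice, not the only one) and uniform label smoothing; the helper names `inverse_frequency_weights` and `smoothed_one_hot` and the toy label counts are made up for this example.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by N / (K * count), so rarer classes get larger weights."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * cnt) for c, cnt in counts.items()}


def smoothed_one_hot(label, num_classes, eps=0.1):
    """One-hot vector with a fraction eps of its mass spread uniformly over all classes."""
    off_value = eps / num_classes
    return [1.0 - eps + off_value if i == label else off_value
            for i in range(num_classes)]


# Toy, heavily imbalanced label list (hypothetical data): 90 / 9 / 1 samples.
labels = [0] * 90 + [1] * 9 + [2] * 1
weights = inverse_frequency_weights(labels)
print(weights)                 # the rarest class (2) receives the largest weight
print(smoothed_one_hot(2, 3))  # softened conditioning vector for class 2
```

The weights can then be passed to a per-sample loss, and the smoothed vectors can replace hard one-hot labels as the conditioning input.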

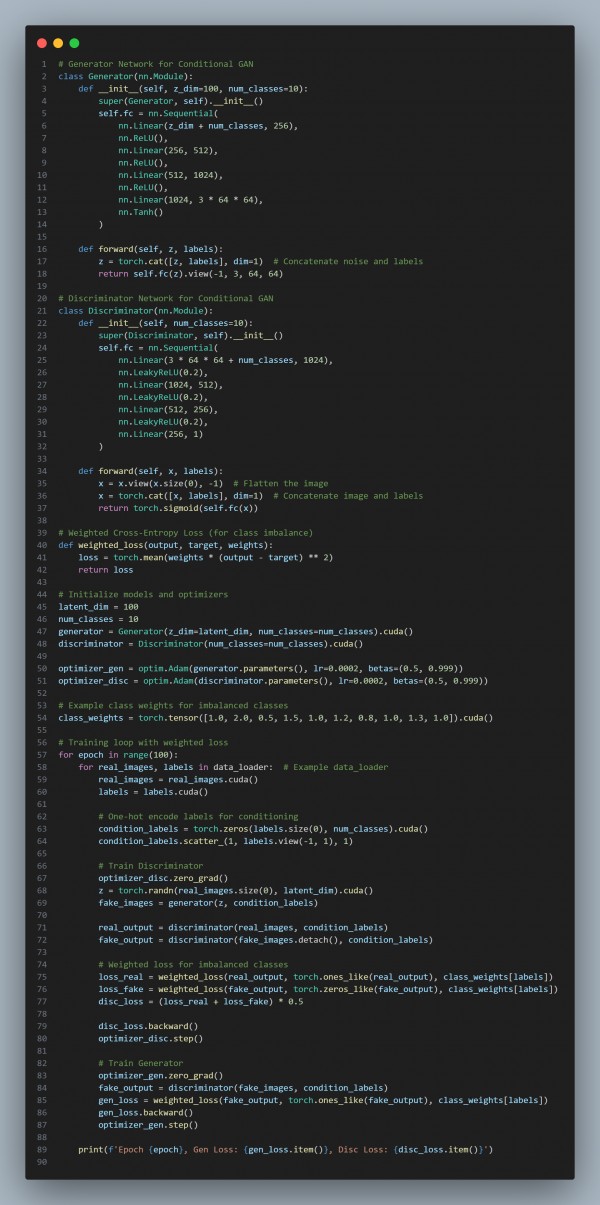

Here are the code snippets you can refer to:
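The following is a minimal sketch, assuming PyTorch, of what a class-weighted cGAN training step could look like. The tiny fully connected networks, the sizes (`NUM_CLASSES`, `LATENT_DIM`, `IMG_DIM`), the class counts, and the helper names `weighted_bce` and `train_step` are illustrative assumptions, not a reference implementation.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

NUM_CLASSES, LATENT_DIM, IMG_DIM = 3, 16, 64  # illustrative sizes (assumptions)


class Generator(nn.Module):
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(LATENT_DIM + NUM_CLASSES, 128), nn.ReLU(),
            nn.Linear(128, IMG_DIM), nn.Tanh(),
        )

    def forward(self, z, y_onehot):
        # Condition on the class by concatenating the one-hot label to the noise.
        return self.net(torch.cat([z, y_onehot], dim=1))


class Discriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(IMG_DIM + NUM_CLASSES, 128), nn.LeakyReLU(0.2),
            nn.Linear(128, 1),
        )

    def forward(self, x, y_onehot):
        # Returns one real/fake logit per sample.
        return self.net(torch.cat([x, y_onehot], dim=1)).squeeze(1)


def weighted_bce(logits, targets, labels, class_weights):
    """Per-sample BCE scaled by the weight of each sample's class."""
    per_sample = F.binary_cross_entropy_with_logits(logits, targets, reduction="none")
    w = class_weights[labels]
    return (w * per_sample).sum() / w.sum()


def train_step(G, D, opt_g, opt_d, real, labels, class_weights):
    y = F.one_hot(labels, NUM_CLASSES).float()
    batch = real.size(0)
    ones, zeros = torch.ones(batch), torch.zeros(batch)
    fake = G(torch.randn(batch, LATENT_DIM), y)

    # Discriminator update: real samples -> 1, generated samples -> 0.
    d_loss = (weighted_bce(D(real, y), ones, labels, class_weights)
              + weighted_bce(D(fake.detach(), y), zeros, labels, class_weights))
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # Generator update: try to make the discriminator output 1 for fakes.
    g_loss = weighted_bce(D(fake, y), ones, labels, class_weights)
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()


torch.manual_seed(0)
G, D = Generator(), Discriminator()
opt_g = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))

counts = torch.tensor([900.0, 90.0, 10.0])            # hypothetical class counts
class_weights = counts.sum() / (NUM_CLASSES * counts)  # inverse-frequency weights

real = torch.randn(8, IMG_DIM)                 # stand-in for flattened real images
labels = torch.randint(0, NUM_CLASSES, (8,))
d_loss, g_loss = train_step(G, D, opt_g, opt_d, real, labels, class_weights)
print(d_loss, g_loss)
```

In a real setup, the linear layers would typically be replaced by convolutional networks and `real` would come from a data loader; the weighting logic stays the same.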

The code above relies on the following key points:

- Weighted Loss Function: Assigns higher weights to underrepresented classes, ensuring that the model places more importance on them during training.

- One-Hot Encoding for Conditioning: Uses one-hot encoding of labels to condition the generator and discriminator on specific classes.

- Class-Specific Loss: Scales each sample's loss by the weight of its class, which helps mitigate the effects of class imbalance.

- Augmentation and Sampling: The approach can be extended with data augmentation or targeted sampling strategies to balance the dataset.
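One possible sampling strategy is to draw every mini-batch with an equal number of samples per class. The sketch below uses only the Python standard library; the function name `balanced_batches` and the toy labels are assumptions for illustration. Minority classes are sampled with replacement, so their items repeat across batches.

```python
import random
from collections import Counter, defaultdict


def balanced_batches(labels, batch_size, num_batches, seed=0):
    """Yield lists of dataset indices with the same number of samples per class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    classes = sorted(by_class)
    per_class = batch_size // len(classes)
    for _batch in range(num_batches):
        # Sample with replacement so rare classes can fill their quota.
        batch = [rng.choice(by_class[c]) for c in classes for _k in range(per_class)]
        rng.shuffle(batch)
        yield batch


# Toy imbalanced dataset (hypothetical): 90 / 9 / 1 samples per class.
labels = [0] * 90 + [1] * 9 + [2] * 1
batch = next(balanced_batches(labels, batch_size=6, num_batches=1))
print(Counter(labels[i] for i in batch))  # two samples from each class
```

Because rare samples are repeated, applying random augmentation (for example flips or crops) to them helps avoid feeding exact duplicates. In PyTorch, `torch.utils.data.WeightedRandomSampler` offers a comparable, probabilistic form of rebalancing.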

By combining these techniques, you can handle multi-class imbalance in conditional GANs while still generating realistic images for specific categories.

Related Post: Methods for balancing the training of a conditional GAN with class labels
