A WGAN can fail to converge for several reasons: an incorrectly implemented gradient penalty, a discriminator (critic) that is trained too little relative to the generator, or poor weight initialization. Check that the gradient penalty is implemented correctly and that the discriminator is updated more often than the generator.

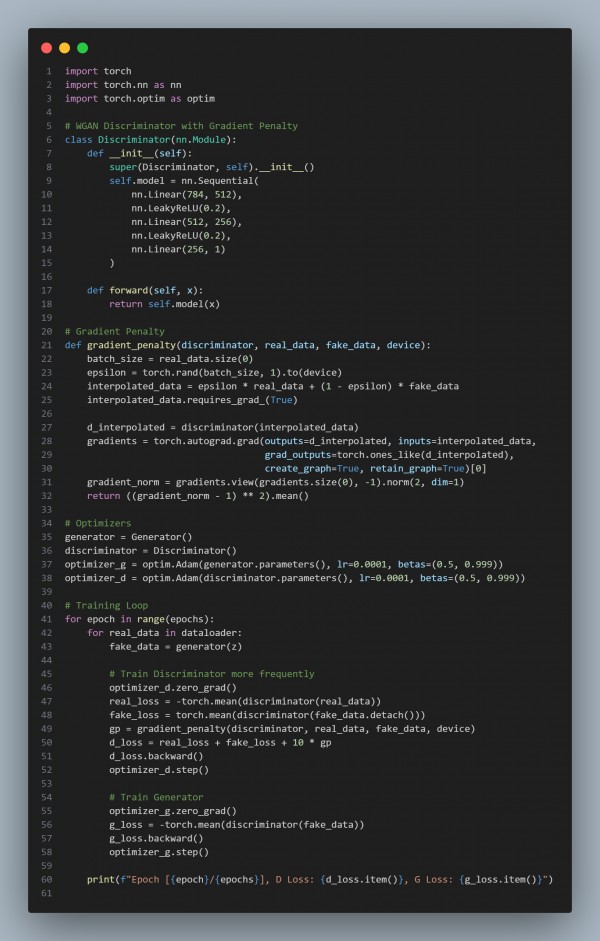

Here is a code reference you can use:
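The original snippet is not reproduced here as text, so the following is only a minimal PyTorch sketch of the WGAN-GP idea, not the exact code from the answer. The names `gradient_penalty`, `train_step`, `latent_dim`, `n_critic`, and `lambda_gp` are illustrative assumptions. The defaults `n_critic=5` and `lambda_gp=10.0` follow the values commonly used with WGAN-GP.

```python
import torch
import torch.nn as nn


def gradient_penalty(critic, real, fake):
    """WGAN-GP penalty: mean((||grad of critic at x_hat||_2 - 1)^2)."""
    batch_size = real.size(0)
    # One interpolation coefficient per sample, broadcast over the other dims.
    eps = torch.rand(batch_size, *([1] * (real.dim() - 1)), device=real.device)
    x_hat = (eps * real + (1 - eps) * fake.detach()).requires_grad_(True)
    scores = critic(x_hat)
    # create_graph=True keeps the graph so the penalty itself can be backpropagated.
    grads, = torch.autograd.grad(
        outputs=scores,
        inputs=x_hat,
        grad_outputs=torch.ones_like(scores),
        create_graph=True,
    )
    grad_norm = grads.reshape(batch_size, -1).norm(2, dim=1)
    return ((grad_norm - 1) ** 2).mean()


def train_step(generator, critic, opt_g, opt_c, real, latent_dim,
               n_critic=5, lambda_gp=10.0):
    """Run n_critic critic updates, then one generator update."""
    # For brevity the same real batch is reused for every critic update;
    # a full training loop would normally draw a fresh batch each time.
    for _ in range(n_critic):
        z = torch.randn(real.size(0), latent_dim, device=real.device)
        fake = generator(z).detach()  # do not update the generator here
        loss_c = (critic(fake).mean() - critic(real).mean()
                  + lambda_gp * gradient_penalty(critic, real, fake))
        opt_c.zero_grad()
        loss_c.backward()
        opt_c.step()

    # Generator update: raise the critic's score on generated samples.
    z = torch.randn(real.size(0), latent_dim, device=real.device)
    loss_g = -critic(generator(z)).mean()
    opt_g.zero_grad()
    loss_g.backward()
    opt_g.step()
    return loss_c.item(), loss_g.item()
```

Call `train_step` once per batch inside your epoch loop. If the critic loss does not settle, a common first check is that the penalty is computed on the interpolated samples rather than on the real or generated samples alone.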

The code above relies on the following key approaches:

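- Gradient Penalty: Compute the penalty on random interpolations between real and generated samples, and push the norm of the discriminator's gradient toward 1. This is what enforces the Lipschitz constraint.

- Discriminator Training: Update the discriminator several times for each generator update (five is a common choice) so that its estimate of the Wasserstein distance is reliable before the generator uses it.

- Optimization: Initialize the weights sensibly and use appropriate learning rates for both the generator and the discriminator. Adam with a small learning rate such as 1e-4 is a common starting point for WGAN-GP.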
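As a rough illustration of the optimization point, here is a minimal PyTorch sketch of weight initialization and optimizer setup. The helper `init_weights`, the tiny example networks, and the N(0, 0.02) initialization (a convention borrowed from DCGAN-style models) are assumptions for illustration, not part of the original answer. The Adam settings (`lr=1e-4`, `betas=(0.0, 0.9)`) are the ones commonly used with WGAN-GP and should be treated as a starting point.

```python
import torch
import torch.nn as nn


def init_weights(module):
    """Assumed init scheme: N(0, 0.02) weights and zero biases."""
    if isinstance(module, (nn.Linear, nn.Conv2d, nn.ConvTranspose2d)):
        nn.init.normal_(module.weight, mean=0.0, std=0.02)
        if module.bias is not None:
            nn.init.zeros_(module.bias)


# Tiny placeholder networks; replace them with your own architectures.
generator = nn.Sequential(nn.Linear(16, 64), nn.ReLU(), nn.Linear(64, 2))
critic = nn.Sequential(nn.Linear(2, 64), nn.LeakyReLU(0.2), nn.Linear(64, 1))

# .apply() visits every submodule, so each layer gets initialized.
generator.apply(init_weights)
critic.apply(init_weights)

# Same small learning rate for both networks, with beta1 = 0.
opt_g = torch.optim.Adam(generator.parameters(), lr=1e-4, betas=(0.0, 0.9))
opt_c = torch.optim.Adam(critic.parameters(), lr=1e-4, betas=(0.0, 0.9))
```

If training still diverges, lowering the learning rate of both optimizers is usually a cheaper experiment than changing the architecture.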

Working through these points should help you resolve the convergence problem.
