To fix slow inference with Hugging Face's GPT models on large inputs, you can truncate or summarize the inputs, use a smaller model, enable GPU acceleration, and optimize batch processing.
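
The opening sentence also lists batch processing. One common way to batch efficiently is to sort inputs by token length before grouping them, so that each batch is padded only to the length of its own longest member. The following is a minimal sketch of that idea, not part of the original snippet. The helper names `length_sorted_batches` and `padded_token_count` are hypothetical, and the lengths are assumed to come from your tokenizer.

```python
from typing import List, Sequence


def length_sorted_batches(lengths: Sequence[int], batch_size: int) -> List[List[int]]:
    """Group example indices into batches of similar token length.

    Hypothetical helper: sorting by length first means each batch is padded
    only to its own longest member, which wastes fewer padding tokens.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    # Indices ordered from longest to shortest input (stable for ties).
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    # Slice the ordered indices into consecutive batches.
    return [order[start:start + batch_size] for start in range(0, len(order), batch_size)]


def padded_token_count(lengths: Sequence[int], batches: List[List[int]]) -> int:
    """Total tokens processed when every batch is padded to its longest member."""
    return sum(max(lengths[i] for i in batch) * len(batch) for batch in batches)
```

For example, with lengths `[10, 500, 12, 480]` and `batch_size=2`, the sorted grouping is `[[1, 3], [2, 0]]` and pads to 1,024 tokens in total, versus 1,960 tokens when batching in the original order. Keep the returned indices so you can map outputs back to the original order. When you tokenize each batch with `padding=True` for a decoder-only GPT model, set the tokenizer's `padding_side` to `"left"` and give it a pad token (GPT-2 defines none, so reusing `eos_token` is common).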

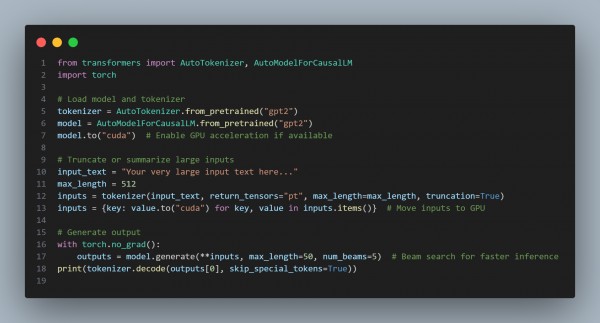

Here is the code snippet you can refer to:

The code above uses the following key techniques:

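- Truncate Inputs: Limits the input to a fixed maximum number of tokens (e.g., 512), never more than the model's context window, so each forward pass processes less text.

- GPU Acceleration: Moves the model and inputs to the GPU for significantly faster inference.

- Efficient Decoding: Caps the output length (e.g., with `max_new_tokens` or `max_length`) and chooses the decoding strategy deliberately: greedy decoding is the fastest, while beam search can improve output quality at extra cost.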
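
Because the original snippet is only available as an image, here is a minimal sketch of the same three techniques. It assumes the `torch` and `transformers` packages and the public `gpt2` checkpoint; the function names `load_model` and `generate_fast` and the limits (512 input tokens, 50 new tokens) are illustrative choices, not taken from the image. It uses greedy decoding (`num_beams=1`) to avoid the extra per-step compute of beam search.

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer


def load_model(model_name: str = "gpt2"):
    """Load a causal LM on the GPU when available (fp16 there, fp32 on CPU)."""
    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=dtype)
    model.to(device)  # GPU acceleration: weights live on the chosen device
    model.eval()      # disable dropout for inference
    return tokenizer, model, device


def generate_fast(prompt: str, tokenizer, model, device: str,
                  max_input_tokens: int = 512, max_new_tokens: int = 50) -> str:
    """Truncate the prompt, decode greedily on `device`, return only the new text."""
    # Truncation: keeps the first `max_input_tokens` tokens (the tokenizer's
    # default right-side truncation). Keep this below the model's context size.
    inputs = tokenizer(prompt, return_tensors="pt", truncation=True,
                       max_length=max_input_tokens).to(device)
    with torch.inference_mode():  # skip autograd bookkeeping
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,         # cap generation length
            do_sample=False, num_beams=1,          # greedy: no beam search overhead
            pad_token_id=tokenizer.eos_token_id,   # GPT-2 defines no pad token
        )
    # Drop the prompt tokens and decode only what the model generated.
    prompt_len = inputs["input_ids"].shape[1]
    return tokenizer.decode(output_ids[0, prompt_len:], skip_special_tokens=True)
```

Usage might look like `tokenizer, model, device = load_model("gpt2")` followed by `generate_fast(long_text, tokenizer, model, device)`. GPT-2's context window is 1,024 tokens, so a 512-token input limit leaves room for the generated tokens.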

Hence, by applying the techniques above, you can reduce inference time when using Hugging Face's GPT models on large inputs.